1,5×105106.

(14)Here σt increases as the simulation proceeds.This will improve the ergodicity of ASAMC,because the isolated regions included in X (t)tend to be far and far separated.Under the above setting,ASAMC was run 20times independently.On average,each run costs 9.5m CPU time on a computer of 2.8GHz (all simulations reported in this section were done on this machine).Table 3summarizes the computational results produced by ASAMC and other algorithms described below.

SAMC and SA were also applied to this example.SAMC was run 20times under the same setting as ASAMC except for ι=5.Other settings of ι,say,ι=0,1,2and 10,were also tried.The results are similar to or worse than those reported in Table 3.We note that for SA the sample space is not necessarily restricted to a compact region,but the restriction can often improve its performance,as the restriction makes the search focused on a small region and avoids blind search in the broad sample space.For each of the linear and geomet-ric cooling schedules,SA was run 20times with the highest temperature T 1=10and the lowest temperature T 500=0.01.Each run consisted of N =2×106iterations.The proposal distribution is the same as that used by ASAMC except for σk =√T k .For SA,we have also tried other cooling schedules,for example,linear and geometric schedules with a higher value of T 1and a scaled reciprocal schedule (Szu 1986).The results are similar to or worse than those reported in Table 3.

For a thorough comparison,BFGS was also applied to this example.Like back-propagation,BFGS is gradient-based,but it usually converges faster than back-propagation.As commented by Hastie et al.(2001),back-propagation can be very slow,and for that rea-son it is usually not the algorithm of choice.Because of the dif?culty in evaluation of the Hessian matrix,second-order techniques such as Newton’s method are not attractive here.

The conjugate gradient and BFGS algorithms are often recommended for MLP training,as they avoid explicit evaluation of the Hessian matrix while still providing faster convergence. For the derivation of BFGS,refer to Bishop(1995)or a standard text such as Polak(1971) or Fletcher(1987).BFGS has been implemented in many software,such as S-PLUS and R, where a MLP can be trained by BFGS by calling the command nnet(·).The software R is publicly available at https://www.doczj.com/doc/1c17863440.html,/.For this example,BFGS was also run20times independently,and it usually converged within300iterations in each run.

Table3shows that both ASAMC and SAMC have made dramatic improvements over SA and BFGS for this example.ASAMC produced perfect solutions in19out of20runs with an average of1.25×106iterations,whereas SA never produced a perfect solution even with more iterations in each run.Table3also indicates that ASAMC is better than SAMC in optimization.This is consistent with the results reported in Table2.

4.1.2Two-spiral problem

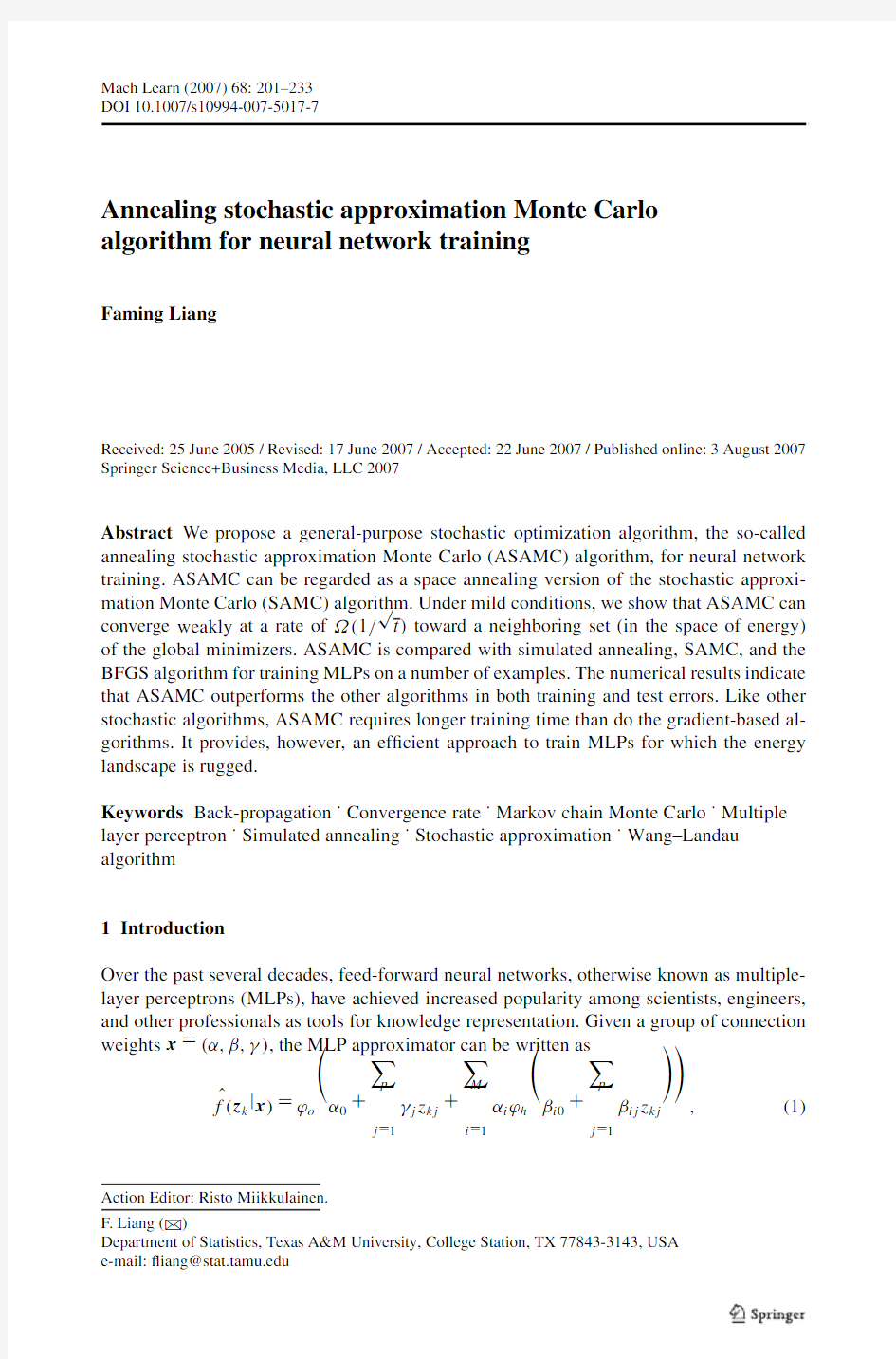

Another famous benchmark example for MLP training is the two spiral problem(Lang and Witbrock1989).The task requires the MLP to learn a mapping that distinguishes between points on two intertwined spirals shown in Fig.4.This problem can be solved using MLPs with multiple hidden layers(with or without shortcut connections).Lang and Witbrock (1989)solved the problem using a2-5-5-5-1MLP with shortcut connections(138train-able connection weights).Fahlman and Lebiere(1990)solved the problem using cascade-correlation networks with12–19hidden units,the smallest network having114connections. Wong and Liang(1997)solved the problem using a2-14-4-1MLP without shortcut connec-tions.It is generally believed that this problem is very dif?cult to solve using the standard one-hidden-layer MLP,because it requires the MLP to learn a highly nonlinear separation of the input space.Baum and Lang(1991)reported that a solution could be found using a2-50-1back-propagation MLP,but only the MLP has been initialized with queries.

For this problem,we trained a2-30-1MLP,which consists of a total of121connections. In ASAMC,the sample space was restricted to the region X=[?50,50]d,and was parti-tioned into250subregions:E1={x∈X:U(x)≤0.2},E2={x∈X:0.249.8}.In simulations,we setψ(x)=exp{?U(x)},

t0=10000,ι=0,andΔ=5,and employed the same proposal distribution as in the8-parity example.ASAMC was run20times independently,and each run consisted of a maximum of107iterations.The simulation stopped early if a solution with U(x)<0.2was found. Figure4(a)shows the classi?cation map learned in one run,which indicates that a MLP with30hidden units is able to separate the two spirals successfully.Figure4(b)shows the average classi?cation map over the20runs by the ensemble averaging approach(Perrone 1993).Each solution in the ensemble was weighted equally.Ensemble averaging smoothes the classi?cation boundary and improves the generalization performance of the MLP.

For comparison,SAMC,SA and BFGS were also applied to this example.SAMC was run20times under the same setting as ASAMC except forι=5.Other choices ofι,0,1,2 and10,have also been tried.The results are similar.BFGS was run20times independently, and it converged within1000iterations in each run.In the runs of SA,the sample space was restricted to X=[?50,50]d as in ASAMC,and the parameters held the same setting as used in the8-parity example except that the total number of iterations was increased to N=107.

Table4summarizes the results produced in the above runs.The comparison shows that ASAMC has made a dramatic improvement over SAMC,SA and BFGS for this example.

Fig.4Classi?cation maps learned by AESAMC with a MLP of30hidden units.The black and white points show the training data for two different spirals.a Classi?cation map learned in one run.b Classi?cation map averaged over20runs.This?gure demonstrates the success of ASAMC in minimization of complex functions

Table4Comparison of ASAMC,SAMC,SA and BFGS for the two-spiral example.The notations are the same as in Table3

Algorithm Mean SD Minimum Maximum Proportion Iteration(×106)Time ASAMC0.6200.1910.1873.23157.0794m SAMC2.7270.2081.0924.089010.0132m SA-117.4850.7069.0222.06010.0123m SA-26.4330.4503.0311.02010.0123m BFGS15.500.89910.0024.000–3s

ASAMC found perfect solutions in15out of20runs with an average of7.07×106iterations, while SAMC,SA and BFGS failed to?nd perfect solutions in all runs.

We note that the MLP structure considered above is not minimal to this problem. ASAMC can?nd perfect solutions to this problem using a MLP with27hidden units(109 connections).Under the same setting as used above,ASAMC found perfect solutions using this small MLP in5out of20runs.The success rate can be increases by increasing the number of hidden units.In our simulations,ASAMC succeeded in19out of20runs when the number of hidden units is35,and succeeded in20out of20runs when the number of

hidden units is40.

The n -parity and two-spiral problems represent,as explained above,different types of learning tasks,although both are very challenging.The former is to learn a mapping which is highly disconnected in the input space,and the latter is to learn a mapping which is highly nonlinear in the input space.The success of ASAMC in both problems suggests its superiority in minimization of complex functions.

4.2Real-world examples

In this section,ASAMC was tested with three real-world examples for which all attributes are numeric and there are no missing values.Refer to Bishop (1995)for discussions on the treatments for missing values in the context of neural networks,whilst this is beyond the scope of this paper.For the real-world problems,we are often concerned with the gener-alization performance of MLP.Hence,the regularization term was included in the energy function (2)for all the three examples.The regularization parameter was set to 0.05.In ap-plying ASAMC and SAMC to these examples,the sample space was restricted to the region X =[?50,50]d ,and the parameters were set as follows:ψ(x )=exp {?U(x )},t 0=1000,and Δ=5.The proposal distribution employed in this section is the same as that used for the 8-parity example except that σt was ?xed to 1.Each algorithm was run 50times inde-pendently,and each run consisted of 2.5×105iterations.In all the three examples,both ?h (z)and ?o (z)were set to the sigmoid function,the input variables were normalized to have mean 0and variance 1,and ιwas set to 0for ASAMC and 5for SAMC.For SAMC,other choices of ι,e.g.,0and 2,were also tried.The results are similar.

As implied by Theorem 8.3,both ASAMC and SAMC will converge weakly toward the set E ?.Although it may be easy for them to reach E ?,it may not be easy for them to locate a global energy minimum exactly.So we suggest the best solutions sampled by SAMC and ASAMC be further subject to a post minimization procedure,say,conjugate gradient or a few hundred steps of MH moves performed at a low temperature.For the three examples,the MH moves were performed at temperature t =10?4.

SA was also applied to the three examples in this section.For each example,SA was run 50times with the highest temperature T 1=10and the lowest temperature T 500=10?4.Each run consisted of N =2.5×105iterations.The sample space was restricted to [?50,50]d as in ASAMC and SAMC.The proposal distribution was the same as that used by ASAMC except for σk =√T k .Both the geometric and linear cooling schedules were tried.Since the results from the linear cooling schedule are inferior to those from the geometric one,only the results from the geometric cooling schedule are reported below.

4.2.1BUPA liver disorders

This dataset was from Richard S.Forsyth at BUPA Medical Research Ltd and can be down-loaded from the machine learning repository at UCI (https://www.doczj.com/doc/1c17863440.html,/~mlearn/MLRepository.html ).Each sample in the dataset constitutes the record of a single male individual.Five attributes (mean corpuscular volume,alkaline phosphatase,alamine aminotransferase,aspartate aminotransferase,and γ-glutamyl transpeptidase)in each record are results from blood tests which are thought to be sensitive to liver disorders that might arise from excessive alcohol consumption.The sixth feature is the number of drinks per day.There are a total of 345samples in the dataset.

In our study,200samples were randomly chosen as the training samples and the rest as the test samples.The data was modeled by a MLP with 2hidden units and shortcut connections.The total number of connections is 23.The MLP was trained by ASAMC,

Table5Comparison of the ASAMC,SAMC,SA and BFGS algorithms for the BUPA liver disorders ex-ample.The training error is measured as the minimum energy value found in each run,and the test error is measured as the misclassi?cation rate for the test samples

Algorithms Training error Test error rate(%)Time7 mean1min2max3mean4min5max6

ASAMC32.838(0.053)32.48333.20833.556(0.251)31.72435.86217s SAMC32.994(0.050)32.48334.27034.634(0.218)31.03440.00017s SA33.239(0.063)32.48334.53334.483(0.278)29.65540.69014s BFGS33.690(0.112)32.69636.20034.828(0.290)29.65540.6901s

1Average of the training errors over50runs(the number in the parentheses represent the standard deviation of the average)

2Minimum training error over50runs

3Maximum training error over50runs

4Average misclassi?cation rate over50runs(the number in the parentheses represent the standard deviation of the average)

5Minimum misclassi?cation rate over50runs

6Maximum misclassi?cation rate over50runs

7CPU time cost by a single run on a core Intel Xeon X5355with speed2.66GHz

Table6Comparison of the ASAMC,SAMC,SA and BFGS algorithms for the Pima Indians diabetes ex-ample.Refer to Table5for notations of the table

Algorithms Training error Test error rate(%)Time mean min max mean min max

ASAMC82.986(0.035)82.78483.49419.60(0.174)17.7122.4063s SAMC83.356(0.054)82.78583.81721.28(0.264)18.2326.0463s SA83.517(0.066)82.78584.78221.13(0.238)17.7123.9653s BFGS83.841(0.103)82.78687.48021.77(0.214)18.7526.041s

SAMC,SA and BFGS.The numerical results are summarized in Table5.In ASAMC and SAMC,the sample space were partitioned into250subregions:E1={x∈X:U(x)≤0.2}, E2={x∈X:0.249.8}.For this example, ASAMC outperforms the other algorithms in both training and test errors.

4.2.2Pima Indians diabetes classi?cation

The data were collected by the National Institute of Diabetes and Digestive and Kidney Diseases and can be downloaded from the machine learning repository at UCI.The dataset contains768records of female Pima Indians,characterized by eight physiological descrip-tors.The task is to predict presence or absence of diabetes.An early study of this data was carried out by Smith et al.(1988)using a MLP.In their study,the MLP was trained using 576samples,and an accuracy rate of76%was achieved on the rest192samples.

We modeled the data by a MLP with3hidden units(without shortcut connections)and a total of31connections.The MLP was trained using ASAMC,SAMC,SA and BFGS on the?rst576samples.The results are summarized in Table6.In ASAMC and SAMC,

Table7Comparison of the ASAMC,SAMC,SA and BFGS algorithms for the email spam identi?cation example.Refer to Table5for notations of the table

Algorithms Training error Test error rate(%)Time mean min max mean min max

ASAMC115.833(0.501)111.198123.4556.464(0.051)5.5347.3577.3m SAMC129.113(0.665)122.241147.1216.629(0.048)5.9907.1618.0m SA120.406(0.609)112.632129.5906.652(0.049)5.8597.487 6.9m BFGS124.303(1.056)114.713141.6846.665(0.052)5.8597.292 3.4s

the sample space were partitioned into500subregions with an equal energy bandwidth, E1={x∈X:U(x)≤0.2},E2={x∈X:0.299.8}.The comparison shows that ASAMC outperforms the other algorithms in both training and test errors.ASAMC achieved an accuracy rate of about81%,which is better than76%obtained by Smith et al.(1988).

4.2.3Email spam identi?cation

The data were collected by Hewlett-Packard laboratories,Palo Alto,California in a study to screen email for“spam”and can be downloaded from the machine learning repository at UCI.The data consists of information from4601email messages.For all4601email messages,the true outcome(email type)email or spam is available,along with the relative frequencies of57of the most commonly occurring words and punctuation marks in the email message.

In this study,3065samples were randomly chosen as the training samples and the rest as the test samples.The data was modeled by a MLP with2hidden units and without shortcut connections,which consists of119connections.The MLP was trained by ASAMC,SAMC, SA and BFGS.The numerical results are summarized in Table7.In ASAMC and SAMC, the sample space were partitioned into1250subregions with an equal energy bandwidth: E1={x∈X:U(x)≤0.2},E2={x∈X:0.2248.2}.For this example,ASAMC again outperforms the other training algorithms in both training and test errors.

The three real-world examples represent applications of ASAMC to classi?cation MLPs with different sizes of training sets.If,for example,we use“samples×attributes”to mea-sure the size of a training set(suppose that the numbers of samples and attributes have about the same ratio in each training set),then the training set sizes of the three examples are1200,4608and174,705,respectively.So the three examples represent problems with a small,moderate and large size of training set,respectively.The success of ASAMC in the three examples suggests that ASAMC can make reliable training and predictions for problems with various sizes of training sets.

4.3Signi?cance of ASAMC results

ASAMC has been compared with SAMC,SA and BFGS on two benchmark examples and three real-world examples.The signi?cance of the ASAMC results has been assessed us-ing the two-sample t-tests.The corresponding p-values are summarized in Table8.The hypotheses corresponding to the?rst entry of Table8are H0:ASAMC has the same train-ing error as SAMC for the8-parity example versus H1:ASAMC has a smaller training error

Table8The p-values of the two-sample t-tests(with unequal variances)for the training and test errors produced by ASAMC versus those produced by SAMC,SA and BFGS.For SA,only the results generated with the geometric cooling schedule and from the restricted sample space are considered

Example Training error Test error

SAMC SA BFGS SAMC SA BFGS

8-parity5.2×10?46.4×10?75.6×10?12–––

2-spiral3.1×10?93.1×10?121.6×10?13–––

BUPA1.7×10?22.2×10?61.1×10?98.1×10?47.5×10?36.4×10?4 Pima7.0×10?83.0×10?104.2×10?114.3×10?76.5×10?73.0×10?12 Spam0.04.4×10?82.2×10?101.0×10?24.6×10?33.5×10?3

than SAMC for the8-parity example.The hypotheses corresponding to other entries are sim-ilar.Table8shows that for all MLP examples studied in this paper,ASAMC can outperform signi?cantly(at a level of0.01)SAMC,SA and BFGS in both training and test errors.Like other stochastic algorithms,ASAMC is slower than the gradient-based algorithms.How-ever,as indicated by the running times reported above,its training speed is still acceptable to us.

5Discussion and future work

The strength of ASAMC comes from two aspects,its self-adjusting mechanism and its pro-gressive shrinkage for the sample space.The self-adjusting mechanism makes ASAMC ca-pable of overcoming the local-trap problems encountered in MLP training,and the sample space shrinkage accelerates exploration for low energy regions.We note that for a given problem,the signi?cance of ASAMC should only apply to the appropriately chosen net-works.For the networks which over?t to the data excessively,ASAMC and other training algorithms may perform equally in both training and test errors.

In this paper,we considered only the MLPs that have a single layer of hidden units and use the sigmoid function as activation functions.Such MLPs are rather general.Cybenko (1989),Funahashi(1989),Hornik et al.(1989),and Barron(1993)have shown the so-called universal approximation theorem:a MLP with a single hidden layer can approximate any given training set uniformly by increasing the number of hidden units,assuming that the acti-vation function of the hidden units is a nonconstant,bounded,and monotonically increasing continuous function.If the MLP is modi?ed,e.g.,to include multiple hidden layers,multiple output units,or to use other functions as activation functions,ASAMC should still work well given its superiority in function optimization.It is worth noting that the tangent activation function often gives rise to faster convergence of training algorithms than does the sigmoid function(Bishop1995,p.127).

ASAMC avoids the requirement for the gradient information of the energy function,so it can be applied to the optimization problems for which the gradient information is not available,e.g.,decision tree learning problems(Sastry et al.2002).This is similar to SA. Besides optimization,SAMC and ASAMC can also be applied to solve the problems of function approximation,as illustrated by the knapsack example.A neural learning exam-ple in this respect is evidence evaluation for Bayesian MLPs.As pointed out by MacKay (1992b),the Bayesian evidence can provide a useful guideline of architecture selection for

电镀铜

电镀铜 工程培训材料- 电镀铜 铜具有良好的导电性和良好的机械性能,能与其它金属形成良好的金属键,获得镀层间良好的结合力。因此,印制析制作过程中采用镀铜工艺。 1 电镀铜的机理 镀液的主要成分是硫酸铜和硫酸。在直流电的作用下,阴阳极发生电解反应,阳极铜失去子变成Cu2+溶于溶液中,阴极Cu2+获得电子还原成Cu原子。具体反应如下: 1.1 阴极反应 Cu2++ 2e-? Cu 副反应Cu+ + e-? Cu Cu2+ + e-? Cu+ 由于铜的还原电位比H+高得多,所以一般不会有H2析出。 1.2 阳极反应 Cu ? 2e-? Cu2+ 副反应Cu ? e-? Cu+ 在足够硫酸环境下,亦有如下反应 2 Cu+ + 1/2O2 + 2H+? 2 Cu2+ + H2O 当硫酸含量较低时,有如下反应 2 Cu+ + 2H2O ? 2 Cu(OH)2 + 2H+ 2 Cu(OH)2 ? Cu2O + H2O Cu2O即成“铜粉”,有Cu2O出现时镀层会变得疏松粗糙。 2 电镀锡机理 电镀锡与电镀铜机理一样利用电解作用获得金属镀层。 阴极反应Sn2+ + 2e- ? Sn 阳极反应Sn - 2e- ? Sn2+ 镀锡溶液主要成分是硫酸亚锡与硫酸。 3 电镀线各药水缸成份及作用 3.1 除油缸 主要成份为酸性除油剂,它可以清除板面污渍,指纹及菲林碎等杂质,获得清洁的基铜表面。 3.2 微蚀缸 主要成份硫酸钠和硫酸溶液,如本公司所用的也有硫酸和双氧水型。 微蚀作用可以除待镀线路与孔内镀层的氧化层,增加其表面的粗糙度,从而提高与镀层有结合力。 3.3 浸酸缸 主要成份H2SO4 浸酸缸可以去除铜表面轻微的氧化层,同时防止污物污染铜缸。

电镀废水处理方法

电镀废水处理方法 一电镀废水的来源 电镀废水主要包括电镀漂洗废水、钝化废水、镀件酸洗废水、刷洗地坪和极板的废水应急由于操作或管理不善引起的“跑、冒、滴、漏”产生的废水,另外还有废水处理过程中自用水以及化验室的排水等。 二电镀废水的性质和分类 1 电镀废水的性质 电镀废水中主要的污染物为各种金属离子,常见的有铬、铜、镍、铅、铝、金、银、镉、铁等;其次是酸类和碱类物质,如硫酸、盐酸、硝酸和氢氧化钠、碳酸钠等;有些镀液还是用了催化剂、添加剂和颜料等其他物质,这些物质大部分是有机物。另外在镀件基材的预处理过程中漂洗下来的油脂、油污。氧化皮、尘土等杂质也都被带入了电镀废水中,是电镀废水的成分复杂。其所造成的污染大致为:化学毒物的污染,有机需氧物质的污染,无机固体悬浮物的污染以及酸、碱、热等的污染和有色、泡沫、油类等污染。但只要的污染时重金属离子、酸、碱和部分有机物的污染。 2 电镀废水的分类 电镀废水一般按废水所含的主要污染物分类。如含氰废水,含铬废水,含镍、铜、锌、铬废水,含酸废水等。 当废水中含有一种以上的主要污染物时(如氰化镀镉,既有氰化物又有镉),一般仍按其中一种污染物分类;当同一镀种有几种工艺方法时,也有按不同镀种工艺再分成小类,如把含铜废水再分成焦磷酸镀铜废水,硫酸铜镀铜废水等。当几种不同镀种废水都含铜一种主要污染物时,如镀铬、钝化废水混合在一起时就统称为含铬废水。若分质监理系统时,则分别为镀铬废水、钝化废水,一般将不同镀种和不同主要污染物的废水混合在一起时的废水统称为电镀混合废水。 三电镀废水单元处理方法 1 化学沉淀法 向废水中投加某种化学物质,使之与废水中欲厂区的污染物发生直接的化学反应,生成难溶的固体物二分离除去的方法,称为化学沉淀法。它适用于处理含金属离子的电镀废水。 用于电镀废水处理的沉淀法主要由氢氧化物沉淀法、钡盐法、碳酸盐法、硫化物沉淀法、置换沉淀法及铁氧体沉淀法。 1)氢氧化物沉淀法:电镀废水中的许多中金属离子可以删除氢氧化物沉淀二得以去除。 2)钡盐沉淀法:主要用于处理含六价铬的废水,采用的沉淀剂有碳酸钡、硫化钡、硝酸钡、氢氧化钡等。 3)硫化物沉淀法:许多重金属能形成硫化物沉淀。大多数金属硫化物的溶解度比其氢氧化物的溶解度要小很多,因此采用硫化物可使中金属得到等完全地去除。 2 混凝沉淀法 混凝法即向废水中投加某种混凝剂,使水中难以沉淀的胶体悬浮颗粒或乳状污染物失去稳定后,在一定的水力反应条件下,好像碰撞凝聚,形成较大的颗粒或絮状物而沉淀分离。 3 化学氧化还原法 在化学法处理电镀废水中,广泛利用氧化还原把废水中某些有毒的污染物变成无毒害物,从而达到净化处理的目的,这种方法称为氧化还原法,这是一种最终处理有毒废水的主

国内电镀废水处理现状

国内电镀废水处理现状 国内电镀行业屑于劳动密集型的“三来一补”企业,耗能高、排污量大、产品附加值相对较低,对环境的污染危害性较大,属重污染行业,已不符合现今发展循环经济的理念,因此,政府对这类工艺落后、污染严重的企业态度明确,以政策法规和技术支撑为保障,实施生态化改造,强化管理、逐步淘汰,对超标排放而又治理无望的企业,注册期到,一律终止,工商部门不再续期办理营业执照。执行“严格管理、提高效益、保护环境、实现资源有效利用”的策略。 珠三角电镀品种有印制电路板、电子元器件、电脑配件、汽车部件、眼镜、卫生洁具、摩托车配件、家电、灯具、门锁、五金件、首饰、钟表等。电镀工艺有普通电镀、化学镀、复合电镀、脉冲电镀、电铸、机械镀、真空蒸镀、离子镀。单一金属有锌、铜、镍、铬、锡、金、银、铀、铑、钯、铟等。二元合金有铜基的铜镍、铜锌、铜锡;锌基的锌铜、锌镍、锌铁、锌钴;镍基的镍磷、镍钴;锡基的锡锌、锡镍、锡钴。三元合金有铜镍铬、锡钴锌。在色彩方面有黑镍、沙丁镍、黑铬、沙丁铬、枪色、古铜、光亮铜、光亮镍、彩色钝化膜、蓝白色。基体材料有金属、铝、工程塑料等。 (一)管理现状 随着经济的发展,环境保护的工作越来越得到重视,国家成立了环境保护部,2009年,各省相继成立环境保护厅,从组织上给予开展该项工作的保证。政府对电镀企业进行强制管理是从2002年正式开始,从这时起,电镀废水的处理有了较快的发展,人们由不认识到较熟练地掌握废水处理技术,设备由简单的几个池子,发展到今天的半自动控制的连续处理,技术、设备、管理上都取得了很大的成绩,一些难处理、多年难以解决的技术问题都已克服,政府倡导的环保意识已普及,企业界接受了“严格管理、提高效益、保护环境、实现资源有效利用”这个理念,并逐渐自觉接受强制管理。 1.政策管理 (1)国家出台了《中华人民共和国固体废物污染环境防治法》,各省市也出台了相应的文件,对产生工业固体废物(电镀废水厂产生大量污泥)的单位强行建立、健全污染环境治理赍任制度:①电镀企业成立时要经过严格审批,要备齐一系列资料,如环保审批批文,污染防治设施的评估报告书和验收资料,生产工艺流程图,投资生产规模,产品种类和数量、原辅材料种类及数量、产生的工业固体废物特别是危险废物种类数量及其收集、忙存、转移、处理情况等;②执法人员采取现场监测、采集样品、拍摄现场等措施进行监管;③重视电镀企业布局,在深圳等经济发达地区已不允许再新建电镀厂,已有的集中到工业园区,按环保局的标准进行整改,达不到要求的强制关闭。 (2)国家实行工业固体废物申报登记制度,要求有关单位如实向环保主管部门申报工业固体废物的种类、产生量、把存、流向、处置等有关资料,如有重大改变,应当及时办理变更申报登记,产生危险废物的单位必须按照国家有关规定制定危险废物管理计划、意外事故的防范措施和应急预案,并向环保主管部门备案。

电镀铜配方工艺、盲孔电镀配方工艺及电镀处理技术开发

电镀系列之二:电镀铜工艺及配方技术开发 线路板在制作过程中,通孔经过孔金属化后往往要经过电镀铜来加厚孔铜,增强电路的耐候性能。通常涉及到的镀铜过程包括普通电镀铜以及盲孔填孔。线路板中使用的电镀铜技术主要还是酸铜,其镀液组成为硫酸、硫酸铜、氯离子、光亮剂(B)、整平剂(L)以及载运剂(C)。B L C B B C B C B C B B B B L B L C B B C B C B C B B B B L B C L B C L B C L B C L 图1、添加剂B、C、L 的作用机理 光亮剂(B):吸附于低电流密度区并提高沉积速率; 整平剂(L):快速地吸附到所有受镀表面并均一地抑制电沉积; 载运剂(C):携带光剂进入低电流密度区,提高低电流密度区的沉积速率;三剂一起作用,达到铜面、孔铜一起电镀,产生光亮镀层。 (1)PCB 普通电镀铜 禾川化学经过研究,开发出一款适用于PCB 孔电镀铜药水,具有以下特点: (1)镀液容易控制,镀层平整度高; (2)镀层致密性好,不易产生针孔; (3)可快速获得镜面光亮及整平特性; (4)添加剂消耗量稳定,消耗量少; (5)通孔电镀效果好,TP 值大于80%,延展性,热应力等参数符合PCB

标准。 图2、PCB电镀铜效果图 (2)FPC普通电镀铜 禾川化学经过研究,开发出一款适用于FPC孔铜电镀的药水,具有以下特点:(1)镀液容易控制,镀层平整度高; (2)镀层延展性好,耐折度好; (3)可快速获得镜面光亮及整平特性; (4)添加剂消耗量稳定,消耗量少; (5)通孔电镀效果好,TP值大于120%,延展性,热应力等参数符合PCB 标准。 图3、FPC电镀铜效果图 (3)盲孔填空电镀 填孔电镀添加剂的组成:光亮剂(B又称加速剂),其作用减小极化,促进铜

电镀废水处理论文

前言 据了解,我国的电镀工厂大约有一万多家,每年排放的电镀废水约40亿m2.含Cr(VI)废水是电镀行业的主要废水来源之一。Cr(VI)具有强毒性,是国际抗癌眼睛中心和美国毒理学组织公布的致癌物,具有明显的致癌作用,Cr (VI)化合物在自然界不能被微生物分解,具渗透前移性较强,对人体有强烈的致敏作用。因此,对含Cr(VI)电镀废水的妥善处理,是电镀行业中一个必须解决的环境问题。 电镀行业是通用性强、使用面广、跨行业、跨部门的重要加工工业和工艺性生产技术。由于电镀行业使用了大量强酸、强碱、重金属溶液,甚至包括镉、氰化物、铬酐等有毒有害化学品,在工艺过程中排放了污染环境和危害人类健康的废水、废气和废渣,已成为一个重污染行业。 除了少部分国有大型企业、三资企业及新建的正规专门电镀厂拥有国际先进水平的工艺设施,大多数中小型企业仍然使用简陋而陈旧的设备,操作方式以手工操作为主。我国电镀行业存在的主要问题有: (1)厂点多、规模小,专业化程度低。(2)装备水平低。表现在一方面缺少机械装备,以手工操作为主;另一方面是技术装备水平不高,自动化程度低,可靠性差,产品质量部稳定。(3)管理水平较低,经济效益较差。(4)电镀污染治理水平低,有效治理率低。(5)经营粗放,原材料利用率低。一大部分甚至绝大部分宝贵的原材料流失并变成了污染物。在清洁生产审计中调查的10条电镀加工线中,平均用水量为0.82t/m2,是国外的10倍。 鉴于铬污染的严重危害性,污水综合处理排放标准规定总铬与Cr(VI)的最高允许排放溶度分别为1.5mg/L和0.5mg/L。在控制排放溶度与总量的同时,发展高效、经济的水处理工艺成为研究的热点。 目前,国内外许多研究者对高盐废水作了许多研究,对于含Cr(VI)电镀废水的处理主要采用化学还原法、电解法、微生物法、萃取法等。化学法是当前应用最广泛的一种方法,主要有化学还原法和化学沉淀法。但由于在实际运作中,投料量和PH值较难控制,如果控制不当,可能造成处理效果不佳,如果投料过量,浪费资源,成本不但增加,而且出手COD也会增加,还易形成[Cr2(OH)2SO3]2+络离子,加碱亦难沉淀;如果投料不足,则Cr(VI)还原不充分,出水Cr(VI)还原不充分,出水中Cr(VI)含量不达标。同时,化学法二

填孔电镀品质可靠性的研究和探讨.

2011秋季国际PcB技术/信息论坛孔化与电镀Hole Processing and Plating 填孔电镀品质可靠性的研究和探讨 Paper Code:S-021 彭涛田维丰刘晨姜雪飞彭卫红刘东 深圳崇达多层线路板有限公司 摘要填孔电镀是满足PCB高密度化、更小化、更便宜的一种重要途径。随着电子行业和PCB 行业的高速发展,填孔电镀的需求量增长迅速,填孔电镀的应用也日广泛,填孔电镀 的生产难度也相应增加。填孔电镀是一种新工艺流程,相对普通电镀铜而言,其反应 机理复杂,过程控制更难监控,品质可靠性低。本文主要讲述填孔电镀反应机理,并 通过DOE试验来探讨如何提升填孔电镀工艺能力和品质可靠性。 关键词填孔电镀i填充率 中图分类号:TN41文献标识码:A 文章编号:1009—0096(2011增刊-0153-06 The research and investigation of filling plating quality and reliability PENG Tao TIAN Wei-feng L1UChert JIANGXue-fei PENG Wei-hong L1UDong

Abstract In the process of PCB jointing brigade,the via air ladder Call bring on the jointing solder air and it will debase the jointing intension and quailty dependability,because of above.more and more customers require the via ofHDl circuitry board should be done by filling?in plating.In the relafiv9ly ofnormal plating,the reaction mechanism offilling—in plating is more complex,the process control is moFe difficult to be watched,and the quailty dependability is worse.The letterpress tell of the reaction mechanism of filling—in plating,discuss the way how to step up the technical ability offilling—in plating by DOE experiment and quailty dependability. Key words filling plating;filling ratio 1为什么需要填孔 1.1PCB高密度化、高精细化的发展趋势 随着电子产业的高密度、高精细化,HDI板焊盘直径和间距的逐渐减小,使盲孔孑L径也逐渐减小,盲孔厚径 比随之加大,普通的电镀药水和传统的电镀工艺不能达到盲孔镀铜的效果,为保证盲孔d6质的可靠性,更多生 产厂家选择专用盲孔电镀药水或增加填孔流程。 .】53. 孔化与电镀HoIeProcessing and Plaling 2011秋季国际PcB技术,信息论坛 圉1盲孔高厚径比使品质可靠性降低

含镍电镀废水处理

本文介绍含镍电镀废水处理方案,通过化学沉淀法,可以把镍处理至表三标准,镍浓度处理至0.1mg/L以下。 l 工具/原料 l 含镍电镀废水 l 化学镀镍废水 l 锌镍合金处理剂 l 重金属捕集剂 l 聚合氯化铝PAC、聚丙乙烯酰胺PAM、氢氧化钠 l 方法/步骤 1.含镍电镀废水介绍含镍电镀废水是指电镀镍时所产生的清洗水,一般分为电镀镍废水和化学镀镍废水,电镀镍废水是指通过电镀把金属镍镀在金属基底上,例如以铜为基底;化学镀镍废水是指通过化学氧化还原的方法把镍镀在基底上,基底多为塑料等非导体。电镀镍废水的成分比较简单,一般多为镍离子以及硫酸根等,化学镀镍废水成分复杂,除了镍离子外,废水中还含有大量的络合剂,比如柠檬酸、酒石酸、次磷酸钠等。 2.含镍电镀废水处理标准在电镀废水处理标准中,国家表一标准要求镍排放标准不高于1mg/L,国家表二标准要求不高于0.5mg/L,国家

表三标准要求不高于0.1mg/L,《电镀废水治理工程规范》中要求含镍废水需要单独收集,并且镍需要处理至标准才能排放至综合池。 3.针对电镀含镍废水以及化学镀镍废水,可采用化学沉淀法进行处理,化学沉淀法不需要复杂的设备。其中,电镀含镍废水可以直接采用加碱至11,PAC混凝,PAM絮凝沉淀出水,镍即可达标,如果含镍废水中混有前处理废水,那么需要在加碱之后的出水加入少量重金属捕集剂重金属捕集剂进行螯合反应,重金属捕集剂重金属捕集剂可以把镍离子从低浓度处理至达标。 对于化学镀镍废水,由于废水中存在大量的络合剂,络合剂与镍离子形成络合小分子溶解于废水中,因此直接加碱不能沉淀,通过加入锌镍合金处理剂进行反应,可以破坏络合健的结构,通过螯合反应与镍离子结合,再通过混凝絮凝沉淀,把镍离子去除。 4.根据含镍电镀废水处理方案,设计相应的含镍废水处理工艺。对于电镀镍废水,采用两步法处理比较划算,即先用氢氧化钠进行沉淀一次以后,再加入重金属捕集剂重金属捕集剂螯合沉淀。 5.对于化学镀镍废水,可以通过一步法直接加锌镍合金处理剂进行螯合沉淀,把镍离子去除。 l 注意事项 l 电镀镍废水与化学镀镍废水,镍的种类不一样,处理方法也不同l 注意在破坏络合剂时,有时也可以采用氧化破络的办法

含铜电镀废水处理技术

含铜电镀废水处理技术 在电镀行业,除了镀件要求的电镀铜之外,镀铜层常作为镀镍、镀锡、镀铬、镀银、镀金的底层,以提高基体金属与表面镀层的结合力和镀层的防腐蚀性能,因此,含铜电镀废水在电镀行业中十分普遍,而该种废水通常含有多种重金属和络合剂,这给铜以及其它金属的去除和回收带来了麻烦,安全而有效地处理含铜混合电镀废水仍是电镀废水处理的一项艰巨任务。 目前,对于含铜电镀废水的处理主要采用化学法、离子交换法、膜分离法、吸附法、生物法等,这些方法也是处理其它重金属废水常用的方法,本文主要介绍在含铜电镀废水中的具体应用。 1 化学法处理含铜电镀废水 1.1中和沉淀法 目前国内常采用化学中和法、混凝沉淀法处理含铜综合电镀废水,在对废水中的酸、碱进行中和的同时,铜离子形成氢氧化铜沉淀,然后再经固液分离装置去除沉淀物。 单一含铜废水在pH值为6.92时,就能使铜离子沉淀去除而达标,一般电镀废水中的铜与铁共存时,控制pH值在8——9,也能使其达到排放标准。然而对既含铜又含其它重金属及络合物的混合电镀废水,铜的去除效果不好,往往达不到排放标准,主要是因为此方法的处理实质是调节废水pH值,而各种金属最佳沉淀的pH值不同,使得去除效果不好;再者如果废水中含有氰、铵等络合离子,与铜离子形成络合物,铜离子不易离解,使得铜离子不能达标排放。特别是对含有氰的含铜混合废水经处理后,铜离子的浓度和CN-的浓度几乎成正比,只要废水中的CN-存在,出水中的铜离子浓度就不会达标。这就使得利用中和沉淀法处理含铜混合废水的出水效果不好,特别是对于铜的去除效果不佳。 1.2硫化物沉淀法

硫化物沉淀法处理重金属废水具有很大的优势,可以解决一些弱络合态重金属不达标的问题,硫化铜的溶解度比氢氧化铜的溶解度低得多,而且反应的pH值范围较宽,硫化物还能沉淀部分铜离子络合物,所以不需要分流处理。然而,由于硫化物沉淀细小,不易沉降,限制了它的应用,另外氰根离子的存在影响硫化物的沉淀,会溶解部分硫化物沉淀。 沉淀法处理电镀废水应用最为广泛,除了以上两种常见的方法之外,很多研究者把研究的重点放到了重金属沉淀剂的开发上。用淀粉黄原酸酯(ISX)处理含铜电镀废水,铜脱除率大于99%。YijiuLi等利用二乙基氨基二硫代甲酸钠(DDTC)作为重金属捕获剂,当DDTC 与铜的质量比为0.8——1.2时,铜的去除率可以达到99.6%,该捕获剂巳经工业应用。重金属沉淀剂的研究将更有利于化学沉淀法的发展。 1.3电化学法 电化学方法处理重金属废水具有高效、可自动控制、污泥量少等优点,且处理含铜电镀废水能直接回收金属铜,处理时对废水含铜浓度的范围适应较广,尤其对浓度较高(铜的质量浓度大于1g/L时)的废水有一定的经济效益,但低浓度时电流效率较低。该方法主要用于硫酸铜镀铜废水等酸性介质的含铜废水,是较为成熟的处理含铜电镀废水的方法之一,国内有商品设备供应。目前,常用的除平板电极电解槽外,还有含非导体颗粒的平板电极电解槽和流化床电解槽等多种形式的电解槽。 近年来的试验研究该方法也能用于氰化铜、焦磷酸镀铜等电镀废水处理。 L.Szpyrkowicz等利用不锈钢电极在pH值为13时直接氧化氰化铜废水,在1.5h内使得含铜废水中铜的质量浓度由470mg/L降到0.25mg/L,回收金属铜335.3mg,同时指出不锈钢电极的表面状态对氧化铜氰化合物具有重要的影响,特别是水力条件对电化学反应器破铜氰络合物的影响,并提出了新的反应器的动力和电流效率的精确数值。研究者又不断地改进

填孔镀铜配方组成,工艺流程及技术研究进展

填孔镀铜配方组成,工艺流程及技术研究进展 摘要:文章简述了填孔电镀的概况、工艺以及填孔工艺中的镀铜添加剂 关键词:电镀;填孔;镀铜添加剂; 1前言 从20世纪60年代开始,PCB行业就采用孔金属化和电镀技术来解决层间连接或导通的问题。实际上过去、现在和将来,在PCB进行电镀铜的最核心问题是解决孔内镀铜连通、镀铜厚度均匀性和填孔镀铜方面等问题。随着PCB行业产品的高密度化和结构多样化的发展,PCB电镀技术有了飞快的进步,但是电镀铜的核心问题仍然没有得到有效的解决。到目前为止,PCB行业电镀经历了常规直流电镀—直接电镀—脉冲电镀—新型直流电镀等过程,核心仍然是围绕着PCB层间“孔”导通的问题。 在孔金属化过程中,采用填铜工艺可以提高电路板层间的导通性能,改善产品的导热性,减少孔内孔洞,降低传输信号的损失,这将有效的提高电子产品的可靠性和稳定性。在填孔镀铜工艺中添加剂组分之间的相互作用直接影响着填铜工艺的顺利进行,因此,了解镀铜添加剂的作用原理对填孔电镀工艺非常重要。 禾川化学是一家专业从事精细化学品以及高分子分析、研发的公司,具有丰富的分析研发经验,经过多年的技术积累,可以运用尖端的科学仪器、完善的标准图谱库、强大原材料库,彻底解决众多化工企业生产研发过程中遇到的难题,利用其八大服务优势,最终实现企业产品性能改进及新产品研发。 样品分析检测流程:样品确认—物理表征前处理—大型仪器分析—工程师

解谱—分析结果验证—后续技术服务。有任何配方技术难题,可即刻联系禾川化学技术团队,我们将为企业提供一站式配方技术解决方案! 2填孔电镀的工艺流程 2.1填孔镀铜的机理 填孔电镀添加剂由三类组份组成:光亮剂(又称加速剂),其作用减小极化,促进铜的沉积、细化晶粒;载运剂(又称抑制剂),增加阴极极化,降低表面张力,协助光亮剂作用;整平剂,抑制高电流密度区域铜的沉积。微盲孔孔底和孔内沉积速率的差异主要来源于添加剂在孔内不同位置吸附分布,其分布形成过程如下: 1、由于整平剂带正电,最易吸附在孔口电位最负的位置,并且其扩散速率较慢因此在孔底位置整平剂浓度较低; 2、加速剂最易在低电流密度区域富集,并且其扩散速率快,因此,孔底加速剂浓度较高; 3、在孔口电位最负,同时对流最强烈,整平剂将逐渐替代抑制剂加强对孔口的抑制,最终使得微孔底部的铜沉积速率大于表面沉积速率,从而达到填孔的效果。 2.2填孔镀铜的过程 填孔镀铜过程主要分为四个时期:起始期、爆发期、回复期、平衡期。 (1)起始期:在此阶段,分散于电镀液中的各添加剂,如抑制剂因分子

含金属电镀废水处理方法

电镀废水的治理在国内外普遍受到重视,目前市面上也有多种治理技术,通过将有毒治理为无毒、有害转化为无害、回收贵重金属、水循环使用等措施消除和减少污染物的排放量。随着电镀工业的快速发展和环保要求的日益提高,电镀污水治理已开始进入清洁生产工艺、总量控制和循环经济整合阶段,资源回收利用和闭路循环是发展的主流方向。 我国处理电镀废水常用的方法有化学法、生物法、物化法和电化学法等。 化学法是依靠氧化还原反应或中和沉淀反应将有毒有害的物质分解为无毒无害的物质,或者直接将重金属经沉淀或气浮从废水中除去,缺点是药剂投加量很大,产生大量固废且增加废水中的盐分。 生物法是一种处理电镀废水的新技术:一些微生物代谢产物能使废水中的重金属离子改变价态,同时微生物菌群本身还有较强的生物絮凝、静电吸附作用,能够吸附金属离子,使重金属经固液分离后进入菌泥饼,从而使得废水达标排放或回用; 化学法主要优势是项目运营成本不高,但是生物成长环境不容易控制,往往会因水质的变化而大量中毒死亡,功能菌和废水中金属离子的反应效率并不高,且培养菌种的培养基消耗量较大,处理成本较高。电解法、原电池法、电渗析法、电凝聚气浮法等电化学方法。 物化法在工业上应用广泛,它利用离子交换或膜分离或吸附剂等方法去除电镀废水所含

的杂质。 当前应用较广泛的吸附剂就是活性炭了,主要用于含铬、含氰废水。它的特点是处理调节温和,操作安全,深度净化的处理水可以回用,但该方法存在活性炭再生复杂和再生液不能直接回镀槽利用的问题,吸附容量小,不适于有害物含量高的废水。为解决这一难题,研究了多种重金属纳米吸附材料,具有吸附容量大,可再生使用,使用寿命长等优点,而且工程化应用时,吸附端可实现模块化设计,能根据生产能力灵活调节,占地节省、结构紧凑,同时具备自动化程度高、工艺流程短、操作简单和能耗低等优点,从而弥补了吸附法的不足,拓宽了吸附法在电镀废水中的应用,已拥有多个电镀行业废水处理案例。 以上就是相关内容的介绍,希望对大家了解这一问题会有更多的帮助,同时如有这方面的兴趣或需求,可以咨询了解一下官网或直接拨打热线询问。

电镀废水处理课程设计说明书

绪论 1设计说明书 1.1工程概况 (1)电镀工艺及废水的产生 电镀是将金属通过电解方法镀到制品表面的过程.电镀是工业上通用性强、使用面广的行业之一。常用的镀种有镀镍、镀铜、镀铬、镀锌、镀镉、镀铅、镀银、镀金和镀锡.无论哪种镀种或镀件,电镀工艺大体上相同。在电镀过程中,除油、酸洗和电镀等操作以后,都要用水清洗电镀废水来源于电镀生产过程中的镀件清洗、镀件过滤、镀件液以及由于操作或管理不善引起的“跑、冒、滴、漏”;另外还有地面冲洗、通风冷凝等。 (2)电镀废水的性质及危害 电镀废水的水质、水量与电镀生产的工艺条件、生产负荷、操作管理与用水方式等因素有关.电镀废水的水质复杂,成分不易控制,其中有毒有害的物质有镉、铬、镍、铅、氰化物、氟化物、铜、锌、锰、碱、酸、悬浮物、石油类物质、含氮化合物、表面活性剂及磷酸盐等。这些废水进入水体,会危及水生动植物生长,影响水产养殖,造成大幅度减产甚至鱼虾绝迹;或是破坏农田土壤,毁坏庄稼,并通过食物链危害人类健康;或是进入饮用水源,在人体内积累,轻者引起慢性中毒,重者导致死亡。 1.2企业简介 东莞市市区污水处理厂位于南城区石鼓村王洲,是东莞市目前采用二级处理、日处理生活污水设计能力20万吨的一家最大的国有污水处理厂。占地面积15.42万平方米,截污主干管总长度为14.77Km,管径为D1400mm至D2600mm;收水范围:莞城区、南城区、万江区南面组团、东城区(牛山片区、桑园、周屋、温塘片区除外)的全部生活污水;服务面积62.95平方公里,服务范围现状人口49.96万人。外管辖新基污水泵站、珊洲河污水泵站两座和管网的维护。两期工程建成,一期采用厌氧—氧化沟工艺(A/O工艺),处理能力为10万吨/日;二期采用缺氧、厌氧—氧化沟工艺(A2/O工艺),处理能力为10万吨/日。经该厂处理后的尾水,由市环保监测站常规抽样检验,水质符合国家《城镇污水处理污染物排放标准》(GB18918-2002)一级B标准。 1.3自然状况 (1)东莞市属亚热带季风气候,长夏无冬,日照充足,雨量充沛,温差振幅小,季风明显。 (2)各地的年日照时数在1288.5~1780.0小时之间,年平均气温在22.7℃~23.6℃之间。(3)各区的总降水量在1547.4~2074.0毫米之间。一年中2~3月份日照最少,7月份日照最多。雨量集中在4~9月份,其中4~6月为前汛期,以锋面低槽降水为多。7~9月为后汛期,台风降水活跃。 (4)东莞市主要河流有、、寒溪水。市境96%属东江流域。 (5)东莞市地质构造上,位于北东东向罗浮山断裂带南部边缘的北东向大断裂南西部、东莞断凹盆地中。地势东南高、西北低。地貌以丘陵台地、冲积平原为主,丘陵台地占44.5%,冲积平原占43.3%,山地占6.2%。东南部多山,尤以东部为最,山体庞大,分割强烈,集中成片,起伏较大,海拔多在200~600米,坡度30℃左右,中南部低山丘陵成片,为丘陵台地区;东北部接近东江河滨,陆地和河谷平原分布其中,海拔30~

电镀废水处理发展趋势

【摘要】本文介绍了电镀废水的各种常用处理技术,及电镀废水处理技术今后的发展趋势。 【关键词】电镀废水;重金属;处理技术 1.概述 电镀是利用电化学的方法对金属和非金属表面进行装饰、防护及获取某些新的性能的一种工艺过程,是许多工业部门不可或缺的工艺环节。据“七五”期间国内47 个城市的初步统计,有电镀厂点5870 个以上。1987 年上海市共有530 多个,全国电镀厂点数估计近万个。这些电镀厂点在生产过程中,不仅产生各种漂洗废水,而且还排出各种废液。废水废液中含有酸、碱、CN 、Cr 6+ 、Cd 2+ 、Cu 2+ 、Ni 2+ 、Pb 2+ 、Hg 2+ 等金属离子和有毒物质,还有苯类、硝基、胺基类等有毒害的有机物,严重危害生物的生存。电镀工艺因其污染严重,于1994 年被我国政府列为25 种限制发展的行业之一。但是从国内外发展现状看,电镀技术是现代化工业不可缺少的组成部分,并没有被其它技术全面取代的趋势,而是在不断开拓新技术、新工艺的同时,着重致力于电镀污染的防治。电镀废水的治理在国内外普遍受到重视,研制出许多治理技术。我国对电镀废水的治理起步较早,60 年代初就已开始,至今将近有50 年的历史。60 年代至70 年代中期电镀废水的处理引起了重视,但仍处于单纯的控制排放阶段。70 年代中期至80 年代初,大多数电镀废水都已有了比较有效的处理,离子交换、薄膜蒸发浓缩等工艺在全国范围内推广使用,反渗透、电渗析等工艺已进入工业化使用阶段,废水中贵重物质的回收和水的回收利用技术也有了很大进展。80 年代至90 年代开始研究从根本上控制污染的技术,综合防治研究取得了可喜的成果。上世纪90 年代至今,电镀废水治理由工艺改革、回收利用和闭路循环进一步向综合防治方向发展,多元化组合处理同自动控制相结合的资源回用技术成为电镀废水治理的发展主流。

填孔电镀技术

填孔电镀Dimple 对高阶高密度互联产品的影响2009-10-22 15:21:56资料来源:PCBcity作者: 陈文德、陈臣 摘要:文章主要介绍填孔电镀的发展与填孔电镀Dimple 对高阶高密度互联产品的影响及其相关检测设备的应用对填孔电镀品质的作用。 关键词:盲孔;填孔电镀;Dimple;Smear;IC;BGA;BallPitch;BallArray; 一、引言: HDI 板市场的迅速发展,主要来自于手机、IC 封装以及笔记本电脑的应用。目前,国内HDI 的主要用途是手机、笔记本电脑和其他数码产品,三者比例为90% 、5% 、5% 。 根据高阶HDI 板件的用途---3G板或IC载板,它的未来增长非常迅速:未来几年全球3G手机增长将超过30% ,我国发放3G 牌照;它代表PCB的技术发展方向,3G手机的高速传输、多功能、高集成,必须具有提供强大的传输运行载体。为解决高速传输、多功能、高集成发展带来的高密度布线与高频传输,开创了盲孔填铜工艺,以增强传输信号的高保真与增大BGA区BallArray 的排列密度(BallPitch 减小)。 二、HDI 高密度的发展趋势: 电子产品的小型化给元器件制造和印制板加工业带来了一系列的挑战:产品越小,元器件集成程度就越大,对于元器件生产商来说,解决办法就是大幅度增加单位面积上的引脚数,IC 元器件封装由QFP 、TCP ( tapecarrier package) 向BGA 、CSP 转变,同时朝向更高阶的FC 发展(其线宽/ 间距达到60nm/ 60 nm )。与之相适应,HDI 线宽/ 间距也由4mil /4mil (100um/ 100um ) 大小变为3 mil /3 mil ( 75um/ 75 um) ,乃至于目前的60um/ 60 um ;其内部结构与加工技术也在不断变化以满足其更薄、更密、更小的要求。 HDI 发展为实现表面BGA 区BallArray 的高密度排列,进而采用了积层的方式来将表面走线引入内层,HDI 孔的加工经历着从简单的盲埋孔板到目前的高阶填孔板,在小孔加工与处理技术上,先后产生了VOP(ViaOn Pad )、StaggerVia 、StepVia 、SkipVia 、StackVia 、ELIC(EveryLayer Interconnection )等设计,以用来解决HDI 中BGA 区域BallArray 的高密度排列,部分设计叠构参见图2。 以下为部分高端HDI 微孔的叠构图:

酸性含铜电镀废水处理

酸性含铜电镀废水处理 葛丽颖, 刘定富, 曾祥钦, 李绍明 (贵州大学化学工程学院,贵州贵阳550003) 中图分类号:X 79 文献标识码:B 文章编号:100024742(2007)022******* 0 前言 据不完全统计,我国电镀厂每年排出的废水约40亿m 3[1] 。电镀废水不仅量大,而且对环境污染也 严重。由于含有自然界不能降解的重金属离子,因此,对电镀废水的处理历来受到各国政府的重视。对电镀废水处理主要有化学沉淀法、电解法、离子交换法和膜处理法[2] 等。化学法是目前国内外应用最广的。化学法处理电镀废水具有技术成熟、投资小、处理成本低、适应性强、管理方便、自动化程度高等诸多优点,加上砂滤能使出水水质澄清,达标排放,不失为既经济又有效的一种方法。本文研究了氢氧化钠中和沉淀酸性含铜电镀废水的影响因素,得到了重金属铜去除率较高的反应条件。 1 实验 1.1 仪器与试剂 721型可见分光光度计(上海欣茂仪器有限公 司),JJ 21型精密电动搅拌器(苏州威尔实验用品有 限公司),PHS 23C 型精密酸度计(上海大普仪器有限公司);氢氧化钠(分析纯),聚丙烯酸钠,聚丙烯酰胺,聚乙烯醇。1.2 实验水样 实验水样取自贵阳市083系统宇光分公司电镀车间酸性含铜废水。1.3 实验方法 移取400m L 实验水样于500m L 烧杯中,加入一定量的氢氧化钠溶液,搅拌一定时间后,加入一定量的絮凝剂,沉降,静置过滤。经分光光度法[3] 检测滤液中铜离子的质量浓度,计算废水中铜离子的去除率。 2 结果与讨论 2.1 pH 值对铜去除效果的影响 pH 值对铜去除效果有最直接的影响。实验水 样中Cu 2+ 的质量浓度为367mg ΠL ,搅拌时间4min , 在室温下进行。配制质量分数为20%的氢氧化钠。 改变氢氧化钠加入量,以调节废水pH 值,试验结果如图1所示。 图1 pH 值与滤液中铜的质量浓度的关系 图1是在实验过程中,用氢氧化钠溶液来调节废水pH 值,测得的pH 值与沉淀过滤后滤液中铜的 质量浓度的关系曲线。 从图1可以看出,刚开始反应时,随着溶液pH 值的增加,其滤液中铜的质量浓度变化较小;当溶液pH =5.6~8.5时,在此段pH 值范围内,滤液中铜的质量浓度变化很大。当溶液pH 值达到8.5左右时,滤液中铜的质量浓度小于1mg ΠL 。随着溶液pH 值的继续增加,当pH >11.3左右时,滤液中铜的质量浓度又逐渐增大,变化较小,同时沉淀颜色由浅蓝色变为深蓝色。当pH >13.5后,滤液中铜随pH 值的 增加继续增大,且变化较大。2.2 搅拌时间对铜去除率的影响 固定水样中的Cu 2+ 的质量浓度为367mg ΠL ,加 入氢氧化钠溶液,控制废水pH 值8.5,改变搅拌时间,结果如图2所示。 图2 搅拌时间对铜去除率的影响 从图2中可看出,氢氧化钠中和沉淀铜离子的 速率较快,在搅拌时间2min 以内,铜去除率高达99.78%。随着搅拌时间的增加,铜去除率随之有所增加,但变化较小。因此,搅拌时间对铜去除率影响不大,考虑到搅拌时间包括加碱及反应时间,故取搅 ?63? Mar.2007 E lectroplating &Pollution Control V ol.27N o.2

电镀污水处理工艺流程及行业介绍

电镀污水处理工艺流程及行业介绍电镀废水处理特点:电镀是利用化学和电化学方法在金属或在其它材料表面镀上各种金属。电镀技术广泛应用于机器制造、轻工、电子等行业。 1、污水特点 电镀是利用化学和电化学方法在金属或在其它材料表面镀上各种金属。电镀技术广泛应用于机器制造、轻工、电子等行业。电镀废水的成分非常复杂,除含氰(CN-)废水和酸碱废水外,重金属废水是电镀业潜在危害性极大的废水类别。根据重金属废水中所含重金属元素进行分类,一般可以分为含铬(Cr)废水、含镍(Ni)废水、含镉(Cd)废水、含铜(Cu)废水、含锌(Zn)废水、含金(Au)废水、含银(Ag)废水等。电镀废水的治理在国内外普遍受到重视,研制出多种治理技术,通过将有毒治理为无毒、有害转化为无害、回收贵重金属、水循环使用等措施消除和减少重金属的排放量。随着电镀工业的快速发展和环保要求的日益提高,目前,电镀废水治理已开始进入清洁生产工艺、总量控制和循环经济整合阶段,资源回收利用和闭路循环是发展的主流方向。 2工艺选择 根据电镀废水水质水量的特点和排放要求,结合目前国内外生活污水处理的应用现状和我司在电镀污水处理工程中的成功经验,综合处理效果、投资费用、运行管理、运行费用、平面布置等各方面的因素,在此选择以化学法为主的组合处理工艺。 3工艺流程及说明 电镀废水经过收集之后,自流入本处理系统,经过处理之后直接排放。

工艺流程如下所示: 含铬废水→含铬废水集水池→耐酸碱泵→还原反应池→混合废水调解池 含氰含碱废水→含氰含碱废水集水池→耐酸碱泵→一级氧化反应池→二级氧化反应池→混合废水调解池 混合废水调解池→耐酸碱泵→混合反应池→沉淀池→中和池→达标排放 4工艺流程说明: 含Cr6+废水从Cr6+集水池用耐酸碱泵提升至还原反应池,根据铬的浓度及废水处理量,通过pH和ORP自控仪控制H2SO4和Na2S2O5的投加量;还原反应完毕后自流进入混合废水调节池同其它废水一起进行进一步处理。含氰含碱污水自车间流入氰系调节池,后用耐酸碱泵提升至一级氧化反应池,根据含氰浓度及废水处理量,通过pH、ORP自控NaOH和NaClO的投加量,搅拌反应一级破氰后进入二级氧化反应池,再通过pH、ORP自控制仪分别控制H2SO4和NaClO的投加量,搅拌反应破氰完毕后自流进入混合废水调节池同其它废水一起进行进一步处理。 混合污水调节池废水用泵提升至快混反应池,加NaOH、PAC药剂,并用pH自控仪控制pH10~11,将金属离子转化成氢氧化物絮状沉淀,再进入慢混池加polymer絮凝剂,增大繁花,沉淀与水自流入综合污泥沉淀池。经沉淀后的上清液自流入中和池,再通过加酸回调,并用pH自控仪控制pH7~8,出水达标排放。综合污泥沉淀池的污泥经污泥浓缩池浓缩后用泵泵入板框压滤机压滤,污泥外运进一步处置,滤液回流至综合污水调节池继续处理。

电镀铜原理概

电镀铜(二) 3.4操作条件的影响 3.4.1温度 温度对镀液性能影响很大,温度提高,会导致允许的电流密度提高,加快电极反应速度,但温度过高,会加快添加剂的分解,使添加剂的消耗增加,同时镀层光亮度降低,镀层结晶粗糙。温度太低,虽然添加剂的消耗降低,但允许电流密度降低,高电流区容易烧焦。一般以20-300C为佳。 3.4.2电流密度 当镀液组成,添加剂,温度,搅拌等因素一定时,镀液所允许的电流密度范围也就一定了,为了提高生产效率,在保证镀层质量的前提下,应尽量使用高的电流密度。电流密度不同,沉积速度也不同。表8-5给出了不同电流密度下的沉积速度(以阴极电流效率100%计)。

表8-5 电流密度与沉积速度 镀液的最佳电流密度一定,但由于印制电板的图形多种多样,难以估计出准确的施镀面积,也就难以得出一个最佳的电流值。问题的症结在于正确测算图形电镀的施镀面积。下面介绍三种测算施镀面积的方法。 1)膜面积积分仪: 此仪器利用待镀印制板图形的生产底版,对光通过与阻挡不同,亦即底版黑色部分不透光,而透明部分光通过,将测得光通量自动转换成面积,再加上孔的面积,即可算出整个板面图形待镀面积。需指出的

是,由于底片上焊盘是实心的,多测了钻孔时钻掉部分的面积,而孔壁面积只能计算,孔壁面积S=πDH,D一孔径,H一板厚,每种孔径的孔壁面积只要算出一个;再乘以孔数即可。此法准确,但价格较贵,在国外已推广使用,国内很多大厂家也在使用。 2)称重计量法: 剪取一小块覆铜箔单面板,测量出一面的总面积,将板子在800C烘干1小时,干燥冷至室温,用天平称取总重量(Wo).在此板上作阴纹保护图形,蚀掉电镀图形部分的铜箔,清洗后按上法烘干称重,得除去电镀图形铜箔后的重量(W1),最后全部蚀刻掉剩余铜箔,清洗后按上法干燥称重,得无铜箔基体的净重(W2),按下式可算出待电镀图形的面积S: S=S0X(W0-W1)/(W0-W2) 式中:S0 覆铜箔板的面积。 (W0-W1) 电镀图形部分铜箔重量。 (W0-W2) 铜箔总重量。

含铜废水处理工艺分析

一、含铜废水的性质 含铜废水中存在的铜离子按照价态有二价态铜离子和一价态铜离子,按存在形式有游离铜(如Cu2+)和络合铜(如铜氰配离子、铜氨络合等)。 在染料、电镀等行业含铜废水中,铜离子往往以络合形态存在,如铜氰配离子。以酸性镀铜废水为例,废水中主要存在Cu2+、H+、Fe2+、Fe3+等阳离子和SO42-、Cl-等阴离子。氰化镀铜漂洗废水中含游离氰根离子300~450mg/L,含一价态铜离子400~550mg/L。 电镀生产过程中产生的含铜废水中的污染物,如硫酸铜、硫酸、焦磷酸铜等,其质量浓度在100mg/L及50mg/L以下;电路板生产过程中产生的含铜废水有含铜蚀刻液与洗涤废水等,其质量浓度在130~150mg/L及20mg/L以下;染料生产含铜废水的质量浓度位1291mg/L;铜矿山含铜废水,其质量浓度在几十至几百毫克每升。 二、含铜废水的处理 1.化学沉淀法 化学沉淀法包括氢氧化物沉淀法和硫化沉淀法。 氢氧化物沉淀法中石灰法使用较广,其机理主要是往废水中添加碱(一般是氢氧化钙),提供废水的pH值,使铜等重金属离子生成难容氢氧化物沉淀,从而降低废水中铜离子含量而达到排放标准。其处理工艺为:重金属酸性废水→沉砂池石灰乳混合反应池→沉淀池→净化水→外排。该法处理后的净化水有较高的pH值及钙硬度,和严重的结垢趋势,需采用合适的水质稳定措施进行阻垢后才能实现回用,而

且不适于处理印刷电路板生产过程中的含铜络合物废水。 硫化沉淀法是利用添加Na2S等能与重金属形成比较稳定的硫化沉淀物的原理,其工艺为:含铜废水→硫化物沉淀处理→中和处理→外排。该法用于常规的中和沉淀法无法处理的铜络合物的废水,但加入了大量的化学药剂,因此存在二次污染。 案例:氢氧化钠中和沉淀酸性含铜电镀废水,当溶液pH值达到8.5左右时,水中Cu2+质量浓度为367mg/L,滤液中铜的质量浓度小于1mg/L。 采用硫化沉淀法,对含Cu2+质量浓度为120mg/L的废水进行处理,处理后废水中Cu2+<0.5mg/L。 2.离子交换法 该法能有效的去除矿山废水中的铜离子,而且具有处理容量大、出水水质好等特点,且占地少、不需对废水进行分类处理,费用相对较低,但存在投资大、对树脂要求高、不便于控制管理等缺点。 工艺为:混合废水→阳离子交换柱→阴离子交换柱→回用及排放。(如果原水pH值过低,应先进行pH调整,废水的Cu2+浓度过高时,应进行除铜预处理,否则树脂再生会过于频繁)。 用于去除废水中Cu2+的离子交换树脂有:Amberlite IRC-718整合树脂、Dowex50x8强酸性阳离子树脂、螯合树脂Dowex XFS-4195、螯合树脂Dowex XFS-41196及国内的“争光”、“强酸1号”和PK208树脂等。 案例:移动车间进出水水质