4 – Medical Informatics

C. William Hanson

Key Points

1. A computer's hardware serves many of the same functions as those of the human nervous system, with a processor acting as the brain and buses acting as conducting pathways, as well as memory and communication devices.

2. The computer's operating system serves as the interface or translator between its hardware and the software programs that run on it, such as the browser, word processor, and e-mail programs.

3. The hospital information system is the network of interfaced subsystems, both hardware and software, that coexist to serve the multiple computing requirements of a hospital or health system, including services such as admissions, discharge, transfer, billing, laboratory, radiology, and others.

4. An electronic health record is a computerized record of patient care.

5. Computerized provider order entry systems are designed to minimize errors, increase patient care efficiency, and provide decision support at the point of entry.

6. Decision support systems can provide providers with best-practice protocols and up-to-date information on diseases or act to automatically intervene in patient care when appropriate.

7. The Health Insurance Portability and Accountability Act is a comprehensive piece of legislation designed in part to enhance the privacy and security of computerized patient information.

8. Providers are increasingly able to care for patients at a distance via the Internet, and telemedicine will continue to grow as the technology improves, reimbursement becomes available, and legislation evolves.

Computer Hardware

Central Processing Unit

The central processing unit (CPU) is the “brain” of a modern computer. It sits on the motherboard, which is the computer's skeleton and nervous system, and communicates with the rest of the computer and the world through a variety of “peripherals.” Information travels through the computer on “buses,” which are the computer's information highways or “nerves,” in the form of “bits.” Bits are aggregated into meaningful information in much the same way that dots and dashes are combined into letters in Morse code. Bits are the building blocks for both the instructions, or programs, and the data, or files, with which the computer works.
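The aggregation of bits into meaningful information can be illustrated with a minimal sketch. It assumes plain ASCII text and eight bits per character; the function names `text_to_bits` and `bits_to_text` are hypothetical and chosen only for this illustration.

```python
def text_to_bits(text: str) -> str:
    """Encode ASCII text as groups of eight bits, one group per character."""
    return " ".join(format(byte, "08b") for byte in text.encode("ascii"))


def bits_to_text(bits: str) -> str:
    """Reassemble space-separated groups of eight bits into ASCII text."""
    return bytes(int(group, 2) for group in bits.split()).decode("ascii")


encoded = text_to_bits("OR")
print(encoded)                # 01001111 01010010
print(bits_to_text(encoded))  # OR
```

Each letter becomes a fixed pattern of eight bits, much as each letter in Morse code becomes a fixed pattern of dots and dashes.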

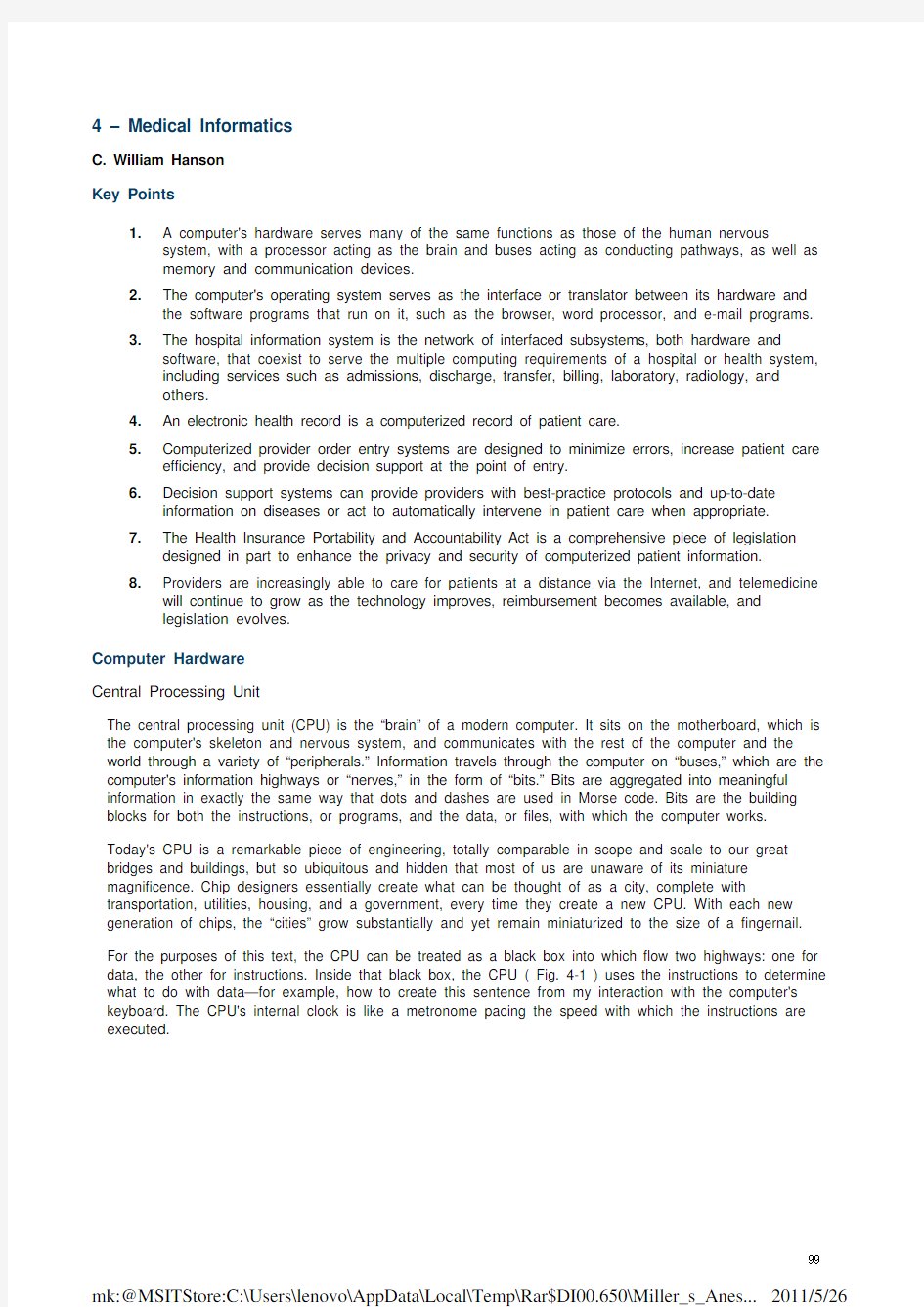

Today's CPU is a remarkable piece of engineering, comparable in scope and scale to our great bridges and buildings, but so ubiquitous and hidden that most of us are unaware of its miniature magnificence. Chip designers essentially create what can be thought of as a city, complete with transportation, utilities, housing, and a government, every time they create a new CPU. With each new generation of chips, the “cities” grow substantially and yet remain miniaturized to the size of a fingernail. For the purposes of this text, the CPU can be treated as a black box into which flow two highways: one for data, the other for instructions. Inside that black box, the CPU (Fig. 4-1) uses the instructions to determine what to do with data; for example, how to create this sentence from my interaction with the computer's keyboard. The CPU's internal clock is like a metronome pacing the speed with which the instructions are executed.

Figure 4-1 Programs and data are stored side by side in memory in the form of single data bits—the program tells the central processing unit (CPU) what to do with the data. RAM, random-access memory.

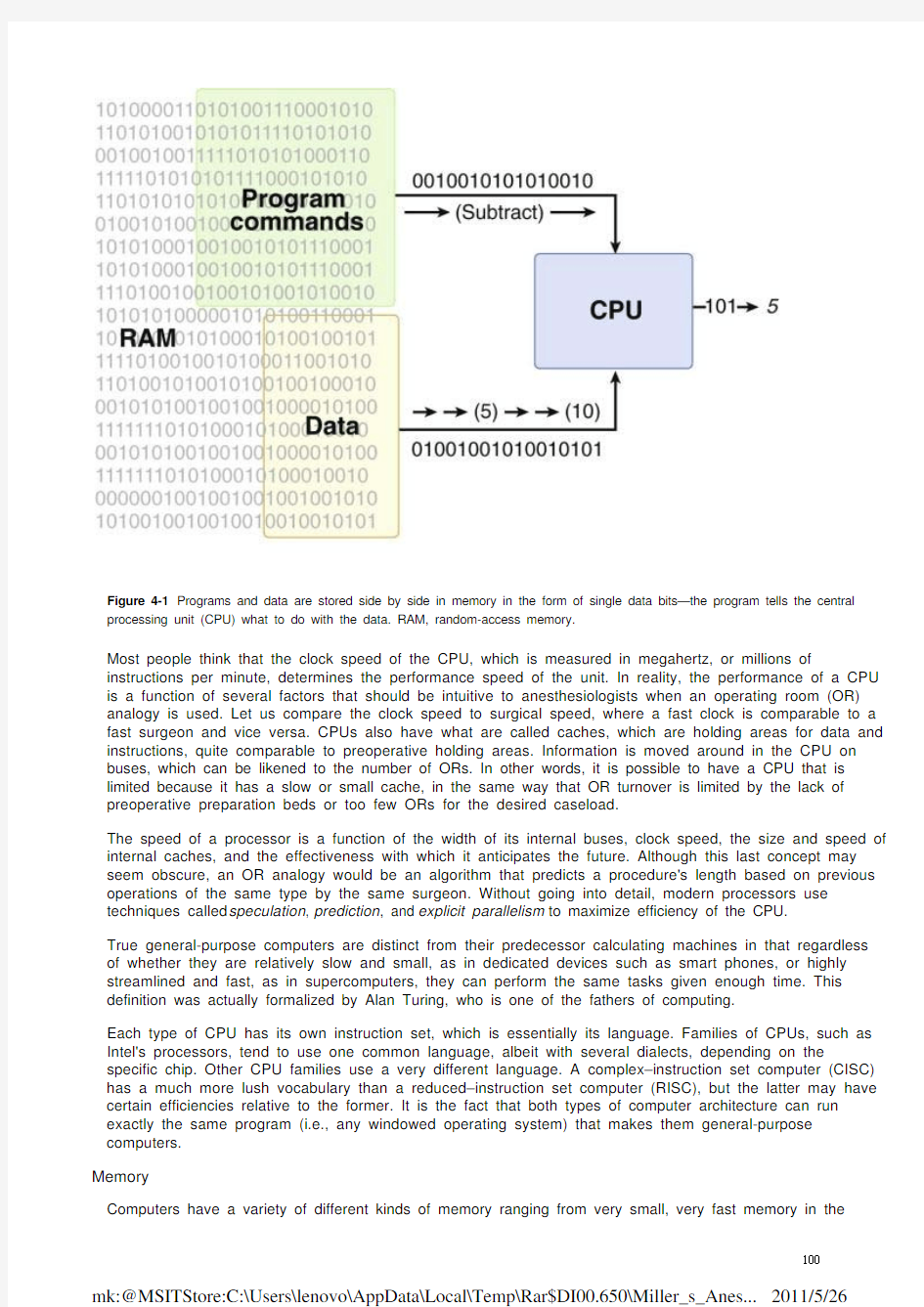
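The arrangement in Figure 4-1, with program and data held side by side in one memory, can be sketched as a toy machine. The four-instruction set used here (`LOAD`, `ADD`, `STORE`, `HALT`) and the `run` function are hypothetical and far simpler than any real CPU; they are meant only to show instructions telling the processor what to do with data.

```python
def run(memory: list) -> list:
    """Fetch and execute instructions from a memory that also holds the data."""
    accumulator = 0       # the CPU's single working register
    program_counter = 0   # address of the next instruction to fetch
    while True:
        operation, address = memory[program_counter]  # fetch
        program_counter += 1
        if operation == "LOAD":       # copy a data cell into the register
            accumulator = memory[address]
        elif operation == "ADD":      # add a data cell to the register
            accumulator += memory[address]
        elif operation == "STORE":    # copy the register back into memory
            memory[address] = accumulator
        elif operation == "HALT":
            return memory
        else:
            raise ValueError(f"unknown instruction: {operation}")


memory = [
    ("LOAD", 4),   # address 0: program
    ("ADD", 5),    # address 1: program
    ("STORE", 6),  # address 2: program
    ("HALT", 0),   # address 3: program
    2,             # address 4: data
    3,             # address 5: data
    0,             # address 6: data (result is written here)
]
run(memory)
print(memory[6])  # 5
```

Addresses 0 through 3 hold the program and addresses 4 through 6 hold the data, yet both live in the same list, which is the point of the figure.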

Most people think that the clock speed of the CPU, which is measured in megahertz (millions of clock cycles per second), by itself determines the performance of the unit. In reality, the performance of a CPU is a function of several factors that should be intuitive to anesthesiologists when an operating room (OR) analogy is used. Let us compare the clock speed to surgical speed, where a fast clock is comparable to a fast surgeon and vice versa. CPUs also have what are called caches, which are holding areas for data and instructions, quite comparable to preoperative holding areas. Information is moved around in the CPU on buses, the width of which can be likened to the number of ORs. In other words, it is possible to have a CPU that is limited because it has a slow or small cache or narrow buses, in the same way that OR turnover is limited by the lack of preoperative preparation beds or too few ORs for the desired caseload.

The speed of a processor is a function of the width of its internal buses, clock speed, the size and speed of internal caches, and the effectiveness with which it anticipates the future. Although this last concept may seem obscure, an OR analogy would be an algorithm that predicts a procedure's length based on previous operations of the same type by the same surgeon. Without going into detail, modern processors use techniques called speculation, prediction, and explicit parallelism to maximize efficiency of the CPU.
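To make the idea of prediction less obscure, the following sketch shows one classic textbook scheme, a two-bit saturating counter, which guesses whether a program branch will be taken based on its recent history. This is an illustrative assumption and not a description of any particular processor; the class and function names are hypothetical.

```python
class TwoBitPredictor:
    """Counter states 0-1 predict 'not taken'; states 2-3 predict 'taken'."""

    def __init__(self) -> None:
        self.state = 1  # start at 'weakly not taken'

    def predict(self) -> bool:
        return self.state >= 2

    def update(self, taken: bool) -> None:
        # Move one step toward the observed outcome, saturating at 0 and 3.
        if taken:
            self.state = min(3, self.state + 1)
        else:
            self.state = max(0, self.state - 1)


def accuracy(outcomes: list) -> float:
    """Fraction of branch outcomes that the predictor guessed correctly."""
    predictor = TwoBitPredictor()
    correct = 0
    for taken in outcomes:
        if predictor.predict() == taken:
            correct += 1
        predictor.update(taken)
    return correct / len(outcomes)


# A loop branch that is taken nine times and then falls through once.
print(accuracy([True] * 9 + [False]))  # 0.8
```

As with a scheduler that learns a surgeon's typical case length, the predictor is wrong at first and again when the pattern changes, but it is right for most of the run.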

True general-purpose computers are distinct from their predecessor calculating machines in that regardless of whether they are relatively slow and small, as in dedicated devices such as smart phones, or highly streamlined and fast, as in supercomputers, they can perform the same tasks given enough time. This definition was actually formalized by Alan Turing, who is one of the fathers of computing.

Each type of CPU has its own instruction set, which is essentially its language. Families of CPUs, such as Intel's processors, tend to use one common language, albeit with several dialects, depending on the specific chip. Other CPU families use a very different language. A complex–instruction set computer (CISC) has a much richer vocabulary than a reduced–instruction set computer (RISC), but the latter may have certain efficiencies relative to the former. It is the fact that both types of computer architecture can run exactly the same program (e.g., any windowed operating system) that makes them general-purpose computers.

Memory

Computers have a variety of different kinds of memory ranging from very small, very fast memory in the CPU to much slower, typically much larger memory storage sites that may be fixed (hard disk) or removable (compact disk, flash drive).

Ideally, we would like to have an infinite amount of extremely fast memory immediately available to the CPU, just as we would like to have all of the OR patients for a given day waiting in the holding area ready to roll into the OR as soon as the previous case is completed. Unfortunately, this would be infinitely expensive. The issue of ready data availability is particularly important now, as opposed to a decade ago, because improvements in central processing speed have outpaced gains in memory speed such that the CPU can sit idle for extended periods while it waits for a desired chunk of data from memory.
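The cost of waiting on memory can be estimated with a simple average-access-time calculation. The sketch below assumes a single cache in front of main memory, and the timings (1 ns for the cache, 100 ns for memory) are hypothetical round numbers rather than measurements of any real system.

```python
def average_access_time_ns(hit_rate: float, cache_ns: float, memory_ns: float) -> float:
    """Average time per access with a fast cache in front of slower memory.

    Every access pays for the cache lookup; a miss also pays for the trip
    to main memory.
    """
    if not 0.0 <= hit_rate <= 1.0:
        raise ValueError("hit_rate must be between 0 and 1")
    return cache_ns + (1.0 - hit_rate) * memory_ns


# Hypothetical timings: 1 ns cache lookup, 100 ns main memory access.
for hit_rate in (0.50, 0.90, 0.99):
    average = average_access_time_ns(hit_rate, cache_ns=1.0, memory_ns=100.0)
    print(f"hit rate {hit_rate:.2f}: about {average:.0f} ns per access")
```

Under these assumptions, even a small fraction of accesses that must go all the way to main memory dominates the average, which is why the CPU can spend much of its time idle.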

Computer designers have come up with an approach that ensures a high likelihood that the desired data will be close by. This necessitates the storage of redundant copies of the same data in multiple locations at the same time. For example, the sentence I am currently editing in a document might be stored in very fast memory next to the CPU, whereas a version of the complete document, including an older copy of the same sentence, could be stored in slower, larger-capacity memory (Fig. 4-2). At the conclusion of an editing session, the two versions are reconciled and the newer sentence is inserted into the document.

Figure 4-2 Processing of text editing using several “memory” caches in which duplicate copies of the same text may be kept nearby for ready access.
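The text-editing example can be sketched as a small "write-back" cache, in which edits are made to a fast nearby copy and reconciled with the slower store later. The class below is a hypothetical illustration using Python dictionaries to stand in for the two kinds of memory; real caches are implemented in hardware and are considerably more involved.

```python
class WriteBackCache:
    """A small fast copy of data in front of a larger, slower store."""

    def __init__(self, backing_store: dict) -> None:
        self.backing_store = backing_store  # slow, large (the full document)
        self.cache = {}                     # fast, small (next to the CPU)
        self.dirty = set()                  # keys edited but not yet reconciled

    def read(self, key: str) -> str:
        if key not in self.cache:           # miss: fetch a copy from the slow store
            self.cache[key] = self.backing_store[key]
        return self.cache[key]

    def write(self, key: str, value: str) -> None:
        self.cache[key] = value             # only the fast copy changes for now
        self.dirty.add(key)

    def flush(self) -> None:
        """Reconcile: copy every edited entry back to the slow store."""
        for key in self.dirty:
            self.backing_store[key] = self.cache[key]
        self.dirty.clear()


document = {"sentence_1": "The CPU is the brain."}
cache = WriteBackCache(document)
cache.write("sentence_1", "The CPU is the brain of a modern computer.")
print(document["sentence_1"])  # still the older copy
cache.flush()
print(document["sentence_1"])  # now the newer sentence
```

Between the edit and the flush, two versions of the same sentence exist at once, which is the redundancy shown in Figure 4-2.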

The very fast memory adjacent to the CPU is referred to as cache memory, and it comes in different sizes and speeds. Cache memory is analogous to the preoperative and postoperative holding areas in an OR in that both represent rapidly accessible buffer space. Modern computer architectures have primary and