Image Appearance Modeling and High-Dynamic-Range Image Rendering

Mark D. Fairchild, Garrett M. Johnson, Jiangtao Kuang, Hiroshi Yamaguchi

Munsell Color Science Laboratory, Rochester Institute of Technology

Figure 1: An example of an HDR digital image of the RIT color cube rendered using the iCAM image appearance model to simulate human perception of the scene and (inset) rendered linearly.

Abstract

Recently, the field of color appearance modeling has been extended further into the spatial and temporal domains through efforts known as image appearance modeling. Image appearance models have applications in rendering and visualization of image data, cross-media image and video reproduction, and image quality specification. This paper provides an overview of one such model, iCAM, illustrates several examples of its application, and reviews a psychophysical experiment aimed at evaluating iCAM and other algorithms for tone mapping of high-dynamic-range (HDR) images.

CR Categories: I.3.3 [Picture/Image Generation]: Display Algorithms; I.3.3 [Picture/Image Generation]: Viewing Algorithms

Keywords: HDR imaging, image reproduction, tone reproduction, image appearance, image quality, psychophysics

e-mail: {mdf, garrett, jxk4031, hxypci}@…

This MCSL technical report is the full version of an abstract presented at the ACM-SIGGRAPH First Symposium on Applied Perception in Graphics and Visualization, 2004.

1 Introduction

The concept of image appearance modeling as a logical extension of traditional approaches to color appearance modeling and image quality assessment has recently been introduced and developed [Fairchild and Johnson, 2002; 2003; 2004]. The approach has been to combine the point-wise color processing inherent in color appearance models such as CIECAM02 [see Fairchild, 2004], which account for the influences of absolute luminance level, spectral power distribution, metamerism, and chromatic adaptation, with models of the spatial and temporal properties of the visual system that are often used in image quality metrics and compression schemes. The result is a unified approach to image color appearance, image difference specification, and image quality metrics with a wide range of applications.

Applications of image appearance modeling include cross-media color reproduction, image/video difference measurement, image/video quality prediction, optimization of imaging/compression algorithms, exploration of human visual performance, image gamut mapping, and, perhaps of most interest in computer graphics and visualization, the rendering of high-dynamic-range (HDR) images for display on devices with limited dynamic range. HDR image rendering has been a topic of significant and growing interest in the computer graphics and visualization community, since many global-illumination rendering systems are designed to simulate the full dynamic range of real scenes, and these rendered images must often be combined with other media or displayed and printed on devices with limited dynamic range. More recently, HDR rendering has also become of significant interest in digital photography, as even consumer digital cameras with extended-dynamic-range sensors are becoming commercially available.

This paper briefly reviews the concept of image appearance modeling, introduces one example of an image appearance model, describes its capabilities, and presents the results of a recent psychophysical experiment in which a number of HDR rendering algorithms were quantitatively compared and evaluated for preferred image reproduction.

2 Image Appearance Modeling

Image appearance models represent an extension beyond, and combination of, traditional color appearance models, image and video difference or quality metrics, and tone reproduction operators. In many ways, an image appearance model attempts to combine all of these functions into a single mathematical construct that can succeed in all of these applications because it follows the function of the human visual system. The ultimate goal of an image appearance model is to predict the appearance of each pixel in a still or moving image (or location in a real scene) within its natural viewing environment. This is a challenging goal, and research in many disciplines will be required over the coming decades to succeed fully, or even nearly. The various applications of such models are a convenient byproduct of the goal of predicting image appearance.

For example, rendering HDR images or scenes onto limited-dynamic-range displays is a natural result of image appearance modeling, since the human visual system itself has a limited dynamic range due to stray light in the eye and neuronal limitations. Thus, if an image appearance model can predict the appearance of each element of an HDR scene, those appearances can then be reproduced as accurately as possible on a display device (printed images can be considered image displays in the general sense).

The key to developing an image appearance model is to follow the performance of the human visual system. There are two general approaches to modeling the human visual system. One is to model human physiology closely. This approach is very successful at predicting specific psychophysical results, such as thresholds or color matches; however, it often falls short in predicting higher-level perceptions, since the ultimate integrating processes in the human brain are not well understood and are extremely difficult to model at the physiological level. The second approach is to treat the visual system as a black box and model its input (stimulus) and output (perceptual scale) with some form of empirical model. The CIELAB color space could be considered an example of such an empirical model. The most successful approaches are often a combination of the two, in which empirical models are designed with some basis in physiological and psychophysical performance.

Color appearance models use the spectral power distributions of stimuli as input and then, through basic colorimetry, account for metamerism. On top of colorimetry, empirical models of chromatic adaptation, luminance adaptation, and simple surround effects are used to predict scales of perceived color attributes (brightness, lightness, colorfulness, chroma, saturation, and hue). These models generally operate on a single color element at a time. Image quality models generally neglect color appearance issues and address spatial vision through spatial contrast sensitivity functions and, sometimes, models of spatial frequency adaptation and masking. Generally, spatial image quality models focus on threshold specification rather than suprathreshold scaling. Video models extend image quality models to the temporal domain by adding temporal contrast sensitivity functions, but normally neglect temporal adaptation and color appearance issues. Image appearance models [Fairchild, 2004; Fairchild and Johnson, 2004] combine all of these approaches.

3 The iCAM Model

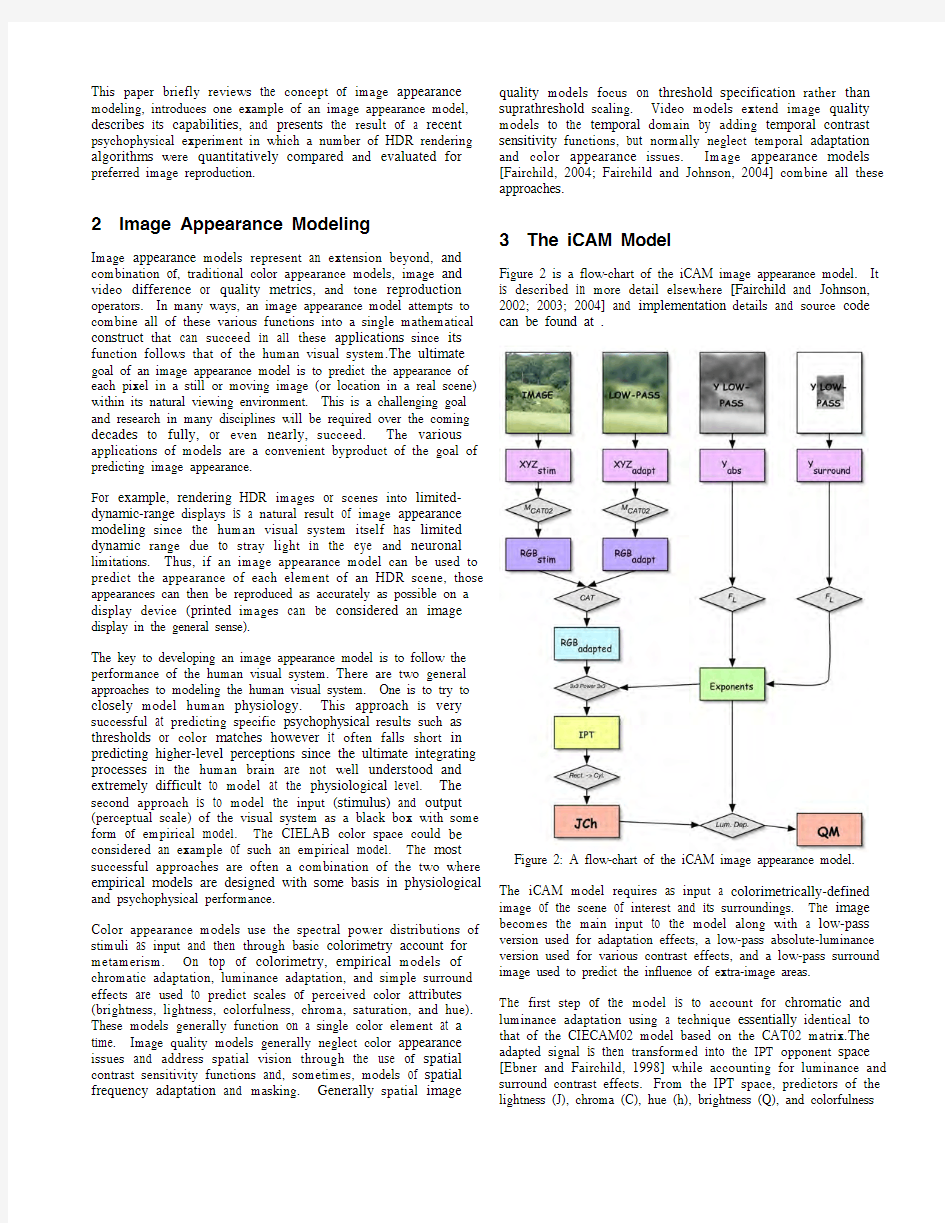

Figure 2 is a flow chart of the iCAM image appearance model. The model is described in more detail elsewhere [Fairchild and Johnson, 2002; 2003; 2004], and implementation details and source code can be found at

Figure 2: A flow-chart of the iCAM image appearance model. The iCAM model requires as input a colorimetrically-defined image of the scene of interest and its surroundings. The image becomes the main input to the model along with a low-pass version used for adaptation effects, a low-pass absolute-luminance version used for various contrast effects, and a low-pass surround image used to predict the influence of extra-image areas.

The first step of the model is to account for chromatic and luminance adaptation using a technique essentially identical to that of the CIECAM02 model, based on the CAT02 matrix. The adapted signal is then transformed into the IPT opponent space [Ebner and Fairchild, 1998] while accounting for luminance and surround contrast effects. From the IPT space, predictors of the lightness (J), chroma (C), hue (h), brightness (Q), and colorfulness (M) of each scene element are derived. To render an HDR image, the JCh components are reproduced by inverting the adaptation model for the single fixed viewing condition of the display. To predict image quality, spatial filters are introduced into the IPT transformation. To account for temporal adaptation and contrast sensitivity in video applications, integrating functions and temporal filters are introduced into the adapting and IPT representations, respectively. Details on these applications can be found in Fairchild and Johnson [2003, 2004].
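The forward path just described can be sketched per pixel as follows. This is a minimal sketch, not the published iCAM implementation. It assumes the standard CAT02 and IPT matrices, a CIECAM02-style degree of adaptation D for an average surround, adaptation toward a D65 reference white (so that the D65-based IPT matrices apply), an IPT exponent of 0.43 modulated by the CIECAM02 factor F_L, and the correlates J = I, C = sqrt(P² + T²), h = atan2(T, P), with Q and M obtained by scaling J and C by F_L^(1/4). These formulas reflect our reading of the iCAM literature and should be treated as assumptions. The low-pass images are taken as given inputs, and the surround image and spatial filters are omitted.

```python
import numpy as np

# CAT02 matrix (XYZ -> sharpened cone-like RGB), as used in CIECAM02.
M_CAT02 = np.array([[ 0.7328, 0.4296, -0.1624],
                    [-0.7036, 1.6975,  0.0061],
                    [ 0.0030, 0.0136,  0.9834]])
# IPT matrices [Ebner and Fairchild, 1998]: XYZ (D65) -> LMS, and LMS' -> IPT.
M_XYZ_LMS = np.array([[ 0.4002, 0.7075, -0.0807],
                      [-0.2280, 1.1500,  0.0612],
                      [ 0.0000, 0.0000,  0.9184]])
M_LMS_IPT = np.array([[0.4000,  0.4000,  0.2000],
                      [4.4550, -4.8510,  0.3960],
                      [0.8056,  0.3572, -1.1628]])
D65 = np.array([95.047, 100.0, 108.883])  # reference white with Y = 100


def luminance_factor(la):
    """CIECAM02 luminance-level adaptation factor F_L for adapting luminance la (cd/m^2)."""
    k = 1.0 / (5.0 * la + 1.0)
    return 0.2 * k**4 * (5.0 * la) + 0.1 * (1.0 - k**4) ** 2 * np.cbrt(5.0 * la)


def icam_forward(xyz, xyz_white, la):
    """Per-pixel iCAM-style sketch (assumed formulas, see lead-in).

    xyz       -- (N, 3) relative XYZ of the image (white has Y = 100)
    xyz_white -- (N, 3) low-pass image used as the local adapting white
    la        -- (N,)   low-pass absolute luminance image in cd/m^2
    Returns lightness J, chroma C, hue h (degrees), brightness Q, colorfulness M.
    """
    xyz, xyz_white, la = (np.asarray(a, dtype=float) for a in (xyz, xyz_white, la))
    # Degree of adaptation D for an average surround (F = 1), as in CIECAM02.
    d = (1.0 - np.exp((-la - 42.0) / 92.0) / 3.6)[..., None]
    # von Kries-type adaptation in CAT02 space, mapped to a D65 reference white.
    rgb = xyz @ M_CAT02.T
    rgb_w = xyz_white @ M_CAT02.T
    rgb_c = (d * (M_CAT02 @ D65) / rgb_w + (1.0 - d)) * rgb
    xyz_adapted = rgb_c @ np.linalg.inv(M_CAT02).T
    # IPT transform; the 0.43 exponent is modulated by F_L of the low-pass luminance.
    lms = (xyz_adapted / 100.0) @ M_XYZ_LMS.T
    fl = luminance_factor(la)[..., None]
    lms_p = np.sign(lms) * np.abs(lms) ** (0.43 * fl)
    i, p, t = np.moveaxis(lms_p @ M_LMS_IPT.T, -1, 0)
    # Appearance correlates derived from IPT.
    j = i
    c = np.hypot(p, t)
    h = np.degrees(np.arctan2(t, p)) % 360.0
    fl4 = fl[..., 0] ** 0.25
    return j, c, h, fl4 * j, fl4 * c
```

As a sanity check, a D65-white pixel adapted to a D65 white comes out with I close to 1 and chroma close to 0, while a tungsten-like pixel has less chroma when the model adapts to it than when it is viewed under D65 adaptation.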

4 HDR Rendering

The right-hand column of Fig. 3 includes several examples of HDR images rendered using the iCAM model with a single default set of parameters, as described by Johnson and Fairchild [2003]. To the degree that the model accurately represents human visual performance (and calibrated image data are available), these renderings should appear similar to what an observer would experience when viewing the original scene (if it existed). The images are from a variety of publicly available image sets, as described in detail by Kuang et al. [2004], and referenced at

To begin comparing the performance of various HDR rendering algorithms, with the ultimate goals of finding the most preferred algorithm for image reproduction and the most accurate one for vision modeling, Kuang et al. [2004] undertook an extensive psychophysical experiment. The experiment and a summary of its results are described below.

Ten HDR images, including one synthetic image, were used in the experiment, as illustrated in Fig. 3. Image content was selected to span the two-dimensional space defined by dynamic range and mean luminance. Both color and black-and-white versions of the images were evaluated to compare the algorithms' tone reproduction and color reproduction properties. Eight different HDR tone-rendering algorithms were included in the experiment: bilateral filtering [Durand and Dorsey, 2002], a photographic zone-system technique [Reinhard et al., 2002], a visibility-matching technique [Ward Larson et al., 1997], the iCAM model described in this paper, sigmoidal mapping [Braun and Fairchild, 1999], a recent retinex algorithm [Funt et al., 2000], a local non-linear masking procedure [Moroney, 2000], and a gradient domain technique [Fattal et al., 2002]. The techniques were chosen because they were likely to produce the best results based on initial evaluations, because they are often referenced, or because they build upon and improve earlier efforts. Figures 4 and 5 illustrate one set of examples each of color [image from

The experiment was carried out in a darkened room with images displayed on an Apple Cinema HD 23” LCD display that was carefully calibrated and characterized for accurate colorimetry. The white-point luminance of the display was 180 cd/m² at a chromaticity approximating CIE illuminant D65. The display area of 1920 × 1200 pixels allowed images to be viewed in pairs, each with a long dimension of approximately 800 pixels. Images were presented on a gray background with a luminance of 20% of the white point. Every possible pair of renderings was evaluated for each image (28 pairs × 10 images = 280 pairs). Observers were asked to choose the preferred image in each of the 280 pairs, and the entire experiment was carried out once in color and once in black-and-white (two 30-minute sessions).

Figure 3 (in two parts): Ten HDR images used in the psychophysical experiments as rendered by the Durand and Dorsey [2002] algorithm (left column) and by iCAM (right column).

Thirty-three observers completed the color experiment and 23 completed the black-and-white experiment. All had normal color vision and various degrees of image reproduction experience. The paired-comparison results were analyzed using Thurstone’s Law of Comparative Judgements, Case V, to produce interval scales of preferred tone reproduction quality along with 95% confidence intervals on each scale value.
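A minimal sketch of Thurstone Case V scaling from paired-comparison counts is shown below. It assumes a matrix of win counts (how often each rendering was preferred over each other rendering), converts each proportion to a z-score with the inverse standard normal CDF, and averages the z-scores per rendering. Unanimous proportions of 0 or 1 are clipped to avoid infinite z-scores; the clip value of 0.01 is an assumption, and the 95% confidence intervals reported in the paper are not computed here.

```python
from statistics import NormalDist


def thurstone_case_v(wins, clip=0.01):
    """wins[i][j] = number of times stimulus i was preferred over stimulus j.

    Returns one interval-scale value per stimulus (row mean of z-scores).
    Because the z matrix is antisymmetric, the values sum to zero.
    """
    n = len(wins)
    inv = NormalDist().inv_cdf
    scale = []
    for i in range(n):
        zs = []
        for j in range(n):
            if i == j:
                zs.append(0.0)  # a stimulus compared with itself: p = 0.5, z = 0
                continue
            total = wins[i][j] + wins[j][i]
            p = wins[i][j] / total if total else 0.5
            # Unanimous judgements give p = 0 or 1 (z = +/- infinity); clip them.
            p = min(max(p, clip), 1.0 - clip)
            zs.append(inv(p))
        scale.append(sum(zs) / n)
    return scale
```

For the experiment described here, `wins` for one image would be an 8 × 8 matrix summarizing the 28 pairs judged by all observers; the example counts below for three stimuli are invented for illustration.

```python
print(thurstone_case_v([[0, 25, 30], [8, 0, 20], [3, 13, 0]]))
```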

Overall, and averaged across image content, the psychophysical results illustrated in Figs. 6 and 7 show that the Durand and Dorsey [2002] algorithm produced the most preferred images. For this reason, the 10 images rendered using the Durand and Dorsey algorithm are presented in the left-hand column of Fig. 3 for comparison with the iCAM renderings. The iCAM results were quite good, tying for third place in rendering preference with the Ward Larson et al. [1997] algorithm, behind the Reinhard et al. [2002] and Durand and Dorsey [2002] algorithms.

Figure 4: One image from the experiment as rendered by each of the 8 algorithms tested. The algorithms are (a) Durand and Dorsey, (b) Reinhard, (c) Ward Larson, (d) iCAM, (e) Sigmoidal, (f) Retinex, (g) Moroney, and (h) Fattal.

It is of interest to note that the iCAM results are similar to the Durand and Dorsey [2002] results in terms of tone reproduction, but less colorful and slightly lower in contrast overall. It is well established that observers prefer more colorful and contrasty images, up to the point where they become unnatural, and that preferred images are generally more colorful and contrasty than accurate color reproductions. Since iCAM aims to produce visually accurate results, it is possible that the iCAM images are more visually accurate renditions of the original scenes while at the same time being less preferred. This duality between preference and accuracy is well established in image reproduction, particularly in consumer imaging systems. The fact that the Durand and Dorsey [2002] algorithm also performed best for preference with black-and-white images shows that the difference from iCAM is not only in colorfulness: a significant perceptual difference in tone reproduction is also present.

The results are essentially the same for the color and black-and-white experiments, indicating that color reproduction issues do not separate the performance of these particular algorithms. The linear correlation coefficient between the results of the two experiments is 0.98. Future experiments will examine the visual accuracy of these algorithms (and perhaps others) through direct comparison with HDR scenes, to answer the open questions about the difference between preference and accuracy.

Figure 5: One image from the experiment as rendered by each of the 8 algorithms tested. The algorithms are (a) Durand and Dorsey, (b) Reinhard, (c) Ward Larson, (d) iCAM, (e) Sigmoidal, (f) Retinex, (g) Moroney, and (h) Fattal.

[Figure 6 plot: interval scores (vertical axis, -1.5 to 1.5) for the HDR rendering algorithms (horizontal axis, in plotted order: Durand & Dorsey, Reinhard, Ward Larson, iCAM, Sigmoidal, Retinex, Moroney, Fattal), with error bars showing 95% confidence intervals.]

Figure 6: Overall visual preference scaling results, averaged across image content, of the eight algorithms for color images.

[Figure 7 plot: interval scores (vertical axis, -1.5 to 1.5) for the HDR rendering algorithms (horizontal axis, in plotted order: Durand & Dorsey, Reinhard, iCAM, Ward Larson, Sigmoidal, Retinex, Moroney, Fattal), with error bars showing 95% confidence intervals.]

Figure 7: Overall visual preference scaling results, averaged across image content, of the eight algorithms for black-and-white images.

Further details of the psychophysical experiment and analyses of the results can be found in Kuang et al. [2004]. It is important to note that there were significant image dependencies in the results; only the average results (across image content) are presented in this paper. For example, the Fattal et al. [2002] algorithm performs better on the image in Fig. 4 (one used in the initial algorithm testing and development) than it does on average. Note also that the Durand and Dorsey [2002] algorithm does not perform best for all images, but it is always among the top three or four algorithms. While no single algorithm is optimal for all images, the average results do provide a measure of the consistency of their relative performance.

5 Image Quality

The iCAM model, or similar models, can be used as the basis for an image quality metric through the formulation of an image difference specification. Just such an image difference metric, referred to as ΔIm (for image difference), has been developed and tested [Fairchild and Johnson, 2004]. The essence of this metric is to compare two images (e.g., original and reproduction) after spatial filtering to remove spatial frequencies and contrasts that are imperceptible. The comparison results in image difference maps along the dimensions of lightness, chroma, and hue that can be combined into a single overall image difference map, much as a traditional color difference, ΔE*ab, is computed in the CIELAB color space.
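The two steps described here, spatial filtering followed by a ΔE-like combination, can be sketched as follows. The sketch is illustrative only and filters a single channel. It assumes a low-pass variant of a luminance contrast sensitivity function of the form a·f^c·e^(-bf): the CSF is normalized to 1 at its peak frequency c/b and held at 1 below it, so the mean level and coarse structure pass unchanged while fine, imperceptible detail is attenuated. The constants b = 0.2 and c = 0.8 and the `pixels_per_degree` viewing parameter are assumptions, not values given in this paper; see Fairchild and Johnson [2004] for the filters actually used. Because ΔH² = ΔP² + ΔT² - ΔC², combining ΔJ, ΔC, and ΔH Euclidean-style equals the Euclidean distance in IPT when lightness J is taken as I, which is what `delta_im_map` computes.

```python
import numpy as np


def csf_filter(channel, pixels_per_degree, b=0.2, c=0.8):
    """Filter a 2-D channel with a low-pass variant of the CSF a * f**c * exp(-b * f).

    f is spatial frequency in cycles/degree; the CSF is normalized to 1 at its
    peak (f = c / b) and set to 1 below the peak, so only fine detail is removed.
    """
    h, w = channel.shape
    fy = np.fft.fftfreq(h) * pixels_per_degree  # cycles/pixel -> cycles/degree
    fx = np.fft.fftfreq(w) * pixels_per_degree
    f = np.hypot(*np.meshgrid(fy, fx, indexing="ij"))
    f_peak = c / b  # frequency at which a * f**c * exp(-b * f) is maximal
    csf = (f / f_peak) ** c * np.exp(-b * (f - f_peak))
    csf[f < f_peak] = 1.0  # pass DC and low frequencies unchanged
    return np.real(np.fft.ifft2(np.fft.fft2(channel) * csf))


def delta_im_map(ipt_a, ipt_b):
    """Per-pixel Euclidean difference between two (H, W, 3) IPT images."""
    return np.sqrt(np.sum((np.asarray(ipt_a) - np.asarray(ipt_b)) ** 2, axis=-1))
```

With 60 pixels per degree, a one-pixel checkerboard (about 42 cycles/degree along the diagonal) is attenuated to well under 1% of its amplitude, while a uniform field passes unchanged.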

Figure 8: An example of the utility of an image difference metric. Reproduction A, with an imperceptible change from the original (added sub-threshold noise), has an average ΔE*ab of 2.5 and ΔIm of 0.5. Reproduction B, with a clearly perceived change (a green banana), has an average ΔE*ab of 1.25 and ΔIm of 1.5.

A summary image difference metric can then be computed using various statistics of the image difference map, such as the mean, median, maximum, 95% quantile, and standard deviation. Each statistical summary provides unique and important information on the image differences. For example, the mean has been used as a good descriptor of psychophysically measured perceived sharpness [Johnson and Fairchild, 2000], contrast [Calabria and Fairchild, 2003a,b], and preference [Fernandez et al., 2003] scales. Ninety-five-percent quantiles are useful for identifying image regions with significant perceptual perturbations, such as when a single object has been manipulated in an image. Lastly, an overall image quality metric can be derived from summary image difference statistics computed between a given image and a reference image defined as an optimum reproduction (or perhaps the original scene, if accurate reproduction is the objective). Figure 8 illustrates how an image difference metric can correctly ignore the introduction of imperceptible noise into an image. Figure 9 illustrates the prediction of the perceived contrast data of Calabria and Fairchild [2003a,b] by the mean ΔIm across a variety of images. Ideally, the plot in Fig. 9 should show ΔIm growing linearly as contrast changes in either direction from the standard at 0.00. The model predictions correlate quite well with scaled contrast.
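The summary statistics listed above can be computed directly from a difference map. The function name and dictionary keys below are hypothetical; `np.percentile` uses linear interpolation by default.

```python
import numpy as np


def summarize_difference_map(diff_map):
    """Summary statistics of a per-pixel image difference map (any shape)."""
    d = np.asarray(diff_map, dtype=float).ravel()
    return {
        "mean": float(d.mean()),
        "median": float(np.median(d)),
        "max": float(d.max()),
        "p95": float(np.percentile(d, 95)),  # 95% quantile
        "std": float(d.std()),
    }
```

For a 10 × 10 map in which a change of 1.0 is confined to one row (10% of the pixels), the median stays at 0 and the mean is only 0.1, but the 95% quantile reaches the full 1.0. This illustrates why quantiles help flag a localized manipulation that the mean dilutes.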

Figure 9: Mean iCAM image difference, ΔIm, as a function of psychophysically scaled perceived contrast. The image with a perceived contrast of 0.00 was taken as the standard for the difference computations. Mean ΔIm values are unsigned.

Details of the formulation and testing of the iCAM-based image difference metric can be found in Fairchild and Johnson [2004]. While the metric has yet to be extended to the temporal domain, it would be a simple matter to add temporal contrast sensitivity functions to the present spatial contrast sensitivity functions to allow prediction of flicker and other temporal artifacts. Temporal adaptation would also have to be treated as described in the following section.

Such image difference metrics have applications in several areas of computer graphics and visualization. For example, an image difference metric could be used to guide an iterative rendering technique, concentrating effort on areas where successive iterations still produce perceptible improvements and stopping iteration in areas where no further improvement can be perceived. In visualization, an image difference metric can be used to confirm that significant differences in data magnitude are rendered as visually significant differences in the visualized image, and that equal increments in data magnitude map to equal increments of perceived color change within the spatial and temporal context of the image.
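As a sketch of the iterative-rendering idea, the hypothetical helper below compares two successive iterations tile by tile and reports which tiles still change perceptibly. It assumes both iterations have already been converted to spatially filtered IPT images; the tile size and threshold are illustrative assumptions, not values from this paper.

```python
import numpy as np


def tiles_still_changing(prev_ipt, curr_ipt, tile=16, threshold=0.5):
    """Boolean mask of shape (tiles_y, tiles_x).

    True where the mean per-pixel IPT difference between successive iterations
    exceeds the (hypothetical) threshold, i.e. where rendering should continue.
    Edge pixels that do not fill a whole tile are ignored.
    """
    diff = np.sqrt(np.sum((np.asarray(curr_ipt) - np.asarray(prev_ipt)) ** 2, axis=-1))
    h, w = diff.shape
    ty, tx = h // tile, w // tile
    blocks = diff[:ty * tile, :tx * tile].reshape(ty, tile, tx, tile)
    return blocks.mean(axis=(1, 3)) > threshold
```

A renderer could keep refining only the tiles flagged `True` and leave the rest as converged.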

6 Video Rendering Concepts

Fairchild and Johnson [2003] have completed the preliminary work required to extend the iCAM model, as presented in this paper, to rendering HDR digital video. The main enhancement was the inclusion of a temporal integrating function for deriving the adaptation image used as input for rendering any given frame. This temporal integration function was derived from previous research on the time course of chromatic adaptation [Fairchild and Reniff, 1995] and uses the previous 10 seconds of video to set the state of adaptation, with the most recent frames weighted most heavily.
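The temporal integration can be sketched as a weighted average of low-pass adaptation frames over the last 10 seconds, with weights that increase toward the most recent frame. This is not the Fairchild and Reniff [1995] time-course function: the exponential form, the 30 frames-per-second rate, and the 2-second time constant are hypothetical placeholders chosen only to show the structure.

```python
import numpy as np


def temporal_weights(n_frames, tau_frames):
    """Normalized weights for n_frames, oldest first; they grow toward the newest frame."""
    age = np.arange(n_frames - 1, -1, -1, dtype=float)  # newest frame has age 0
    w = np.exp(-age / tau_frames)  # hypothetical exponential decay with frame age
    return w / w.sum()


def adaptation_frame(history, fps=30, window_s=10.0, tau_s=2.0):
    """Weighted average of the low-pass frames in `history` (oldest first).

    Only frames inside the integration window (window_s seconds) contribute.
    """
    n = min(len(history), int(round(fps * window_s)))
    recent = np.stack(history[-n:])  # shape (n, H, W, 3)
    return np.tensordot(temporal_weights(n, tau_s * fps), recent, axes=1)
```

Seeding `history` with dark frames before the first video frame gives the qualitative behavior described for Fig. 10: the adaptation state starts low, so early frames render too bright, and it then rises toward the scene level as bright frames accumulate.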

Figure 10: Frames from an HDR video rendered using iCAM with spatial and temporal modeling of adaptation. See text for full description.

The resulting rendering effect is similar to what one experiences on moving through a significant change in lighting conditions (for example, turning on the bathroom lights on a dark morning right after waking up). At first, the change in viewing conditions is overwhelming, but the visual system then quickly adapts and the scene settles to a more stable perception. This form of the model has applications in video rendering and video quality measurement (with an appropriate set of temporal contrast sensitivity functions). For example, a motion picture with a large dynamic range could be rendered with this model to produce a similar visual experience when viewed as a DVD on a lesser display in suboptimal viewing conditions.

Figure 10 illustrates some video frames from an HDR video rendering completed with the temporal iCAM model. An HDR video was created via pan-and-scan through a still HDR image (the memorial scene from

The first frame of video (Fig. 10a) illustrates the state of dark adaptation (upper right) and the rendered video as overwhelmingly bright (lower right; like first opening your eyes in the morning). After ten seconds, the visual system has had time to adapt to the scene (Fig. 10b), adapting more strongly to the brighter areas (upper right). The rendered video frame (lower right) then appears more normal and much like the final iCAM rendering of that segment of the still image. Fig. 10c shows the same information for another area of the scene, viewed at the end of the video.

7 Conclusions and Future Directions

This paper has introduced a relatively new area of research in color imaging science that has been described as image appearance modeling, described an example of such a model called iCAM, presented the results of a recent psychophysical experiment to test iCAM and several other algorithms for tone mapping HDR images, and outlined some other applications of image appearance models for image and video rendering and quality.

It is expected that this natural combination of previously disparate research areas (color appearance, image quality, rendering and visualization) will continue to result in novel models of human visual performance and a wide variety of forthcoming technological applications. Potential applications include:

Digital Cinema,

HDR Digital Photography,

Image Quality Assessment and Prediction,

Imaging Systems Modeling and Simulation,

Visualization and Rendering Optimization,

Digital Compositing,

Display Independent Rendering and Encoding,

Full Utilization of Future Display Technologies, and

others yet to be imagined.

8 Acknowledgements

The research presented in this paper has been supported by Fuji Photo Film, the Munsell Color Science Laboratory, and Eastman Kodak. The authors thank the many observers who have taken part in the various psychophysical experiments used to develop and test image appearance models and other algorithms.

References

BRAUN, G.J. AND FAIRCHILD, M.D. 1999. Image lightness rescaling through sigmoidal contrast enhancement functions. In Proceedings of IS&T/SPIE Electronic Imaging ’99: Device Independent Color, Color Hardcopy, and Graphic Arts IV, 96-105.

CALABRIA, A.J. AND FAIRCHILD, M.D. 2003a. Perceived image contrast and observer preference I: The effects of lightness, chroma, and sharpness manipulations on contrast perception. J. Imaging Sci. & Tech. 47, 479-493.

CALABRIA, A.J. AND FAIRCHILD, M.D. 2003b. Perceived image contrast and observer preference II: Empirical modeling of perceived image contrast and observer preference data. J. Imaging Sci. & Tech. 47, 494-508.

DURAND, F. AND DORSEY, J. 2002. Fast bilateral filtering for the display of high-dynamic-range images. In Proceedings of SIGGRAPH 2002, Annual Conference Series, ACM, 257-265.

EBNER, F. AND FAIRCHILD, M.D. 1998. Development and testing of a color space (IPT) with improved hue uniformity. In Proceedings of IS&T/SID 6th Color Imaging Conference, 2-7.

FAIRCHILD, M.D. AND RENIFF, L. 1995. Time-course of chromatic adaptation for color-appearance judgements. J. Opt. Soc. Am. A 12, 824-833.

FAIRCHILD, M.D. AND JOHNSON, G.M. 2002. Meet iCAM: A next-generation color appearance model. In Proceedings of IS&T/SID 10th Color Imaging Conference, 33-38.

FAIRCHILD, M.D. AND JOHNSON, G.M. 2003. Image appearance modeling. In Proceedings of IS&T/SPIE Electronic Imaging Conference, Vol. 5007, 149-160.

FAIRCHILD, M.D. AND JOHNSON, G.M. 2004. The iCAM framework for image appearance, differences, and quality. J. Electronic Imaging 13, 126-138.

FAIRCHILD, M.D. 2004. Color Appearance Models, 2nd Ed., IS&T/Wiley.

FATTAL, R., LISCHINSKI, D. AND WERMAN, M. 2002. Gradient domain high dynamic range compression. In Proceedings of SIGGRAPH 2002, Annual Conference Series, ACM, 249-256.

FERNANDEZ, S., JOHNSON, G.M. AND FAIRCHILD, M.D. 2003. Statistical summaries of iCAM image-difference maps. In Proceedings of IS&T PICS Conference, 108-113.

FUNT, B., CIUREA, F. AND MCCANN, J. 2000. Retinex in Matlab. In Proceedings of IS&T/SID 8th Color Imaging Conference, 112-121.

JOHNSON, G.M. AND FAIRCHILD, M.D. 2000. Sharpness rules. In Proceedings of IS&T/SID 8th Color Imaging Conference, 24-30.

JOHNSON, G.M. AND FAIRCHILD, M.D. 2003. Rendering HDR images. In Proceedings of IS&T/SID 11th Color Imaging Conference, 36-41.

KUANG, J., YAMAGUCHI, H., JOHNSON, G.M. AND FAIRCHILD, M.D. 2004. Testing HDR image rendering algorithms. In Proceedings of IS&T/SID 12th Color Imaging Conference, submitted.

MORONEY, N. 2000. Local color correction using non-linear masking. In Proceedings of IS&T/SID 8th Color Imaging Conference, 108-111.

REINHARD, E., STARK, M., SHIRLEY, P. AND FERWERDA, J. 2002. Photographic tone reproduction for digital images. In Proceedings of SIGGRAPH 2002, Annual Conference Series, ACM, 267-276.

WARD LARSON, G., RUSHMEIER, H. AND PIATKO, C. 1997. A visibility matching tone reproduction operator for high dynamic range scenes. IEEE Trans. on Vis. & Comp. Graphics 3, 291-306.


多功能6位电子钟说明书 一、原理说明: 1、显示原理: 显示部分主要器件为2位共阳红色数码管,驱动采用PNP型三极管驱动,各端口配有限流电阻,驱动方式为扫描,占用P1.0~P1.6端口。冒号部分采用4个Φ3.0的红色发光,驱动方式为独立端口驱动,占用P1.7端口。 2、键盘原理: 按键S1~S3采用复用的方式与显示部分的P3.5、P3.4、P3.2口复用。其工作方式为,在相应端口输出高电平时读取按键的状态并由单片机支除抖动并赋予相应的键值。 3、迅响电路及输入、输出电路原理: 迅响电路由有源蜂鸣器和PNP型三极管组成。其工作原理是当PNP型三极管导通后有源蜂鸣器立即发出定频声响。驱动方式为独立端口驱动,占用P3.7端口。 输出电路是与迅响电路复合作用的,其电路结构为有源蜂鸣器,4.7K定值电阻R16,排针J3并联。当有源蜂鸣器无迅响时J3输出低电平,当有源蜂鸣器发出声响时J3输出高电平,J3可接入数字电路等各种需要。驱动方式为迅响复合输出,不占端口。 输入电路是与迅响电路复合作用的,其电路结构是在迅响电路的PNP型三极管的基极电路中接入排针J2。引脚排针可改变单片机I/O口的电平状态,从而达到输入的目的。驱动方式为复合端口驱动,占用P3.7端口。 4、单片机系统: 本产品采用AT89C2051为核心器件(AT89C2051烧写程序必须借助专用编程器,我们提供的单片机已经写入程序),并配合所有的必须的电路,只具有上电复位的功能,无手动复位功能。 二、使用说明: 1、功能按键说明: S1为功能选择按键,S2为功能扩展按键,S3为数值加一按键。 2、功能及操作说明:操作时,连续短时间(小于1秒)按动S1,即可在以上的6个功能中连

外表重要吗Is Appearance Important When I was very small, I was always educated by my parents and teachers that appearance was not important, and we shouldn’t deny a person by his face, only the beautiful soul could make a person shinning. I kept this words in my heart, but as I grow up, I start to question about the importance of appearance. It occupies some places in my heart. People will make judgement of another person in first sight. The one who looks tidy and beautiful will be surely attract the public’s attention and becomes the spotlight. On the contrary, if the person looks terrible, he will miss many chances to make friends, because he will be ignored by the first sight. It is true that an attractive people is confident and has the charm, and everybody will appreciate his character. So before you go out of the house, make sure you look good. 在我很小的时候,我的父母和老师总是教育我外表是不重要的,我们不能通过外表而去否认一个人,因为只有美丽的心灵才能使人发光。我心里一直把这些话牢记于心,但是当我长大后,我开始怀疑关于外貌的重要性,因为外貌在我心里占据了一定分量。人们会根据第一印象来判断一个人。那些看起来整洁,漂亮的人肯定会吸引更多公众的
