Analysis and Synthesis of Cooperation between Modalities

Jean-Claude Martin

LIMSI-CNRS

BP133, 91403 Orsay Cedex, France

martin@limsi.fr

Abstract

Analysis and synthesis of natural modalities is a long term field of research. In this paper we provide a survey of some studies in this domain and also describe our own approach on both the analysis of cooperation between modalities (in human-human communication and human-computer communication), and on the synthesis of cooperation between modalities in Embodied Conversational Agents. We focus on the cooperation between gestures and speech in deictics.

1Introduction

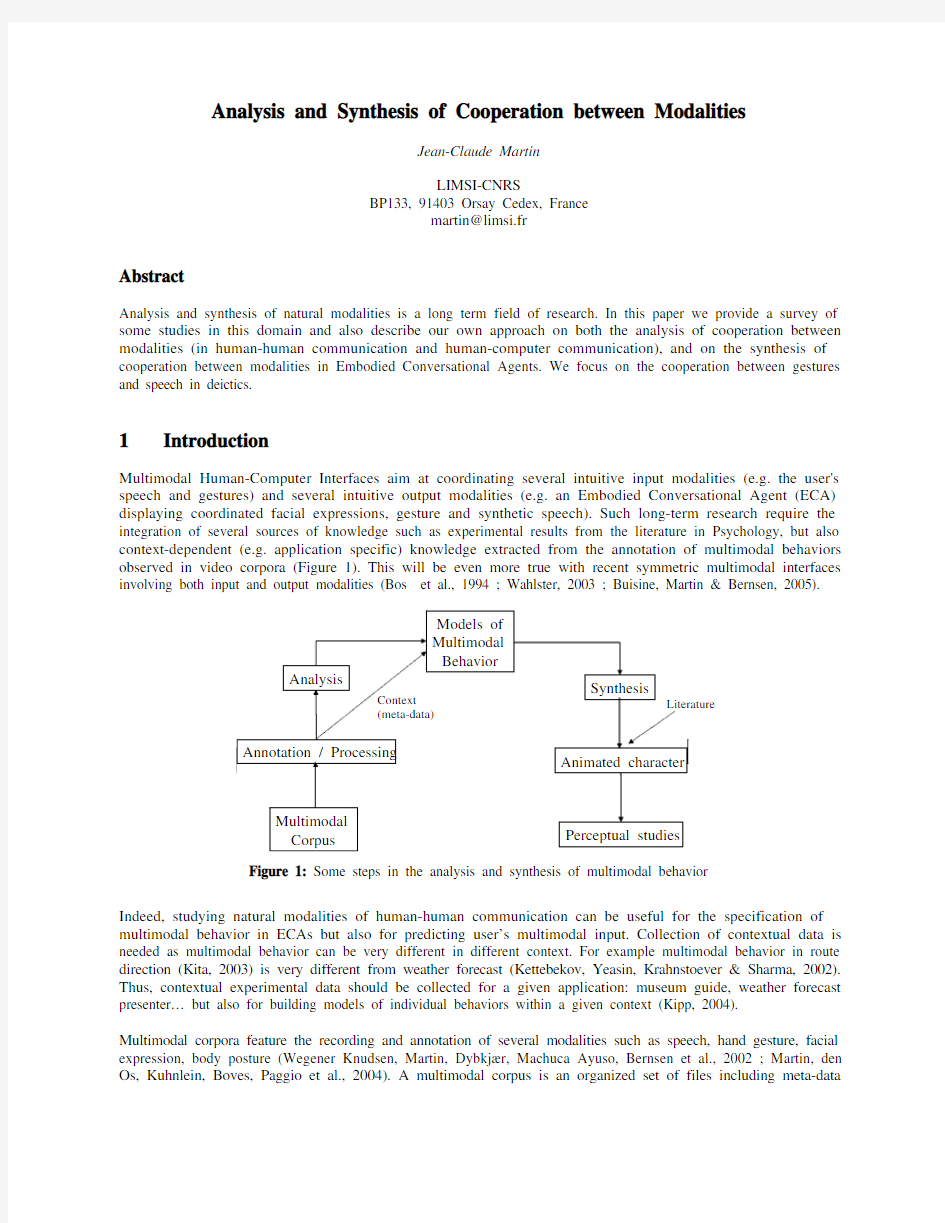

Multimodal Human-Computer Interfaces aim at coordinating several intuitive input modalities (e.g. the user's speech and gestures) and several intuitive output modalities (e.g. an Embodied Conversational Agent (ECA) displaying coordinated facial expressions, gesture and synthetic speech). Such long-term research require the integration of several sources of knowledge such as experimental results from the literature in Psychology, but also context-dependent (e.g. application specific) knowledge extracted from the annotation of multimodal behaviors observed in video corpora (Figure 1). This will be even more true with recent symmetric multimodal interfaces involving both input and output modalities (Bos et al., 1994 ; Wahlster, 2003 ; Buisine, Martin & Bernsen, 2005).

Figure 1: Some steps in the analysis and synthesis of multimodal behavior

Indeed, studying natural modalities of human-human communication can be useful for the specification of multimodal behavior in ECAs but also for predicting user’s multimodal input. Collection of contextual data is needed as multimodal behavior can be very different in different context. For example multimodal behavior in route direction (Kita, 2003) is very different from weather forecast (Kettebekov, Yeasin, Krahnstoever & Sharma, 2002). Thus, contextual experimental data should be collected for a given application: museum guide, weather forecast presenter… but also for building models of individual behaviors within a given context (Kipp, 2004).

Multimodal corpora feature the recording and annotation of several modalities such as speech, hand gesture, facial expression, body posture (Wegener Knudsen, Martin, Dybkj?r, Machuca Ayuso, Bernsen et al., 2002 ; Martin, den Os, Kuhnlein, Boves, Paggio et al., 2004). A multimodal corpus is an organized set of files including meta-data

(recording conditions, context), media files (video / audio), trackers/sensors data, logged files from recognition modules, documentation (annotation guide, protocol description and annotation example, subject's answers to questionnaires). Various settings can involve recording of natural modalities: interaction with a multimodal system, human-human conversations, naturalistic or acted emotional (Douglas-Cowie, Campbell N., Cowie R. & Roach P., 2003). The analysis of such corpora requires several steps such as the segmentation and formatting of videos, the multi-level manual or semi-automatic annotation, the automatic processing of annotations and statistical analysis. Some tools are available for assisting the various steps involved in the annotation process such as Praat for audio processing, Anvil (Kipp, 2001) for the annotation of multimodal behavior, and SPSS for statistical analysis.

In this paper we survey some of the studies from the literature focusing on multimodal deictic behavior in either human-human communication or human-computer interaction (both input and output). We also describe our own approach for the annotation, the analysis and the generation of cooperation between modalities.

Several gesture classifications have been proposed (McNeill, 1992 ; Kipp, 2004) including adaptors, emblems, deictics, iconics, metaphorics, and beats. In this paper we will focus on cooperation between references in speech and deictic gestures. “Deictic gestures are pointing movements, which are prototypically performed with the pointing finger, although any extensible object or body part can be used, including the head, nose, or chin, as well as manipulated artifacts” (McNeill, 1992). Multimodal deictics have been studied in human-human communication, multimodal input interfaces and embodied conversational agents.

2

Studies of multimodal deictic

2.1 Deictic in human-human communication

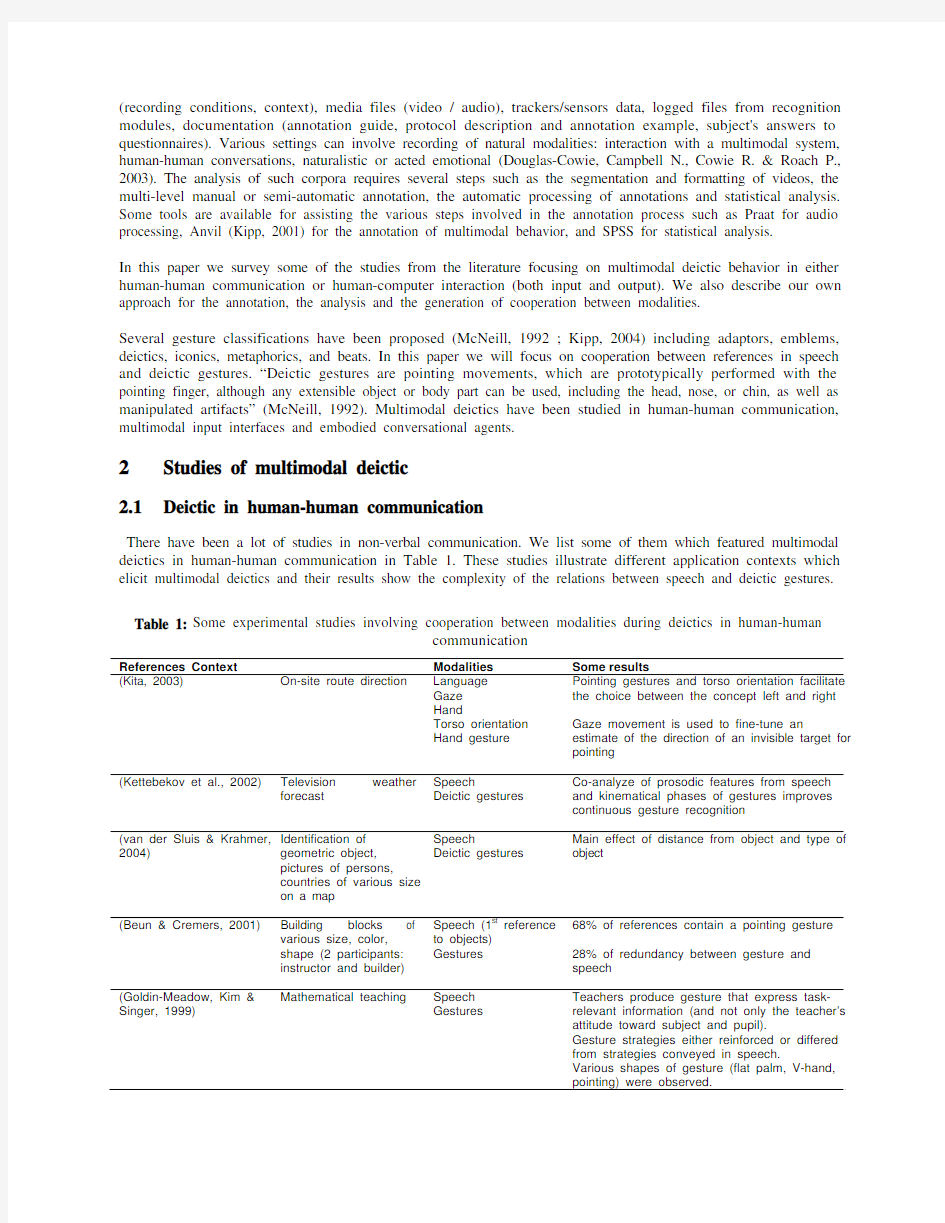

There have been a lot of studies in non-verbal communication. We list some of them which featured multimodal deictics in human-human communication in Table 1. These studies illustrate different application contexts which elicit multimodal deictics and their results show the complexity of the relations between speech and deictic gestures.

Table 1: Some experimental studies involving cooperation between modalities during deictics in human-human

communication

References Context Modalities Some results

(Kita, 2003) On-site route direction Language Gaze Hand Torso orientation Hand gesture Pointing gestures and torso orientation facilitate

the choice between the concept left and right

Gaze movement is used to fine-tune an

estimate of the direction of an invisible target for

pointing

(Kettebekov et al., 2002) Television weather forecast Speech Deictic gestures Co-analyze of prosodic features from speech

and kinematical phases of gestures improves

continuous gesture recognition

(van der Sluis & Krahmer, 2004) Identification of geometric object, pictures of persons, countries of various size

on a map

Speech Deictic gestures Main effect of distance from object and type of object

(Beun & Cremers, 2001) Building blocks of various size, color, shape (2 participants: instructor and builder) Speech (1st reference to objects) Gestures 68% of references contain a pointing gesture

28% of redundancy between gesture and

speech

(Goldin-Meadow, Kim & Singer, 1999) Mathematical teaching Speech Gestures Teachers produce gesture that express task-

relevant information (and not only the teacher’s

attitude toward subject and pupil).

Gesture strategies either reinforced or differed

from strategies conveyed in speech.

Various shapes of gesture (flat palm, V-hand, pointing) were observed.

2.2Deictic in multimodal input interfaces

Several classifications have been proposed for studying user’s multimodal input in multimodal interfaces: time and fusion (Nigay & Coutaz, 1993), a typology of semantic cooperation between modalities (equivalence, specialization, redundancy, complementarity, transfer) (Martin, Julia & Cheyer, 1998). Behaviors in different modalities can also

be inconsistent or independent. Several studies have tackled the relations between gesture and speech in multimodal input systems involving these temporal and semantic dimensions of user’s multimodal input (Table 2).

Table 2: Some experimental studies involving cooperation between modalities in human-computer interaction References Application Modalities

Some

results

(Huls, Claassen & Bos, 1995) File manager Typed text

Mouse pointing

gestures Variety in use of referring expressions

(Oviatt, De Angeli & Kuhn, 1997) Map task Speech

Pen gestures

2D graphics 60% of multimodal constructions do not contain

any spoken deictic

(Oviatt & Kuhn, 1998) Map task Speech

Pen gestures

2D graphics Most common deictic terms are “her”, “there”, “this”, “that”. Explicit linguistic specification of definite and indefinite reference is less common compared to speech only.

(Kranstedt, Kühnlein & Wachsmuth, 2003) Assembly task Speech

3D gestures

3D graphics

Temporal synchronization

between speech and pointing

Influence of spatio-temporal

restrictions of the environment

(density of objects) on deictic

behavior

2.3Deictic in Embodied Conversational Agents

Deictic is one of the classical non-verbal behaviors displayed by Embodied Conversational Agents (Kipp, 2004), even more often in a pedagogical context. Such characters sometime use a pointer as in the EDWARD system (Bos , Huls & Claassen, 1994) or the PPP agent (André & Rist, 1996). In PPP, the type, location and duration of deictic gestures are computed at runtime. Cosmo is a pedagogical agent in a learning environment for the domain of Internet packet routing (Lester, Towns, Callaway, Voerman & P., 2000). It features a deictic behavior planner which exploits a world model and the evolving explanation plan as it selects and coordinates locomotive, gestural, and speech behaviors. Other applications include route description in a 3D environment such as in the Angelica system (Theune, Heylen & Nijholt 2004). Its architecture involves sequential steps (content determination, microplanning, realization) for the generation of deictic behavior. The virtual presenter (in various applicative contexts such as weather presentations or presentations of 3D environments) described in (Noma, Zhao & Badler, 2000), uses several forms of deictics: basic pointing gesture, move in palm direction (to show a map/chart), pointing with palm back (covering larger area), pointing with palm down (emphasize phrase or list item).

Yet, the deictic behaviors of these ECAs seldom uses knowledge extracted from application specific corpus (Kipp, 2004). The Rea ECA uses probabilistic rules for posture shift generation that are rooted in empirical data (Cassell, Nakano, Bickmore, Sidner & Rich, 2001). The Max ECA has been enhanced by empirical results (Kranstedt et al., 2003). Some researchers have also driven experimental studies for designing or validating individual algorithms such as reference generation but not integrated in the design of an ECA (van der Sluis & Krahmer, 2004).

3Illustrative examples from our own approach

3.1Studying deictics in human-human communication

Presentations in a pedagogical context are an rich source of deictic multimodal behavior (Goldin-Meadow et al., 1999). We have videotaped some students presentations (Figure 2) and observed various shapes of deictic gestures involving either palm or fingers (Martin, Réty & Bensimon, 2002).

Figure 2: Frame displaying multimodal behavior from a collective student presentation (Martin et al., 2002) 3.2Deictic in multimodal input interfaces

Several dimensions can be used for classifying multimodal behavior of users in multimodal input interfaces. For example, in (Kehler, 2000), the combinations of speech and pen input from users in a multimodal map application have been analyzed (Figure 3). It was found that the interpretation of referring expressions can be computed with very high accuracy using a model which pairs an impoverished notion of discourse state with a simple set of rules that are insensitive to the type of referring expression used. We have designed a coding scheme for studying cooperation between speech and gesture in deictic expressions during this map task (Martin, Grimard & Alexandri, 2001). This coding scheme features the annotation of available "referenceable" objects and the annotation of references to such objects in each modality. A corpus of multimodal behavior is annotated as a multimodal session which includes one referenceable objects section and one or more multimodal segments. Each multimodal segment is made of a speech segment, a gesture segment, the annotation of temporal relation between these two segments and a graphics segment. Software has been developed for parsing such descriptions and for computing metrics measuring the cooperation between modalities such as redundancy and complementarity (Figure 4).

Figure 3: Recording of user's speech and pen gesture when interacting with a multimodal map application (Kehler,

2000 ; Cheyer, Julia & Martin, 2001)

Figure 4: Multimodal salience values are computed for each object (y-axis) in each multimodal segment (x-axis) (Martin et al., 2001)

In (Buisine & Martin, 2005), the observed patterns of the multimodal behaviors of users interacting with 2D ECAs were classified as: redundancy, complementarity, dialogical complementarity (e.g. two sequential turns in a dialogue are achieved via different modalities), concurrency, multimodal repetition. In (Gustafson, Boye, Bell, Wirén, Martin et al., 2004), the authors have classified the observed patterns of multimodal behaviors of users interacting with 3D ECAs according to the spoken utterance occurring along the gesture: accept, correction, deictic pronoun, redundant reference, contradicting reference. In the user’s multimodal speech combined with 2D gestures when interacting with a 3D character, (Buisine et al. 2005) have observed a few cases of plural affordance of single objects (e.g. the graphical object is represented internally as a single object but elicit a plural gestural or spoken behavior such as “can you tell me about these two” while pointing on a statue representing two characters). The perceptual properties of objects need to be encoded in the system as they might appear for a given user ; (Landragin, Bellalem & Romary, 2001) proposed such a model for multimodal fusion integrating speech, gestures and graphics.

3.3Deictic in Embodied Conversational Agents

As described above, cooperation between modalities during deictic behavior were used in several pedagogical agents for driving attention such as in Cosmo (Lester, Voerman, Towns & Callaway, 1999) and Steve (Rickel & Johnson, 2000). In the technical presentations experiments described in (Buisine, Abrilian & Martin, 2004), the multimodal behavior of the LEA agents during deictics was specified manually from rules extracted from the literature (Figure 5) in three multimodal strategies including speech only, complementary and redundant multimodal reference. The multimodal strategies proved to influence subjective ratings of quality of explanation, in particular for male users.

You should use this round button in the middle of the remote.

Figure 5: A 2D animated character manually specified from the literature with a redundant strategy (Buisine et al.,

2004)

The specification of the multimodal behavior of Embodied Conversational Agents (ECA) often propose a direct and deterministic one-step mapping from high-level specifications of dialog state or agent emotion onto low-level specifications of the multimodal behavior to be displayed by the agent (e.g. facial expression, gestures, vocal utterance). The difference of abstraction between these two levels of specification makes difficult the definition of such a complex mapping. We proposed an intermediate level of specification based on combinations between modalities (e.g. redundancy, complementarity). Such intermediate level specifications can be described using XML in the case of deictic expressions. We defined algorithms for parsing such descriptions and generating the corresponding multimodal behavior of 2D cartoon-like conversational agents (Abrilian, Martin & Buisine, 2003). Some random selection has been introduced in these algorithms in order to induce some "natural variations" in the agent's behavior (Figure 6).

Figure 6: The XML specification (left-hand side) drives the ECA to a random selection of 70% of the attributes of the object #3 in the gesture, gaze and speech modalities (right-hand side)

4Conclusions and future directions

In this paper we have described a general approach for the analysis and synthesis of multimodal behaviors, and surveyed several studies on multimodal deictic. A multimodal deictic behavior can be represented according to several dimensions:

?its function

?the perceptual properties of the targeted graphical objects: number and plural/singular affordance, distance between the hand and the object, static or dynamic objects, geometry (2D point or area, 3D

objects), distractors,

?the temporal properties (duration, repetition) of the individual modalities involved (speech, gaze, head, locomotion) and their temporal relations (e.g. keeping hand on gestured object while gazing at

listener),

?the hand-shape, handedness and movement features of hand gestures.

Although they are required for providing context-dependent knowledge on multimodal behavior, multimodal corpora have several difficulties and requirements including the need to record new data in a given context, the required size of data, the speed of annotation, the fast access and statistics computation, the unclear possibility of reuse in another context, and difficulties in corpus validation. Two types of multimodal corpora are possible depending on these requirements and availability: detailed annotations of short videos (using the Anvil software) or light annotations of long videos (using manual annotation with Excel). Literature should also be used to drive the protocol for collecting experimental data.

Deictic has a main function of focus but can also be combined with other communicative functions or behavior including emotional expression (e.g. deictics with beats). Within the Humaine Network of Excellence (HUMAINE 2004-2008) we are contributing to the collection and the annotation of a video corpus of emotional behaviors (Abrilian, Buisine, Devillers & Martin, 2005 ; Abrilian, Martin & Devillers, 2005). We intend to use it for the modeling of relations between multimodal behaviors and emotions. One future use of such models is the specification of embodied conversational agents involving combinations of communicative and emotional behaviors.

References

Abrilian, S., Martin, J.-C., & Buisine, S. (2003). Algorithms for controlling cooperation between output modalities

in 2D Embodied Conversational Agents.Proceedings of Proceedings of the Fifth International Conference on Multimodal Interfaces (ICMI'2003), November 5-7, pp. 293-296.

Abrilian, S., Martin, J.-C., & Devillers, L. (2005). A Corpus-Based Approach for the Modeling of Multimodal Emotional Behaviors for the Specification of Embodied Agents.Proceedings of HCI International 2005, 22 - 27 July.

Abrilian, S., Buisine, S., Devillers, L., & Martin, J.-C. (2005). EmoTV1: Annotation of Real-life Emotions for the Specification of Multimodal Affective Interfaces.Proceedings of HCI International 2005, 22 - 27 July.

André, E., & Rist, T. (1996). Coping with temporal constraints in multimedia presentation planning.Proceedings of Thirteenth National Conference on Artificial Intelligence, pp. 142-147.

Beun, R.-J., & Cremers, A. (2001). Multimodal Reference to Objects: An Empirical Approach.Proceedings of Cooperative Multimodal Communication : Second International Conference (CMC'98). Revised Papers

Editors: (Eds.):, January 28-30.

Bos , E., Huls , C., & Claassen, W. (1994). EDWARD: full integration of language and action in a multimodal user interface. International Journal of Human-Computer Studies,40(3), 473-495.

Buisine, S., & Martin, J.-C. (2005). Children’s and Adults’ Multimodal Interaction with 2D Conversational Agents. Proceedings of CHI'2005, 2-7 April.

Buisine, S., Abrilian, S., & Martin, J.-C. (2004). Evaluation of Individual Multimodal Behavior of 2D Embodied Agents in Presentation Tasks. In Z. Ruttkay & C. Pelachaud (Eds.), From Brows to Trust. Evaluating Embodied Conversational Agents, Vol. 7.

Buisine, S., Martin, J.-C., & Bernsen, N.O. (2005). Children's Gesture and Speech in Conversation with 3D Characters.Proceedings of HCI International 2005, 22-27 July 2005.

Cassell, J., Nakano, Y.I., Bickmore, T.W., Sidner, C.L., & Rich, C. (2001). Non-verbal cues for discourse structure. Proceedings of 39th Annual Meeting of the Association for Computational Linguistics, pp. 106-115.

Cheyer, A., Julia, L., & Martin, J.-C. (2001). A Unified Framework for Constructing Multimodal Experiments and Applications. In H. Bunt, Beun, R.J., Borghuis, T. (Ed.), Cooperative Multimodal Communication, (pp. 234-242): Springer.

Douglas-Cowie, E., Campbell N., Cowie R., & Roach P. (2003). Emotional speech: towards a new generation of databases. Speech communication,40.

Goldin-Meadow, S., Kim, S., & Singer, M. (1999). What the teacher's hand tell the student mind about math. Journal of Educational Psychology(91), 720-730.

Gustafson, J., Boye, J., Bell, L., Wirén, M., Martin, J.-C., Buisine, S., & Abrilian, S. (2004). NICE Deliverable

D2.2b. Collection and analysis of multimodal speech and gesture data in an edutainment application.

Huls, C., Claassen, W., & Bos, E. (1995). Automatic Referent Resolution of Deictic and Anaphoric Expressions. Computational Linguistics,21(1), 59-79.

Kehler, A. (2000). Cognitive Status and Form of Reference in Multimodal Human-Computer Interaction. Proceedings of 17th National Conference on Artificial Intelligence (AAAI-2000), July, 2000.

Kettebekov, S., Yeasin, M., Krahnstoever, N., & Sharma, R. (2002). Prosody Based Co-analysis of Deictic Gestures and Speech in Weather Narration Broadcast.Proceedings of Workshop on "Multimodal Resources and Multimodal Systems Evaluation", Conference On Language Resources And Evaluation (LREC'2002), pp. 57-62.

Kipp, M. (2001). Anvil - A Generic Annotation Tool for Multimodal Dialogue.Proceedings of Eurospeech'2001.

Kipp, M. (2004). Gesture Generation by Imitation. From Human Behavior to Computer Character Animation. Florida: Boca Raton, https://www.doczj.com/doc/b54945556.html,.

Kita, S. (2003). Interplay of Gaze, Hand, Torso Orientation, and Language in Pointing. In S. Kita (Ed.), Pointing. Where Language, Culture, and Cognition Meet, (pp. 307-328). London: Lawrence Erlbaum Associates.

Kranstedt, A., Kühnlein, P., & Wachsmuth, I. (2003). Deixis in Multimodal Human Computer Interaction: An Interdisciplinary Approach.

Landragin, F., Bellalem, N., & Romary, L. (2001). Visual Salience and Perceptual Grouping in Multimodal Interactivity. Proceedings of First International Workshop on Information Presentation and Natural Multimodal Dialogue, pp. 151-155.

Lester, J.C., Voerman, J.L., Towns, S.G., & Callaway. (1999). Deictic believability: coordinating gesture, locomotion and speech in lifelike pedagogical agents. Applied Artificial Intelligence(13), 383-414.

Lester, J.C., Towns, S.G., Callaway, C.B., Voerman, J.L., & P., F. (2000). Deictic and emotive communication in animated pedagogical agents. In J. Cassell, Sullivan, J., Prevost, S., Churchill, E. (Eds.). (Ed.), Embodied Conversational Agents, (pp. p 123-154): The MIT Press.

Martin, J.C., Julia, L., & Cheyer, A. (1998). A Theoretical Framework for Multimodal User Studies.Proceedings of Conference on Cooperative Multimodal Communication, Theory and Applications (CMC'98), 28-30 January. Martin, J.C., Grimard, S., & Alexandri, K. (2001). On the annotation of the multimodal behavior and computation of cooperation between modalities.Proceedings of Proceedings of the workshop on "Representing, Annotating, and Evaluating Non-Verbal and Verbal Communicative Acts to Achieve Contextual Embodied Agents" in conjunction with the 5th International Conference on Autonomous Agents (AAMAS'2001), May 29, pp. 1-7.

Martin, J.C., Réty, J.H., & Bensimon, N. (2002). Multimodal and Adaptative Pedagogical Resources.Proceedings of 3rd International Conference on Language Resources and Evaluation (LREC'2002), 29-31 may.

Martin, J.-C., den Os, E., Kuhnlein, P., Boves, L., Paggio, P., & Catizone, R. (2004). Workshop "Multimodal Corpora: Models Of Human Behaviour For The Specification And Evaluation Of Multimodal Input And Output Interfaces".Proceedings of In Association with the 4th International Conference On Language Resources And Evaluation LREC2004 https://www.doczj.com/doc/b54945556.html,/lrec2004/index.php, 25th may.

McNeill, D. (1992). Hand and mind - what gestures reveal about thoughts: University of Chicago Press.

Nigay, L., & Coutaz, J. (1993). A design space for multimodal systems : concurent processing and data fusion. Proceedings of INTERCHI’93, April, pp. 172-178.

Noma, T., Zhao, L., & Badler, N. (2000). Design of a Virtual Human Presenter. IEEE Journal of Computer Graphics and Applications,20(4), 79-85.

Oviatt, S., & Kuhn, K. (1998). Referential features and linguistic indirection in multimodal language.Proceedings of International Conference on Spoken Language Processing (ICSLP-98).

Oviatt, S., De Angeli, A., & Kuhn, K. (1997). Integration and synchronization of input modes during multimodal human-computer interaction.Proceedings of Human Factors in Computing Systems (CHI'97), pp. 415-422. Rickel, J., & Johnson, W.L. (2000). Task-oriented collaboration with embodied agents in virtual worlds. In J.S. Cassell, J.; Prevost, S.; Churchill, E. (Ed.), Embodied Conversational Agents, (pp. 95 - 122): Cambridge, MA: MIT Press.

Theune, M., Heylen, D., & Nijholt , A. (2004). Generating Embodied Information Presentations. In O. Stock & M. Zancanaro (Eds.), Multimodal Intelligent Information Presentation, (pp. 47-70): Kluwer Academic Publishers.

van der Sluis, L., & Krahmer, E. (2004). Production Experiments For Evaluating Multimodal Generation. Wahlster, W. (2003). SmartKom: Symmetric multimodality in an adaptive and reusable dialogue shell.Proceedings of Human Computer Interaction Status Conference, pp. 47-62.

Wegener Knudsen, M., Martin, J.-C., Dybkj?r, L., Machuca Ayuso, M.-J., Bernsen, N.O., Carletta, J., Heid, U., Kita, S., Llisterri, J., Pelachaud, C., Poggi, I., Reithinger, N., van Elswijk, G., & Wittenburg, P. (2002). Survey of Multimodal Annotation Schemes and Best Practice. ISLE Natural Interactivity and Multimodality. Working Group Deliverable D9.1.