Challenges Towards Elastic Power Management in Internet Data Centers

Jie Liu Microsoft Research Microsoft Corp. Redmond,WA98052 liuj@https://www.doczj.com/doc/bc8980139.html,

Feng Zhao,

Microsoft Research

Microsoft Corp.

Redmond,WA98052

zhao@https://www.doczj.com/doc/bc8980139.html,

Xue Liu

School of Computer Science

McGill University

Montreal,Canada,H3A2A7

xueliu@cs.mcgill.ca

Wenbo He

Computer Science

University of New Mexico

Albuquerque,NM87131

wenbohe@https://www.doczj.com/doc/bc8980139.html,

Abstract

Data Centers are energy consuming facilities that host Internet services such as cloud computing platforms.Their complex cyber and physical systems bring unprecedented challenges in resource managements.In this paper,we give an overview of the resource provisioning and utilization patterns in data centers and propose a macro-resource management layer to coordinate among cyber-and-physical resources.We review some existing work and solutions in the?eld and explain their limitations.We give some future research directions and the potential solutions to jointly optimize computing and environmental resources in data centers.

1.Introduction

The world today relies as much on its cyber-infrastructure as its physical infrastructure.Mobile devices,personal com-puters,and servers connected by wireless,broadband and ?ber networks are substantial assets for individuals,enter-prises,and societies.On-line services,such as Web contents, E-mails,storage,social networking,entertainment and e-commerce,become intrinsic parts of everyday life.As a result,the IT industry became the fastest growing sector in US energy consumption.As a central part of the IT infrastructure,the number of servers and their resource consumptions grow exponentially.The rated power con-sumptions of servers have increased by10times over the past ten years.A recent study estimates that the worldwide spending on enterprise and data center power and cooling to top$30billion in year2008and is likely to even surpass spending on new server hardware[1].

The trend continues with the emergent of Cloud Comput-ing,where data and computation hosting are outsourced to Internet Date Centers(IDCs),for reliability,management, and cost bene?ts[2],[3],[4],[5].IDCs use economies of scale and statistical multiplexing to amortize the total cost of ownership over a large number of machines,shared system operators,and variety of workloads.It uses geographical distribution and replication to improve data reliability and to reduce access latency.The surging demand of software as services calls for the urgent need of designing and deploy-ment of energy-ef?cient Internet Data Centers.Therefore, the environmental impacts of data centers,especially in term of energy consumptions,become part of the social responsibility of the IT industry.

Furthermore,the cost of constructing multi-megawatt data centers is staggering.It takes several hundred million dollars to construct the facility,and another several hundred million dollars for the computing and networking devices.Among facility costs,the electrical and mechanical systems for power distribution and cooling constitute the biggest portion [6].Thus,fully utilizing their capacities is as important as ef?ciently operating data centers.

Low power and energy ef?cient computing have been long standing topics in computer hardware and software design.Low power chipsets are fast emerging partly because of the adoption of dynamic voltage and frequency scaling (DVFS)[7],[8]and chip multiprocessing[9].Power-saving features have been built into commercial operating systems. Recently,efforts such as the Climate Savers Computing Initiative intended to help lower worldwide computer energy consumption by promoting the adoption of high-ef?ciency power supplies and encouraging the use of power-savings features already present in users’equipment.

However,we argue in this paper that the individual ma-chine level(or micro-level)resource management does not provide complete solutions for energy-ef?cient date centers. Due to the scale and complexity of data center computing, resource demands in IDCs can change dramatically.Services are interdependent.Workload is diverse.Servers can be re-purposed within minutes.A user request can hit hundreds or even thousands of machines located in distant data centers in split of a second.These activities must be coordinated with physical resources such as power and cooling to ensure high operation ef?ciency.In addition to maintain request/response service quality,it is also desirable to fully utilize data center design capacities without wasting capital investments. Thus,the resource management mechanisms have to be elastic.We view federated data centers as a cyber-physical system that spans across the globe.They need to track user demands,allocate suf?cient resources to diverse applica-tions,and try to ful?ll designed capacity.In this

elastic

2009 29th IEEE International Conference on Distributed Computing Systems Workshops 1545-0678/09 $25.00 ? 2009 IEEE

DOI 10.1109/ICDCSW.2009.44

65

resource management paradigm,new challenges emerge for the design,operation,and power management at the data centers level(or macro-level).While service demands rise, data centers must ramp up resources–computing,power distribution,and cooling–to provide desired quality of service.While demands recede,resources can be reclaimed and dynamically allocated to other services.These chal-lenges cannot be solved by computing systems alone(such as application organizations,load distribution,or machine virtualization)or physical systems alone(such as power dis-tribution and cooling control).It needs close coordinations between the cyber-activities and physical activities.

In the rest of this paper,we?rst give an overview of the typical architecture in data centers in section2.The we review some preliminary work in this domain in section3. We describe the challenges and future research directions in sections4and5respectively.Finally,we conclude the paper in section6.

2.The Physical Infrastructure in Data Centers Only part of the total energy consumed by a data center is used in powering up the servers.Others are lost in power distribution and used to run the cooling system.A holistic power management scheme,therefore,needs to consider both the physical infrastructure and the computing activities in data centers.

Data centers can have vastly different footprints,from mega data centers that employ hundreds of thousands of servers and consume hundreds of mega Watts to edge data centers that can be loaded on a truck and serve a small community.In this session,we use a tier-2data center with hot and cold aisles as an example to give a brief overview of the physical infrastructure of data centers..

2.1.Power Distribution and Cost

A tier-2data center,providing99.741%availability[6], is typical for hosting Internet services.Figure1shows the power distribution system for a tier-2data center[10],[11]. Power drawn from the grid is transformed and conditioned to charge the UPS system(based on batteries or?ying wheels). The uninterrupted power is distributed through power dis-tribution units(PDUs)to supply power to the server and networking racks.This portion is called critical power,since it is used to perform“useful work.”The power is also used by water chillers,computer room air conditioning(CRAC) systems,and humidi?ers to provide appropriate temperature and humidity conditions for electronic devices.

The power capacity of a data center is primarily de?ned by the capability of the UPS system,both in terms of steady load handling and surge withstand.For well managed data centers,it is the maximum instantaneous power

consumption

Figure1:An illustration of power distribution tiers in a data center.

d

Z

?

??????

Figure2:An illustration of an air cooled data center on raised?oors.

from all servers allocated to each UPS unit determines how may servers can a data center host.

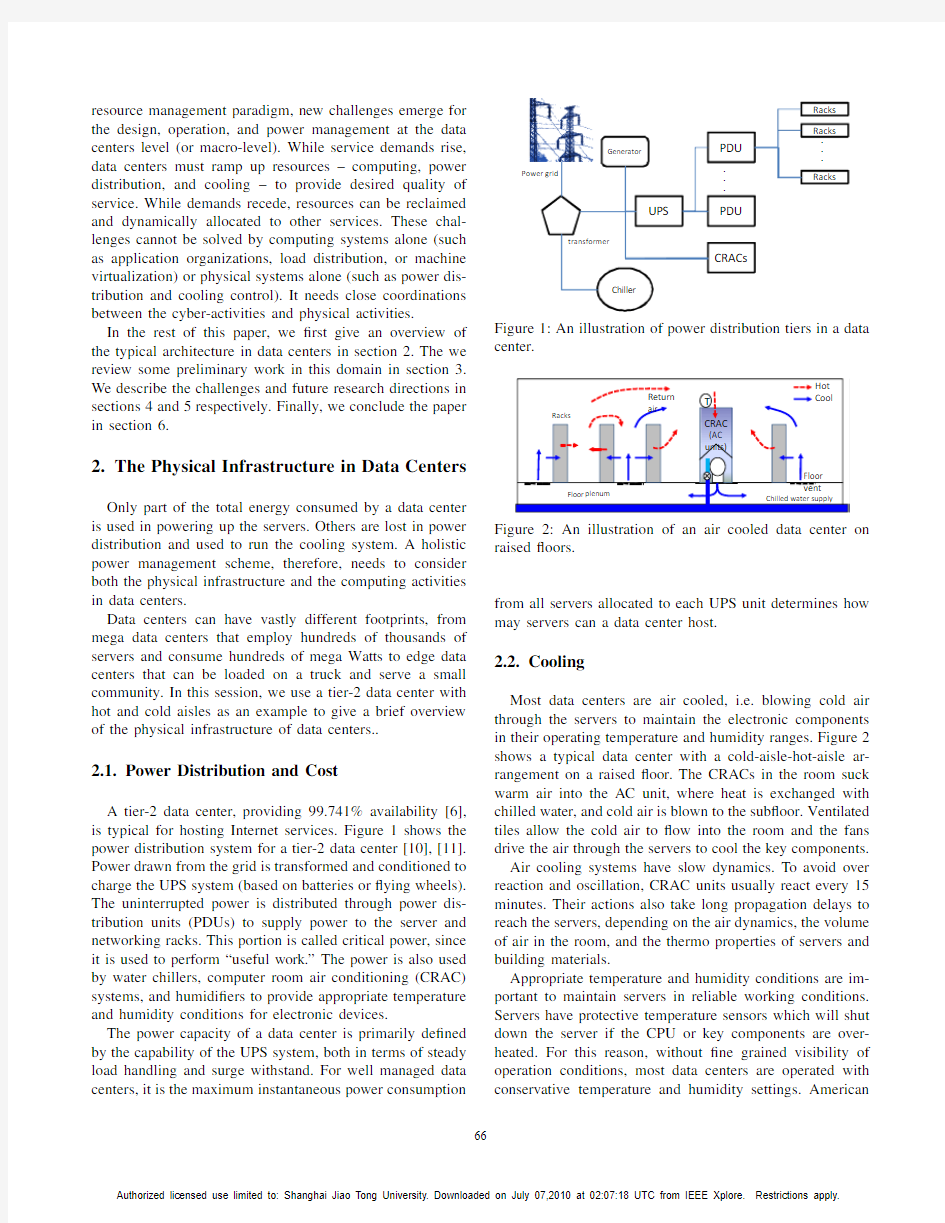

2.2.Cooling

Most data centers are air cooled,i.e.blowing cold air through the servers to maintain the electronic components in their operating temperature and humidity ranges.Figure2 shows a typical data center with a cold-aisle-hot-aisle ar-rangement on a raised?oor.The CRACs in the room suck warm air into the AC unit,where heat is exchanged with chilled water,and cold air is blown to the sub?oor.Ventilated tiles allow the cold air to?ow into the room and the fans drive the air through the servers to cool the key components. Air cooling systems have slow dynamics.To avoid over reaction and oscillation,CRAC units usually react every15 minutes.Their actions also take long propagation delays to reach the servers,depending on the air dynamics,the volume of air in the room,and the thermo properties of servers and building materials.

Appropriate temperature and humidity conditions are im-portant to maintain servers in reliable working conditions. Servers have protective temperature sensors which will shut down the server if the CPU or key components are over-heated.For this reason,without?ne grained visibility of operation conditions,most data centers are operated with conservative temperature and humidity settings.American

Society of Heating,Refrigerating,and Air-Conditioning Engineers (ASHREA)recommended that data centers be operated between 20o C to 25o C and 30%to 45%relative humidity.However,excessive cooling does not necessarily improve reliability or reduce device failure rates [12].

Maintaining a stringent operation conditions within a slow cooling-dynamic environment is expensive.This is evidenced by most data centers have power utilization effectiveness (PUE,de?ned as the total power consumed by the data center over the total power used to power computing devices.)close to 2.Recently,the industry has moved to extensive use of air-side economizers,https://www.doczj.com/doc/bc8980139.html,ing outside air to cool data centers directly,rather than relying on energy consuming water chillers.However,the temperature and humidity of outside air change continuously,bringing additional challenges to cooling control.

3.Elasticity of Data Center Computing

To understand the resource management challenges in data center computing,we give a brief description on the cyber-dynamics of software as services.

The demand for data center software services experience natural ?uctuation and spikes.For example,Figure 3shows the number of total users connected to Windows Live Messenger and the login rates of new users over a week,normalized to 1million users and 1400users/second login rate.We see that the number of users in the early afternoon is almost twice as much as those after midnight,and the total demand in weekdays are higher than that in weekends.We can also see the ?ash crowd effects,where a large number of users login in a short period of time.Similar demand variations were reported recently by other research studies [13],[14],[15].

Time in hours

L o g i n r a t e (p e r s e c o n d )

N u m b e r o f c o n n e c t i o n s (m i l l i o n s )

Figure 3:The load variation,in terms of total number of users and the new user login rates,of Messenger services,normalized to 5million users.

Another example of demand variation was reported in [5].

“When Animoto made its service available via Facebook,it experienced a demand surge that resulted in growing from 50servers to 3500servers in three days...After the peak subsided,traf?c fell to a level that was well below the peak.”The drastic demand variations require software applica-tions in data centers to be elastic.That is,they can take advantage of server-level parallelism to scale out in addition to scale up.They must easily be replicated and migrated at anytime and to anywhere.They can leverage data cen-ter level software infrastructures such as MapReduce [16],Dryad [17],and EC2[3],to perform data intensive parallel operations.Their performances can degrade gracefully when reaching resource limitations.

On the contrary to the slow cooling dynamics in data centers,the changes in computing activity are https://www.doczj.com/doc/bc8980139.html,ers expect sub-second response time from web pages.For mod-ern Internet application,each user request may hit hundreds to thousands of servers at various locations,which in turn,generates a power consumption spike of certain size at the servers (magnitudes depending on the computational load).Load balancing policies are usually updated at the scale of minutes,while VM migration or server repurpose may happen at the time scale of days or weeks.

3.1.Oversubscription of Resources

Elastic software applications bring opportunities for mul-tiplexing data center resources.Traditionally,data center resources are over-provisioned for every application.That is,the number of servers each application gets is large enough to handle the most severe workload in the near future.Power distribution and cooling capacity are also allocated to handle the worst case scenario that every server will reach their peaks at the same time.However,static server allocation is wasteful.The servers will have signi?cant lower utilization off the peak time.Although one can consolidate the load and shut down part of the servers for energy saving [18],the idled servers still strain the capacity of the data centers and the overall utilization from a data center point of view is low.In an elastic computing paradigm,if one application’s resource requirement is low,one can ?nd other services to ful?ll the exceeded capacity by re-purposing the servers.Thus,the utilization of the facility as a whole can be improved.

Oversubscription is a widely adopted strategy by service industries such as airlines to improve capacity utilization and amortize infrastructure cost.The host oversells its services to the extent that if every subscriber uses the services at the same time,the capacity will be exceeded.However,due to the statistical variations of utilization,with overwhelming probability,the host is safe and can maximize the return of its infrastructure investment.Oversubscription is a key to maximize the utilization of data center capacities.

3.2.Macro-Resource Management

Elastic software applications and resource oversubscrip-tion require coordinations beyond individual machines or homogeneous clusters.Coordinations must be taken place at the macro-scale,such as services of various roles,service to server assignment,network topologies and bandwidth allo-cations,load balancers,power capping policies,and cooling sensitivity and ef?ciencies,For example,what applications are best to share the same physical server?How much do services depend on each other?How do different tiers scale when user demands increase or decrease?How much can resources,e.g.power can be oversubscribed?How to protect the safety of the facility in the rare events that the demand exceeds the capacity?How much advantage can one take on air economizer?Where to migrate power consuming operations to best utilize cooling and power conversion ef?ciency across data centers without sacri?cing user experience?All these decisions need to be taken at the time scale of demand variations rather than monthly or seasonally manual resource adjustments.They need to be taken at the scale of a data center or multiple data centers rather than within a server or a cluster of servers.

W?∥?? ě?????????????ě ????????

>??ě ě??????????

????>??ě

W??????????W?∥?? ??????

?????????

^????? ???????????????? ??????????? ??∥?? ě???? ????????

?????? ???????

s&^? ???????????????sDD? ????? ????h???????????W???????????

????? d??ě?????

???????????^???????W??????????W?∥??

^????????? Θ ??????????????????????

?????

^> >??ě ???????????

W???? ??ě??

Figure 4:An architectures for tiered resource provisioning and management.

A macro-resource management layer is shown in Fig-ure 4to make coordination decisions across applications and across physical facilities.It takes information such as service-level agreement (SLA),application structures,and environmental conditions,and physical facility constraints from facility and application designs;monitors the operation status from application,system,and physical data collected over and across data centers;and makes decisions that affect power provisioning,cooling control,server allocation,service placement,load balancing,and job priorities.An

important role for macro-resource management is to build and re?ne models to predict performance impacts and risks on resource allocation decisions and to diagnose possible failures.Such models may in turn become abstractions that application and facility designers can use to re?ne their designs so resource utilizations can be further optimized.Although illustrated as a single unit,the macro-resource management layer is by no means centralized.It may consist of multiple sub-layers that are distributed over server clusters and data centers.How to organize this layer to perform desired coordination with ef?cient communication among submodules is a challenging research problem.

4.Microfoundations for Macro-Resource Man-agement

Macro-resource management policies rely on low-level power management “knobs”that we call the micro-foundations.In this section,we give a brief survey on power management technologies developed over the past years.

4.1.Device Architecture

Since CPU power consumption contributes a large fraction of the server’s overall power consumption,most previous ef-forts in computing system’s power management adopt CPU energy-ef?cient techniques more aggressively than other sys-tem components.Chip Multi-Processing (CMP)technology (multi-core)has a great impact in the power management in CPUs.It will make the thread-level parallelism available,using the transistor and energy budget on additional cores is more likely to yield higher performance,especially for CPU-intensive workloads [19],[9].Heterogeneous CMPs has fur-ther potentials to selectively use cores with different power and performance trade-offs to meet workload variation.

4.2.Dynamic Voltage and Frequency Scaling (DVFS)

Most of the components in servers,such as the CPU,hard drive,and main memory now have the adjustable https://www.doczj.com/doc/bc8980139.html,ponents are fully operational,but consume more power in high-power states while having degraded functionality in low-power states.For example,the C0working state of a modern-day CPU can be divided into the so called P-states (performance states)which allow clock rate reduction and T-states (throttling states)which will further throttle down a CPU (but not the actual clock rate)by inserting STPCLK (stop clock)signals and thus omitting duty cycles.Lowering supply voltage and slowing down clock signals are very powerful ways to reduce the energy consumption when high performance is not required [20],[7].In the article [21],the control-based DVFS policy combined with request batching

has been proposed,which trades off the system response capabilities to power savings and implements a feedback control framework to maintain a speci?c response time level. Another work has been speci?ed in[22];Flautner et al. adopt the performance-setting algorithms for different kinds of workload,and implements the DVFS policy on per-task basis.

4.3.Sleep(ON/OFF)Scheduling

The most effective and aggressive power saving comes from turning off components that are not used.This concept can be applied at many levels.Many components and devices,such as CPU,disk,memory,servers and routers, consume substantial power when it is turned on,even with no active workload.For example,studies have shown that a powered on server with zero workload consumes about60% of its peak power[10],[18].Turning these devices off is the only way to eliminate the idle power consumption.Core parking is a technique to selectively turn off cores to reduce CPU power consumption.Banks of memory can be turned off when not being used[17].Large sections of storage can be turned off under appropriate?le system and caching scheme.Similar concepts have been explored to putting networking devices to sleep for energy conservation[23]. There are several challenges for ON/OFF controls.First, workload needs to be routed properly to remaining active systems to preserve application performance.Secondly,it takes time to wake up a slept component(or server),and sometime,this wakeup process may consume more energy and offset the bene?t of sleeping.

4.4.Virtual Machine Management

With the resurgence of the Virtual Machine(VM)tech-nology(such as Xen,VMWare and Microsoft Hyper-V), VMs could share the conventional hardware in a secure and resource-managed fashion while each VM is hosting its own operating system and applications.The Virtual Machine Monitor(VMM)would provide the support for the resource management in such a shared hosting platform, which enables applications such as server-consolidation[24], [25],co-located hosting facilities and even the distributed web services[26].

Nathuji et al.have proposed an online power management to support the isolated and independent operation assumed by VMs running on a virtualized platform and globally coordinate the diverse power management strategies applied by the VMs to the virtualized resource[27].They utilize the”Virtual Power”to represent the’soft’versions of the hardware power state,to facilitate the deployment of the power management policies.In order to map the’soft’power state to the actual changes of the underlying virtualized resource,the Virtual Power Management(VPM)state,chan-nels,mechanisms,and rules are implemented as the multiple system level abstraction.

Another potential bene?t of using VMs is to dynamically migrate VMs(and the services running on them)to improve resource utilizations on active servers.And through doing so,shut down inactive servers.However,how to group VMs together remains challenging since hardware resource utilization across VMs are not additive.For example,due to disk contention,putting two disk IO intensive applications on the same host machine may cause signi?cant throughput degradation.

4.5.Cooling Management

As a non-trivial portion of the total data center energy consumption is attributed to the cooling system,it is im-portant to understand how well the cooling systems per-form.However,most data centers do not have sophisticated cooling management systems.The temperature and humidity conditions in server rooms at set very conservatively to eliminate any possible hot spots.

There are two phases of cooling management-static and dynamic ones.Static management,or cooling provi-sioning,affects the data center designs,such as room size, rack layout,locations of fans,and the use of outside air through air-side economizers.This is usually done through computation?uid dynamics(CFD)simulation of the air?ow and heat exchange[28].Once the data center is built,little can be done to change the static cooling capacity without large capital investment.The dynamic aspect of cooling management has to do with how to adaptively provide cooling to servers that need it when they need it.Servers only need enough cooling to keep them running reliably. Those servers at the OFF state or with very light workload do not need to be cooled.However,since the cooling system usually knows nothing about the states of the servers,it cannot make intelligent decisions on dynamic cooling.The Dynamic Smart Cooling system controls cold air?ow by changing the patterns on ventilated?oor tiles.

With latest advances in sensing,especially wireless sensor networks,we are able to collect data center environmental conditions at a?ne granularity.The ground truth data are more accurate than the simulation,and gathering those bridges the gaps between servers and CRAC systems.

5.A Few Research Directions

The dynamics of cyber and physical activities in data centers scale across nine orders of magnitude,from millisec-onds to years,and from milli-watts to mega-watts.Cyber activities,in terms of transaction response time,application process switching,and power mode intervals,have time scale of milliseconds,while the life time of servers and

data centers,which determines the capacity of power and mechanical systems last for years.The same spectrum holds for power consumption.While individual chips and components may only consume milli-watts,a data center as

a while consumes mega-watts.

5.1.Modeling and Control

Despite the extensive research on power management at chip,server,and data center levels,challenges remains, especially when integrating techniques at different layers together.

For example,researchers have shown that the composition of power state adjustment and on/off control may actually hurt energy saving goals if performed without coordina-tion[29].When the system is underloaded,the DVFS policy reduces the frequency of a processor,increasing system utilization.This will eventually increase the end-to-end delay of the system.Increased delay may cause the (DVS oblivious)On/Off policy to consider the system to be overloaded,hence turning more machines on to cope with the problem.This may cause the DVS policy to slow down the machines further,leading the On/Off policy to turn more of them on.The energy expended on keeping a larger number of machines on may not necessarily be offset by DVS savings.Hence,the resulting cycle may lead to poor energy performance,even despite the fact that both the DVS and On/Off policies have the same energy saving goal. Oblivious interactions can also happen between servers and cooling system.In[30],the authors analyzed the sen-sitivity of CRAC with respect to the heat generated by the servers.Data collected from sensors and CRAC shows that the CRAC regulate temperature much better at some locations than others inside a colo.Consider a scenario in which locations A and B rely on the same CRAC to provide cool air.The CRAC can be extremely sensitive to servers at location A,while not sensitive to servers at locations B.Consider now that we migrate load from servers at location A to servers at location B and shut down the servers at A.The CRAC then believes that there is not much heat generated in its effective zone and thus increases the temperature of the cooling air.However,because the CRAC is not sensitive to the heat generated at location B,the active servers,albeit having extra workload,do not have enough cool air supply.Servers at B are then at risk of generating thermal alarms and shutting down.

A holistic control and coordination framework for IDCs needs to deal with a variety of models,from continu-ous dynamics,to discrete events,to mode changes.Given the temporal and spatial scale of the problem,statistical modeling,queuing theory,network information theory,and feedback control theories all play important roles.5.2.Cyber-Physical Co-Design

Once an application dynamics and the resource constraints are well understood,it is desirable to co-design the software and the physical infrastructure to maximize resource utiliza-tion.The challenge for data center co-design is the lack of consistent abstraction and modularity in computing and physical dynamics.

On the physical aspect,modularity comes from power and cooling enclosure.For example,individual server blades form blade servers to share power supply and cooling fans;servers are preassembled into racks for easiness of deployment;and more recently,thousands of servers and corresponding power distribution system and cooling system are pre-manufactured into container sized units as deploy-ment modules.The cyber-modularity is very different,from processes,to virtual machines,to clusters,and to services. The physical modularity determines the isolation of power provision,power distribution and cooling control,while the cyber-modularity determines how resources are consumed. Due to the fact that different processes stress physical resources differently-some are CPU bound,some are disk IO bound,and some are network bound-it is desirable to break cyber-modularity when assigning processes to phys-ical substrates.For example,two processes,or VMs,from different applications are unlikely to generate power spikes at the same time.This will reduce the probability of power capping.

The interweaving the cyber and physical concerns brings signi?cant challenges on resource management.A central-ized approach will require the controller to gather infor-mation from a huge number of entities and model their complex interactions.At the same time,losing consistency between cyber and physical modularity means it is hard to implement a clean hierarchical approach for resource management.Where to draw the boundaries of cyber and physical modules,and what information to?ow between the boundaries,are key questions for data center level resource management.

5.3.Data Management

Part of the challenge of managing power across nine orders of magnitude is to deal with the amount of data. Archiving and analyzing years of data at?ne granularity (e.g.for system provisioning purposes)is a prohibitively dif?cult.Furthermore,a single on-line application can span across data centers over several continents.Requests can be routed among them in splits of a second.However,certain resource allocations,such as VM migration,turning servers on/off,or changing cool air supply take minutes to make effects.How to structure the systems,what data to sense, how to compress raw data without losing key information,

and what to share across system boundaries are the keys to achieve scalability.

For example,consider a10,000server cloud computing environment,if there are100software performance counters of interests,and each of them are sampled every15seconds, we will expect2.4million data points per minutes.The same data can be used for many different purposes.Take CPU utilization as an example,it can be used to predict long term usage trend(e.g.by performing daily average);to understand usage patterns within a day(e.g.by performing hourly average);to monitor load balancer behavior(e.g.by performing correlations after removing the hourly trend);or to detect anomalies(e.g.by monitoring unusually spikes). Since these queries essentially focuses on data with certain narrow band,preprocessing and indexing the data into multiple scales can speed up the query signi?cantly.At the same time,raw data out of these bands can be considered as noise and be eliminated,thus reducing storage requirements.

6.Conclusion

Data centers are complex cyber-physical systems.They are also unique in the sense that the cyber-activities deter-mine the physical properties such as power consumption. Power management in data centers has to be elastic,accom-modating the dynamics in computing demands.Micro-level power management at device and VM level are restrained to local optimality.A coordination layer must take into account information from both cyber-activities and physical dynamics in data centers to make resource utilization follow the elasticity of software services.This is a challenging goal requiring break through in many research topics,such as sensing,modeling,control,data management,resource and software abstraction,and system design.

References

[1]R.Raghavendra,P.Ranganathan,V.Talwar,Z.Wang,and

X.Zhu,“No“power”struggles:coordinated multi-level power management for the data center,”in ASPLOS XIII: Proceedings of the13th international conference on Archi-tectural support for programming languages and operating systems.New York,NY,USA:ACM,2008,pp.48–59. [2]Microsoft,“Azure service platform,”2008.[Online].

Available:https://www.doczj.com/doc/bc8980139.html,/azure/default.mspx [3]Amazon Web Services LLC,“Amazon elastic computing

cloud(amazon ec2),”2008.[Online].Available:http: //https://www.doczj.com/doc/bc8980139.html,/ec2/

[4]Google,“Google apps,”2008.[Online].Available:http:

//https://www.doczj.com/doc/bc8980139.html,/apps/intl/en/business/index.html

[5]M.Armbrust, A.Fox,R.Grif?th, A. D.Joseph,R.H.

Katz,A.Konwinski,G.Lee,D.A.Patterson,A.Rabkin,

I.Stoica,and M.Zaharia,“Above the clouds:A berkeley

view of cloud computing,”EECS Department,University of California,Berkeley,Tech.Rep.UCB/EECS-2009-28, Feb2009.[Online].Available:https://www.doczj.com/doc/bc8980139.html,/ Pubs/TechRpts/2009/EECS-2009-28.html

[6]K.G.B.W.Pitt Turner IV,J.H.Seader,“Tier classi?ca-

tions de?ne site infrastructure performance,”Uptime Institute, White Paper,Tech.Rep.,2008.

[7]J.R.Lorch and A.J.Smith,“Improving dynamic voltage scal-

ing algorithms with pace,”in SIGMETRICS’01:Proceedings of the2001ACM SIGMETRICS international conference on Measurement and modeling of computer systems.New York, NY,USA:ACM,2001,pp.50–61.

[8]T.Horvath,T.Abdelzaher,K.Skadron,and X.Liu,“Dynamic

voltage scaling in multitier web servers with end-to-end delay control,”Computers,IEEE Transactions on,vol.56,no.4,pp.

444–458,April2007.

[9]L. A.Barroso and U.H¨o lzle,“The case for energy-

proportional computing,”Computer,vol.40,no.12,pp.33–37,2007.

[10]X.Fan,W.-D.Weber,and L.A.Barroso,“Power provisioning

for a warehouse-sized computer,”in ISCA’07:Proceedings of the34th annual international symposium on Computer architecture.New York,NY,USA:ACM,2007,pp.13–

23.

[11] A.Pratt,P.Kumar,and T.Aldridge,“Evaluation of400v dc

distribution in telco and data centers to improve energy ef?-ciency,”in29th Intl.Telecommunications Energy Conference, INTELEC2007.,sept2007,pp.32–39.

[12] E.Pinheiro,W.-D.Weber,and L.A.Barroso,“Failure trends

in a large disk drive population,”in Proceedings of the5th USENIX Conference on File and Storage Technologies(FAST 2007),February2007.

[13]J.Chase,D.Anderson,P.Thakar,A.Vahdat,and R.Doyle,

“Managing Energy and Server Resources in Hosting Centers.”

in SOSP,2001.

[14]T.Heath,B.Diniz,E.V.Carrera,W.M.Jr.,and R.Bianchini,

“Energy conservation in heterogeneous server clusters,”in Proceedings of ACM SIGPLAN Symposium on Principles and Practice of Parallel Programming(PPoPP),2005.

[15]R.Doyle,J.Chase,O.Asad,W.Jin,and A.Vahdat,“Model-

Based Resource Provisioning in a Web Service Utility.”in In Proceedings of the4th USENIX Symposium on Internet Technologies and Systems,2003.

[16]J.Dean and S.Ghemawat,“Mapreduce:Simpli?ed data

processing on large clusters,”in OSDI’04:Sixth Symposium on Operating System Design and Implementation,December 2004.

[17]M.Isard,M.Budiu,Y.Yu,A.Birrell,and D.Fetterly,“Dryad:

distributed data-parallel programs from sequential building blocks,”SIGOPS Oper.Syst.Rev.,vol.41,no.3,pp.59–72, 2007.

[18]G.Chen,W.He,J.Liu,S.Nath,L.Rigas,L.Xiao,

and F.Zhao,“Energy-aware server provisioning and load dispatching for connection-intensive internet services,”in NSDI’08:Proceedings of the5th USENIX Symposium on Networked Systems Design and Implementation.Berkeley, CA,USA:USENIX Association,2008,pp.337–350. [19]L.A.Barroso,“The price of performance,”Queue,vol.3,

no.7,pp.48–53,2005.

[20] D.Grunwald,P.Levis,K.I.Farkas, C. B.M.III,and

M.Neufeld,“Policies for Dynamic Clock Scheduling.”in OSDI,2000.

[21]M.Elnozahy,M.Kistler,and R.Rajamony,“Energy conser-

vation policies for web servers.”in USITS,2003.

[22]K.Flautner and T.Mudge,“Vertigo:automatic performance-

setting for linux,”SIGOPS Oper.Syst.Rev.,vol.36,no.SI, pp.105–116,2002.

[23]S.Nedevschi,L.Popa,G.Iannaccone,S.Ratnasamy,and

D.Wetherall,“Reducing network energy consumption via

sleeping and rate-adaptation,”in NSDI’08:Proceedings of the 5th USENIX Symposium on Networked Systems Design and Implementation.Berkeley,CA,USA:USENIX Association, 2008,pp.323–336.

[24] C. A.Waldspurger,“Memory resource management in

vmware esx server,”SIGOPS Oper.Syst.Rev.,vol.36,no.SI, pp.181–194,2002.

[25]Ensim,“Ensim virtual private servers,”2003.[On-

line].Available:https://www.doczj.com/doc/bc8980139.html,/products/materials/ datasheet vps051003.pdf

[26] A.Whitaker,M.Shaw,and S. D.Gribble,“Denali:

Lightweight virtual machines for distributed and networked applications,”in In Proceedings of the USENIX Annual Tech-nical Conference,2002.

[27]R.Nathuji and K.Schwan,“Virtualpower:coordinated power

management in virtualized enterprise systems,”in SOSP’07: Proceedings of twenty-?rst ACM SIGOPS symposium on Operating systems principles.New York,NY,USA:ACM, 2007,pp.265–278.

[28] E.Samadiani,Y.Joshi,and F.Mistree,“The thermal design

of a next generation datacenter:A conceptual exposition,”

Journal of Electronic Packaging,vol.130,no.4,2008. [29]J.Heo, D.Henriksson,X.Liu,and T.Abdelzaher,“In-

tegrating adaptive components:An emerging challenge in performance-adaptive systems and a server farm case-study,”

Real-Time Systems Symposium,IEEE International,vol.0, pp.227–238,2007.

[30]J.Liu,F.Zhao,J.O’Reilly,A.Souarez,M.Manos,C.-

J.M.Liang,and A.Tersiz,“Project genome:Wireless sensor network for data center cooling,”Microsoft Architecture Journal,Tech.Rep.,Dec2009.

易存云存储系统平台建设 项目方案 北京易存科技 2016-1-25 目录 一、方案概述....................................... (03) 二、方案要求与建设目标.................................0 4 2.1 客户需求分析..................................04 2.2 系统主要功能方案..............................05 三、系统安全方案.................................... (19) 3.1 系统部署与拓扑图...............................19 3.2 文件存储加密...................................21 3.3 SSL协议........................................22 3.4 二次保护机制............................. (23)

3.5 备份与恢复.....................................23 四、系统集成与二次开发.................................24 4.1 用户集成.......................................24 4.2 文件集成.................................... (2) 7 4.3 二次开发.................................... (2) 9 五、典型成功案例.......................................29 六、售后服务体系.................................... (30) 6.1公司概况.......................................30 6.2 服务内容与响应时间............................. 31 一、方案概述 随着互联网时代的到来,企业信息化让电子文档成为企业智慧资产的主要载体。信息流通的速度、强度和便捷度的加强,一方面让我们享受到了前所未有的方便和迅捷,但另一方面也承受着信息爆炸所带来的压力。 传统的文件管理方式已经无法满足企业在业务的快速发展中对文件的安全而高效流转的迫切需求。尤其是大文件的传输与分享,集团公司与分公司,部门与部门之间,乃至与供应商或客户之间频繁的业务往来,显得尤其重要。 文件权限失控严重,版本混乱,传递效率,查找太慢,文件日志无法追溯,历史纸质文件管理与当前业务系统有效整合对接等一系列的问题日渐变的突出和迫切。 该文档描述了北京易存科技为企业搭建文档管理系统平台的相关方案。从海量文件的存储与访问,到文件的使用,传递,在线查看,以及文件的流转再到归档

云课堂 技术解决方案

目录 第1章概述 (2) 第2章现状分析及问题 (3) 2.1方案背景 (3) 2.2教育信息化建设的发展 (3) 2.2云课堂的推出 (4) 第3章云课堂技术解决方案 (5) 3.1云终端方案概述 (5) 3.2云课堂解决方案 (5) 3.2.1 云课堂拓扑图 (5) 3.2.2 云课堂教学环境 (6) 3.2.3 云课堂主要功能 (7) 3.2.4 优课数字化教学应用系统功能 (8) 第4章方案优势 (9) 4.1私密性 (9) 4.2工作连续性 (9) 4.3方便移动性 (9) 4.4场景一致性 (9) 4.5长期积累性 (9) 4.6安全稳定性 (10) 4.7易维护性 (10) 4.8高效性 (10) 第5章实际案例 (11)

第1章概述 随着现代信息技术的飞速发展,越来越多的用户更加注重自身信息架构的简便易用性、安全性、可管理性和总体拥有成本。近几年信息化的高速发展,迫使越来越多的教育机构需要采用先进的信息化手段,解决各机构当前面临的数据安全隔离、信息共享、资源整合等实际问题,实现通过改进机器的利用率降低成本,减少管理时间和降低基础设施成本,提高工作效率。 无论是作为云计算的核心技术,还是作为绿色 IT、绿色数据中心的核心技术,虚拟化已经成为 IT 发展的重要方向,也可以说我们正面临着一场 IT 虚拟化、云计算的革命。这场 IT 虚拟化、云计算的革命正在开始席卷全球。 虚拟化技术在解决信息安全、资源利用率提升、简化 IT 管理、节能减排等方面有着得天独厚的优势,通过虚拟化技术,把数据中心的计算资源和存储资源发布给终端用户共享使用,大幅度提高服务器资源利用率,同时通过严格的访问控制,确保数据中心中所存储的安全性。

云安全管理平台解决方案 北信源云安全管理平台解决方案北京北信源软件股份有限公司 2010 云安全管理平台解决方案/webmoney 2.1问题和需求分析 2.2传统SOC 面临的问题................................................................... ...................................... 4.1资产分布式管理 104.1.1 资产流程化管理 104.1.2 资产域分布 114.2 事件行为关联分析 124.2.1 事件采集与处理 124.2.2 事件过滤与归并 134.2.3 事件行为关联分析 134.3 资产脆弱性分析 144.4 风险综合监控 154.4.1 风险管理 164.4.2 风险监控 174.5 预警管理与发布 174.5.1 预警管理 174.5.2 预警发布 194.6 实时响应与反控204.7 知识库管理 214.7.1 知识共享和转化 214.7.2 响应速度和质量 214.7.3 信息挖掘与分析 224.8 综合报表管理 245.1 终端安全管理与传统SOC 的有机结合 245.2 基于云计算技术的分层化处理 255.3 海量数据的标准化采集和处理 265.4 深入事件关联分析 275.5 面向用户服务的透明化 31云 安全管理平台解决方案 /webmoney 前言为了不断应对新的安全挑战,越来越多的行业单位和企业先后部署了防火墙、UTM、入侵检测和防护系统、漏洞扫描系统、防病毒系统、终端管理系统等等,构建起了一道道安全防线。然而,这些安全防线都仅仅抵御来自某个方面的安全威胁,形成了一个个“安全防御孤岛”,无法产生协同效应。更为严重地,这些复杂的资源及其安全防御设施在运行过程中不断产生大量的安全日志和事件,形成了大量“信息孤岛”,有限的安全管理人员面对这些数量巨大、彼此割裂的安全信息,操作着各种产品自身的控制台界面和告警窗口,显得束手无策,工作效率极低,难以发现真正的安全隐患。另一方面,企业和组织日益迫切的信息系统审计和内控要求、等级保护要求,以及不断增强的业务持续性需求,也对客户提出了严峻的挑战。对于一个完善的网络安全体系而言,需要有一个统一的网络安全管理平台来支撑,将整个网络中的各种设备、用户、资源进行合理有效的整合,纳入一个统一的监管体系,来进行统一的监控、调度、协调,以达到资源合理利用、网络安全可靠、业务稳定运行的目的。云安全管理平台解决方案 /webmoney 安全现状2.1 问题和需求分析在历经了网络基础建设、数据大集中、网络安全基础设施建设等阶段后,浙江高法逐步建立起了大量不同的安全子系统,如防病毒系统、防火墙系统、入侵检测系统等,国家主管部门和各行业也出台了一系列的安全标准和相关管理制度。但随着安全系统越来越庞大,安全防范技术越来越复杂,相关标准和制度越来越细化,相应的问题也随之出现: 1、安全产品部署越来越多,相对独立的部署方式使各个设备独立配置、管理,各产品的运行状态如何?安全策略是否得到了准确落实?安全管理员难以准确掌握,无法形成全局的安全策略统一部署和监控。 2、分散在各个安全子系统中的安全相关数据量越来越大,一方面海量数据的集中储存和分析处理成为问题;另一方面,大量的重复信息、错误信息充斥其中,海量的无效数据淹没了真正有价值的安全信息;同时,从大量的、孤立的单条事件中无法准确地发现全局性、整体性的安全威胁行为。 3、传统安全产品仅仅面向安全人员提供信息,但管理者、安全管理员、系统管理

POWERMAX 无线报警系统编程及操作指南 2006-7-11 一.安装 POWERMAX 无线报警主机 按产品说明书固定安装架,联接电话线, AC 9V 电源线,安装后备电池,使用6节5号AA 电池,跳线放在下边, 使用充电电池,跳线放在上边,主机安装在安装架上. 编程设置时主要使用下列按键: 二.注册无线探测器(ENROLLING) 先对无线探测器编防区号,安装电池,红外探测器J2放在TEST 位置(安装调试完成后J2放在OFF 位置),将红外探测器放回到包装盒里,门磁发射器(MCT-302)和磁体吸俯在一起. 按NEXT 键 直到LCD 显示屏出现 (安装模式) 按 SHOW/OK 键,输入安装员密码(出产设置9999),进入安装模式, 按主机的NEXT 键 直到LCD 显示屏出现 2 ENROLLING (注册) 按 SHOW/OK 键 LCD 显示屏出现 ZONE NO: 输入无线探测器编防区号(两位数字)01 将门磁发射器(MCT-302)与磁体分开,报警主机将收到门磁发射器发出的无线信号,报警主机发出”嘀..嘀..嘀”,表示注册成功 ,门磁发射器(MCT-302)和磁体重新吸俯在一起.继续注册下一个无线探测器 . 按 按

将无线红外探测器从包装盒里取出,触发无线红外探测器, 探测器红灯亮, 报警主机将收到无线红外探测器发出的无线信号,报警主机发出”嘀..嘀..嘀”,表示注册成功, 将红外探测器放回到包装盒里,继续注册下一个无线探测器. 按SHOW/OK键LCD显示屏出现ZONE NO: 输入无线探测器编防区号(两位数字)03 , 报警主机将收到无线烟感探测器发出的无线信号,报警主机发出”嘀..嘀..嘀”,表示注册成功, 继续注册下一个无线探测器. 完成注册无线探测器后,按AW AY键, LCD显示屏出现OK 按SHOW/OK键返回到开机状态 三.注册无线按钮(ENROLLING Keyfob) 按NEXT键直到LCD显示屏出现 按SHOW/OK键,输入安装员密码(出产设置9999),进入安装模式, 按SHOW/OK键LCD显示屏出现Keyfob NO: 输入无线按钮号 1 按无线按钮的任意键, 按钮的红灯亮,发出的无线信号,报警主机发出”嘀..嘀..嘀”,表示注册成功, 继续注册下一个无线按钮. 完成注册无线按钮,按AW AY键, LCD显示屏出现OK 按SHOW/OK键返回到开机状态 四.定义防区类型,名称及门铃功能 按SHOW/OK键,输入安装员密码(出产设置9999),进入安装模式, NO: 输入无线探测器编防区号(两位数字)01

For free samples & the latest literature: https://www.doczj.com/doc/bc8980139.html,, or phone 1-800-998-8800.For small orders, phone 1-800-835-8769. General Description The MAX4664/MAX4665/MAX4666 quad analog switch-es feature 5?max on-resistance. On-resistance is matched between switches to 0.5?max and is flat (0.5?max) over the specified signal range. Each switch can handle Rail-to-Rail ?analog signals. The off-leakage cur-rent is only 5nA max at +85°C. These analog switches are ideal in low-distortion applications and are the pre-ferred solution over mechanical relays in automatic test equipment or in applications where current switching is required. They have low power requirements, require less board space, and are more reliable than mechanical relays. The MAX4664 has four normally closed (NC) switches,the MAX4665 has four normally open (NO) switches, and the MAX4666 has two NC and two NO switches that guarantee break-before-make switching times. These switches operate from a single +4.5V to +36V supply or from dual ±4.5V to ±20V supplies. All digital inputs have +0.8V and +2.4V logic thresholds, ensuring TTL/CMOS-logic compatibility when using ±15V sup-plies or a single +12V supply. Applications Reed Relay Replacement PBX, PABX Systems Test Equipment Audio-Signal Routing Communication Systems Avionics Features o Low On-Resistance (5?max) o Guaranteed R ON Match Between Channels (0.5?max)o Guaranteed R ON Flatness over Specified Signal Range (0.5?max)o Guaranteed Break-Before-Make (MAX4666)o Rail-to-Rail Signal Handling o Guaranteed ESD Protection > 2kV per Method 3015.7o +4.5V to +36V Single-Supply Operation ±4.5V to ±20V Dual-Supply Operation o TTL/CMOS-Compatible Control Inputs MAX4664/MAX4665/MAX4666 5?, Quad, SPST, CMOS Analog Switches ________________________________________________________________Maxim Integrated Products 1 Pin Configurations/Functional Diagrams/Truth Tables 19-1504; Rev 0; 7/99 Ordering Information continued at end of data sheet. Ordering Information Rail-to-Rail is a registered trademark of Nippon Motorola, Ltd.

随着云计算在企业内应用,大多数企业都认识到了云计算的的重要性,因为它可以实现资源分配的灵活性、可伸缩性并且提高了服务器的利用率,降低了企业的成本。但是随着企业信息化程度的越来越高、信息系统支持的业务越来越复杂,管理的难度也越来越大,所以就需要选择一个合理的解决方案来支撑企业信息系统的管理和发展。 云管理平台最重要的两个特质在于管理云资源和提供云服务。即通过构建基础架构资源池(IaaS)、搭建企业级应用、开发、数据平台(PaaS),以及通过SOA架构整合服务(SaaS)来实现全服务周期的一站式服务,构建多层级、全方位的云资源管理体系。那么有没有合适的云管理平台解决方案可以推荐呢? SmartOps作为新一代多云管理平台,经过6年多的持续研发和实际运营,已经逐渐走向成熟,能通过单一入口广泛支持腾讯云、阿里云、华为云、AWS等超大规模公有云的统一监控、资源编排、资产管理、成本管理、DevOps 等管理功能,同时也支持私有云和物理裸机环境的统一纳管。SmartOps平台具有统一门户、CMDB配置

数据库、IT服务管理、运维自动化和监控告警等主要模块,支持客户自助在线处理订单、付款销账、申报问题、管理维护等商务运营流程,而且对客户的管理、交付、技术支持也都完全在平台上运行,这极大提升了整体运营效率并大幅降低成本,业务交付速度更快、自动化程度更高、成本更具竞争力、用户体验更佳。 同时,SmartOps正在构建适应业务创新发展的云管理平台,实现从服务中提炼普惠性的服务方案,并构建软件化、工具化、自动化的快速上线对外提供服务的通道。SmartOps不仅是一个云管平台,也是一个面向企业用户的服务迭代的创新平台,一切有利于企业用户数字化发展的个性化服务,都有可能在普遍落地后实现技术服务产品化、工具化的再输出。不仅如此,下一步,SmartOps还将融入更多的价值,包括借助人工智能的技术,面向企业用户领导决策提供参考价值。借助平台化的管理工具,为企业财务人员提供有价值的成本参

创新管理价值,引导教学未来——云课堂解决方案 一、概述 随着计算机教育的发展,计算机机房在各中小学已经相当普及,这些计算机资源在很大程度上提高了课题的教学效果。同时,随着机房规模的不断扩大,学校需要管理和维护的各种计算机硬件和软件资源也越来越多,而中小学维护力量相对薄弱,如何科学有效地对这些教育资源进行管理已成为各中小学面临的一个难点管理维护问题:很少中小学有专门的机房管理人员,机房维护专业性要求高,工作量大 使用体验问题:PC使用时间一长,运行速度变慢,故障变多 投资保护问题:PC更新换代较快,投资得不到保障 节能环保问题:机房耗电量大,废弃电脑会产生大量电子垃圾 二、方案简介 RCC(Ruijie Cloud Class)云课堂是根据不断整合和优化校园机房设备的工作思路,结合普教广大学校的实际情况编制的新一代计算机教室建设方案。每间教室只需一台云课堂主机设备,便可获得几十台性能超越普通PC机的虚拟机,这些虚拟机通过网络交付给云课堂终端,学生便可体验生动的云桌面环境。云课堂可按照课程提供丰富多彩的教学系统镜像,将云技术和教育场景紧密结合,实现教学集中化,管理智能化,维护简单化,将计算机教室带入云的时代。 三、方案特性 简管理

云课堂采用全新的集中管理技术管理学校所有计算机教室,管理员在云课堂集中管理平台RCC Center中根据教学课程的不同应用软件制作课程镜像, 同步给教室中的云课堂主机设备,老师上课时可根据课程安排一键选择镜像从而随时获得想要的教学环境。 管理员也不用再为记录繁杂的命令而烦恼,云课堂提供全图形控制管理界面,无论虚拟机制作,编辑,还原都只需轻轻一按。云课堂的管理模式可彻底解决机房中常见大量软件安装导致系统臃肿、软件冲突,病毒侵入、教学、考试场景切换工作量大等难题,还可省去Ghost或还原卡的繁杂设置。全校的计算机教室设备监控和软件维护在办公室中即可轻松实现,效率比PC管理提高9倍! 促教学 云课堂三大关键技术,全面提升虚拟机性能,可令终端启动和课程切换加速,教学软件运行更快,并且可以全面控制学生用机行为,杜绝上课开小差的情况发生。 智能镜像加速技术 - 所有定制好的系统镜像会由云课堂主机自动优化,在该技术的支持下,60个虚拟机启动时间只需短短几分钟,同时还提供老师在上课过程中可随时切换学生操作系统的选择,从而轻易改变教学环境,演绎云技术带给传统教学的优化和创新实践。 多级Cache缓存技术 - 实现镜像启动加速、IO加速,使云桌面启动和应用程序运行速度大幅度提升,用户体验远高于市面上其他产品。在该技术帮助下,教师常用教学课件,专用软件启动、运行速度比同配置物理机提升200%,大幅提升用机体验,让学生畅游”云海”,领略“飞”一般的感受! 多媒体教学管理软件防卸载技术–云课堂终端内嵌多媒体教学管理程序,且学生不可见。老师在使用该软件教学时,不会再出现学生因卸载或关闭管理程序而脱离教师的管理现象,大大加强对学生上课行为的控制力度,严肃课堂纪律,教学质量得以保证。 易获得 云课堂是包括课堂主机,课堂终端,多媒体教学管理软件和课堂集中管理平台在内的一套端到端的整体解决方案。其部署过程极其简单,仅需将云课堂主机和云课堂终端相连,在云课堂主机上做一次课程配置,一间全新的计算机教室即建设完成。因省去逐台PC分区设置和系统同传等过程,效率上可提高3小时以上。 同时云课堂终端功耗极低,普通教室不需强电改造即可转型为云课堂,加快校园IT信息化建设的同时,打造绿色校园 更环保 每台云课堂终端设备平均功耗20w,是传统PC机的1/12。且整个终端机身使用一体化设计,无风扇、硬盘等易损元件,寿命比PC机延长20%以上。节省开支的同时大大减少电子垃圾,响应国家倡导的绿色节能号召,创造舒适、低能耗的绿色校园环境。

General Description The MAX3483, MAX3485, MAX3486, MAX3488, MAX3490, and MAX3491 are 3.3V , low-power transceivers for RS-485 and RS-422 communication. Each part contains one driver and one receiver. The MAX3483 and MAX3488 feature slew-rate-limited drivers that minimize EMI and reduce reflections caused by improperly terminated cables, allowing error-free data transmission at data rates up to 250kbps. The partially slew-rate-limited MAX3486 transmits up to 2.5Mbps. The MAX3485, MAX3490, and MAX3491 transmit at up to 10Mbps. Drivers are short-circuit current-limited and are protected against excessive power dissipation by thermal shutdown circuitry that places the driver outputs into a high-imped- ance state. The receiver input has a fail-safe feature that guarantees a logic-high output if both inputs are open circuit. The MAX3488, MAX3490, and MAX3491 feature full- duplex communication, while the MAX3483, MAX3485, and MAX3486 are designed for half-duplex communication.Applications ●Low-Power RS-485/RS-422 Transceivers ●Telecommunications ●Transceivers for EMI-Sensitive Applications ●Industrial-Control Local Area Networks Features ●Operate from a Single 3.3V Supply—No Charge Pump!●Interoperable with +5V Logic ●8ns Max Skew (MAX3485/MAX3490/MAX3491)●Slew-Rate Limited for Errorless Data Transmission (MAX3483/MAX3488)●2nA Low-Current Shutdown Mode (MAX3483/MAX3485/MAX3486/MAX3491)●-7V to +12V Common-Mode Input Voltage Range ●Allows up to 32 Transceivers on the Bus ●Full-Duplex and Half-Duplex Versions Available ●Industry Standard 75176 Pinout (MAX3483/MAX3485/MAX3486)●Current-Limiting and Thermal Shutdown for Driver Overload Protection 19-0333; Rev 1; 5/19 Ordering Information continued at end of data sheet. *Contact factory for for dice specifications. PART TEMP . RANGE PIN-PACKAGE MAX3483CPA 0°C to +70°C 8 Plastic DIP MAX3483CSA 0°C to +70°C 8 SO MAX3483C/D 0°C to +70°C Dice*MAX3483EPA -40°C to +85°C 8 Plastic DIP MAX3483ESA -40°C to +85°C 8 SO MAX3485CPA 0°C to +70°C 8 Plastic DIP MAX3485CSA 0°C to +70°C 8 SO MAX3485C/D 0°C to +70°C Dice*MAX3485EPA -40°C to +85°C 8 Plastic DIP MAX3485ESA -40°C to +85°C 8 SO PART NUMBER GUARANTEED DATA RATE (Mbps)SUPPLY VOLTAGE (V)HALF/FULL DUPLEX SLEW-RATE LIMITED DRIVER/RECEIVER ENABLE SHUTDOWN CURRENT (nA)PIN COUNT MAX3483 0.25 3.0 to 3.6Half Yes Yes 28MAX3485 10Half No No 28MAX3486 2.5Half Yes Yes 28MAX3488 0.25Half Yes Yes —8MAX3490 10Half No No —8MAX349110Half No Yes 214MAX3483/MAX3485/MAX3486/MAX3488/MAX3490/MAX3491 Selection Table Ordering Information 找电子元器件上宇航军工

浪潮私有云平台解决方案云计算的发展 近几年,国内外IT信息技术快速发展,以云计算为代表的新兴技术已经为解决传统IT信息化建设困局找到了突破性的解决方案,并已经在国内企业、政府、金融、电信等众多关键领域取得了成功。 云计算是一种按使用量付费的模式,这种模式提供可用的、便捷的、按需的网络访问,进入可配置的计算资源共享池(资源包括网络,服务器,存储,应用软件,服务),这些资源能够被快速提供,只需投入很少的管理工作,或与服务供应商进行很少的交互。 云计算分为三种服务模式:软件即服务(SaaS)、平台即服务(PaaS)、基础设施即服务(IaaS)。 云计算根据部署部署方式的不同分为:公有云(Public Cloud)、私有云(Private Cloud)、社区云(Community Cloud)、混合云(Hybrid Cloud)。 其中私有云是为一个客户单独使用而构建的,因而提供对数据、安全性和服务质量的最有效控制。私有云可部署在企业数据中心的防火墙内,也可以部署在一个安全的主机托管场所,私有云的核心属性是专有资源。主要优势体现在以下方面: 1.数据安全 虽然每个公有云的提供商都对外宣称其服务在各方面都是非常安全,特别是对

数据的管理。但是对企业而言,特别是大型企业以及对安全要求较高的企业而言,和业务有关的数据是其的生命线,是不能受到任何形式的威胁,而私有云在这方面是非常有优势的,因为它一般都构建在防火墙后。 2、SLA(服务质量) 因为私有云一般在防火墙之后,而不是在某一个遥远的数据中心里,所以当公司员工访问那些基于私有云的应用时,它的SLA会非常稳定,不会受到网络不稳定的影响。 3、不影响现有IT管理的流程 对大型企业而言,流程是其管理的核心,如果没有完善的流程,企业将会成为一盘散沙。不仅与业务有关的流程非常繁多,而且IT部门的管理流程也较多,比如在数据管理和安全规定等方面。 客户面临由虚拟化向云服务转型的挑战 服务器虚拟化作为云计算的基础,已经被越来越多的客户认可,虚拟化已经成为数据中心建设过程中的首选方案,将服务器物理资源抽象成逻辑资源,让一台服务器变成几台甚至上百台互相隔离的虚拟服务器,用户将不再受限于物理上的界限,而是让CPU、内存、磁盘、I/O等硬件变成可以动态管理的“资源池”,从而提高资源的利用率,简化系统管理,实现服务器整合,让IT对业务的变化更具适应力。通过部署服务器虚拟化,用户能够获得如下收益: ?降低TCO成本,提高硬件资源利用率,节省了机房空间成本;

玄课堂 技术解决方案 目录 第2章现状分析及问题................................................ 错误!未定义书签。 2」方案背景........................................................ 错误!未定义书签。 2.2教育信息化建设的发展 .......................................... 错误!未定义书签。 2.2云课堂的推;h错谋!未定义书签。 第3章云课堂技术解决方案............................................. 错误!未定义书签。 3.1云终端方案概述?错误!未定义书签。 3.2云课堂解决方案?错误!未定义书签。 3.2. 1云课堂拓扑图............................................. 错误!未定义书签。 3. 2. 2 云课堂教学环境.......................................... 错谋!未定义书签。 3. 2. 3云课堂主要功能Z错误!未定义书签。 3. 2. 4优课数字化教学应用系统功能 ............................... 错误!未定义书签。 第4章方案优势...................................................... 错误!未定义书签。 4」私密性.......................................................... 错误!未定义书签。 4.2匸作连续性,错淚!未定义书签。 4.3方便移动性 .................................................... 错误!未定义书签。 4.4场景?致性 ..................................................... 错误!未定义书签。 4.5长期积累性 ..................................................... 错误!未定义书签。 4.6安全稳定性。错误!未定义书签。 4.7易维护性。错误!未定义书签。 4.8高效性,错误!未定义书签。 第5章实际案例,错误!未定义书签。 第1章概述 随着现代信息技术的飞速发展,越来越多的用户更加注重自身信息架构的简便易用性、安全性、可管理性和总体拥有成木。近几年信息化的高速发展,迫使越来越多的教育机构需要采用先进的信息化手段,解决各机构当前面临的数据安全隔离、信息共享、资源整合等实际问题,实现通过改进机

Powermax30? XP Duramax LT 手持割炬 专业级等离子系统, 其手持切割厚度可达 10 mm 。 切割能力厚度切割速度 推荐值切断能力 16 mm 125 mm/min 简单易用,二合一设计 ? 大电流切割厚金属,搭配 FineCut ? 易损件使用, 亦可精细切割薄金属。 ? 采用 Auto-Voltage?(自动电压调节)技术,可以插接到任何 120 V 或 240 V 电源上,系统附带插头适配器。 更快地完成作业 ? 切割电流提高 50%*,切割速度更快。 ? 减少边缘清理工作:荣获专利的易损件设计,提供出色的切割质量。? 两倍的易损件使用寿命*,切割效率平均提高 70%, 从而降低切割成本。 坚固耐用,稳定可靠 ? 新款 Duramax? L T 割炬耐高温、抗撞击。 ? 具备 Hypertherm Certified? 一贯的可靠性,确保在最严苛的环境中亦能有出色的性能表现。 ? 可选配经久耐用的手提箱,保护系统和齿轮。 *相对于 Powermax30

系统包含 ? 电源,4.5 m Duramax L T 手持割炬,带标准易损件, 4.5 m 工件夹 ? 操作和安全手册 ? 易损件套件,带有 1 个标准喷嘴,1 个电极,1 个 FineCut 喷嘴和 1 个 FineCut 导流器 ? 塑料手提箱 ? 肩带 全套易损件套件 全套易损件套件包含您的 全部易损件。通过购买全套易损件,您不仅能以较低 的成本购齐易损件,还能体验到系统的丰富功能。 851390

常见应用Array加热、通风和空气调节 (HVAC),采矿, 房屋/工厂维护,消防和急救,普通制造业,以及: 一般金属加工 汽车维修和改装

智慧云课堂解决方案

目录 1.现状介绍 (1) 2.问题分析 (1) 2.1.成本问题 (1) 2.2.日常管理维护问题 (1) 2.3.教学体验问题 (2) 2.4.资源分散问题 (2) 3.云课堂概方案概述 (3) 4.解决方案介绍 (3) 4.1.方案设计 (3) 4.2.方案优势 (5) 4.3.主要功能特性 (6) 4.3.1.广播教学 (6) 4.3.2.分组教学 (6) 4.3.3.班级管理 (7) 4.3.4.课堂管理 (7) 4.3.5.远程监控 (8) 4.3.6.远程设置 (8) 4.3.7.标准考试 (9) 5.配置参考 (9) 5.1.一体化云主机 (9) 5.2.一体化云终端 (10) 5.3.防火墙模块(可选) (10) 5.4.网络模块(可选) (10)

1.现状介绍 目前,传统的计算机教室,主要是利用计算机网络,终端使用台式计算机作为教师端及学生端教学应用程序运行环境的建设方式。根据管理的需要,另外还会采用安装硬件还原卡、电子课堂管理软件、网络同传软件等系统工具。教师通过电子课堂管理软件管理及控制学生的桌面,完成日常的教学工作。学生一般在教室完成上机操作并自行保存各自的作业。因此学校计算机教室需要采用新的云课堂解决方案,用先进的桌面虚拟化技术实现学生高效便捷使用,服务课堂教学。 2.问题分析 对于学校计算机实验室的建设方式,我们建议学校考虑如下几个重要问题。 2.1.成本问题 随着教育信息化的不断深入,在很多学校里,往往拥有多个个机房,上百台计算机。如此数量众多的计算机,如果全部采用传统计算机教室建设模式,不仅初期购置成本巨大,单单部署维护成本就占用较大投资,例如传统计算机教室台式计算机由于功率较高部署要有足够的电源配给、教室内要求独立的强电供应网络、高功率的机房制冷设备等均占用较大成本。 虽然近年来,计算机购置成本有所下降,但更新换代较快,使用年限明显缩短。因此,相对来讲依照传统计算机教室建设模式,成本仍然居高不下。 2.2.日常管理维护问题 计算机教室是进行各项教学、实验、考试以及自由上机的重要场所,需要各种各样的桌面环境以满足不同的教学场景需要,调查显示:90%的计算机教室更新一次教学环境需要4小时;90%以上的计算机教室只有1种操作系统;60%的管理员每学期有21天在刷新系统。管理维护非常复杂困难。 如何对这些桌面进行统一管理、调度、分配并快速交付和维护,实现终端桌

第1章引言 (7) 1.1国家政策推动企业上云 (7) 1.2云安全规范指导文件 (14) 1.3软件定义数据中心(SDDC) (15) 1.4软件定义安全(SDSec) (18) 第2章云安全风险分析 (19) 2.1业务牵引风险分析 (19) 2.2技术牵引风险分析 (21) 2.3监管牵引风险分析 (22) 第3章云安全需求分析 (25) 3.1云安全防护体系安全需求 (25) 3.1.1基础安全保障 (25) 3.1.2主机安全保障 (25) 3.1.3应用安全保障 (25) 3.2云安全运营体系安全需求 (25) 3.2.1安全审计服务 (25) 3.2.2安全运营服务 (26) 3.2.3态势感知服务 (26) 3.3多云/混合云架构安全联动管理需求 (26) 第4章方案设计思路 (27) 4.1软件定义安全的设计思路 (27) 4.2国际先进性参考模型 (28) 4.3方案场景适用性说明 (29) 第5章云安全管理平台产品技术方案 (30) 5.1方案概述 (30) 5.2方案架构设计 (31) 2

5.3产品功能简述 (32) 5.3.1安全市场 (32) 5.3.2组件管理 (36) 5.3.3资产管理 (37) 5.3.4租户信息同步 (38) 5.3.5监控告警 (39) 5.3.6订单管理 (41) 5.3.7工单管理 (42) 5.3.8计量计费 (42) 5.3.9报表管理 (43) 5.3.10云安全态势 (44) 5.3.11日志审计 (46) 5.4部署架构设计 (47) 5.4.1部署方案 (47) 5.4.2部署方案技术特点 (48) 5.5平台主要功能技术特点 (52) 5.5.1用户与资产结构 (52) 5.5.2自助服务模式 (54) 5.5.3安全服务链编排 (54) 5.5.4计量计费模式 (56) 5.5.5云安全态势感知 (56) 5.5.6在线/离线升级服务 (57) 5.5.7平台稳定可靠 (58) 第6章CSMP安全资源介绍部分 (60) 6.1CSMP安全服务清单介绍 (60) 6.2各安全组件产品级解决方案汇总 (62) 第7章CSMP方案核心价值 (62) 7.1安全产品服务化-合规高效 (62) 7.2安全能力一体化-智慧易管 (62) 3

云课堂、云管理、云录播解决方案 北京安地瑞科技有限公司 2017年 目录 1. 背景分析 (2) 2. 系统简述 (2) 3. 设计原则 (2) 4. 系统功能 (5) .云课堂功能 (5) .云管理功能 (11) .云录播功能 (13)

1.背景分析 教育信息化是国家整体信息化发展的重要组成部分和战略重点之一,教育信息化是教育与科技结合的标志性领域。云课堂、云管理、云录播系统的建设是学校教学质量与教学改革工程的重要组成部分,旨在充分利用名师名教的先进教学效果,培训师资,实现优质资源共享;使教师间能够更直观的相互学习及提高;同时,各种类型的课程录像文件也是学生预习和复习的重要资料,提升学习兴趣的同时,也提供了更直观、生动的教学的资料。 2.系统简述 根据当前和未来的教学发展需要,安地瑞公司凭借先进的音视频编解码技术,结合自身强大的技术研发优势,推出了云课堂、云管理、云录播系统。云课堂、云管理、云录播系统是将课程录制存储、教学资源管理共享以及课件直播点播等技术与网络技术相融合的具有强大监控管理能力的辅助教学应用系统。新一代的VOI桌面虚拟化,充分利用终端硬件计算性能(CPU、GPU),服务器依赖低,网络占用小,彻底解决了VDI架构性能低下、成本昂贵、高度依赖服务器和网络的问题,是多媒体教学应用的完美基础架构。通过网络把教学点的教学视频场景和授课计算机画面以标准的流媒体编解码技术进行实时采集压缩、集中存储及教学管理。在先进的产品和技术帮助下,使优秀的教师、课程、资源、知识得到充分的展示、记录、管理、分享。以专业化、国际化、特色化、易扩展为标准,为您提供一个集产品、技术、信息、应用于一体的教学平台,让您充分体验、享受“云”的强大处理能力。 3.设计原则 本设计方案是一项集网络技术、音视频技术、多媒体技术、环境控制技术、软件应用技术等于一体的高科技系统集成工程,而不是设备的简单叠加

易讯通 云管理平台解决方案 北京易讯通信息技术股份有限公司 26

2016年5月 26

目录 1总论3 1.1概述 3 1.2建设背景 3 2需求分析3 2.1客户现状 3 2.2客户需求 3 3建设方案5 3.1总体架构及组成 5 3.1.1系统架构图 (6) 3.1.2存储架构设计 (7) 3.1.3网络架构设计 (13) 3.1.4部署架构设计 (20) 3.2功能设计 21 3.2.1异构资源管理 (21) 3.2.2用户管理 (21) 3.2.3监控告警 (22) 26

3.2.4存储管理 (22) 3.2.5应用管理 (22) 3.2.6流程管理 (22) 3.2.7资源调度管理 (22) 4建设方案亮点23 4.1规模化的虚拟化数据中心 23 4.2兼容异构的虚拟化 23 4.3灵活的管理方式 24 4.4可靠的安全保障 25 4.5自动化的运维 25 4.6健壮的运维保障 26 26

1总论 1.1概述 1.2建设背景 2需求分析 2.1客户现状 2.2客户需求 1)提高运维管理效率,降低成本 目前管理维护人员都是进入机房,在机架旁操作服务器,但由于各主机都有自己的维护界面,造成系统维护人员需要逐个进行维护管理,显然这种单点式的维护需要耗费大量的人员成本,且效率很低。 2)提升数据中心安全性 通常在出现问题的情况下,可能进入机房进行查看与维护,而由于各系统的数量及种类在不断增加,以往单一的管理模式已经不能满足数据中心发展的需求,需要一个统一的管理门户来对数据中心进行管理维护,且在整个过程中,保证公安系统的安全,做到一站式的授权、认证,操作,审计。 3)业务保密性、可用性,稳定性 公安系统相关涉密系统都需要特别管理,对其上所载的业务需要做到安全、稳定,可用。尤其在在特殊服务器出现宕机等情况下,不能及时的连接或查看相关故障,延误了时间,可能会造成损失,这就需要做到服务器主机的高可用,高稳定,高安全。 26