Action Recognition by Hierarchical Mid-level Action Elements Tian Lan?,Yuke Zhu?,Amir Roshan Zamir and Silvio Savarese

Stanford University

Abstract

Realistic videos of human actions exhibit rich spatiotem-poral structures at multiple levels of granularity:an action can always be decomposed into multiple?ner-grained ele-ments in both space and time.To capture this intuition,we propose to represent videos by a hierarchy of mid-level ac-tion elements(MAEs),where each MAE corresponds to an action-related spatiotemporal segment in the video.We in-troduce an unsupervised method to generate this represen-tation from videos.Our method is capable of distinguish-ing action-related segments from background segments and representing actions at multiple spatiotemporal resolutions. Given a set of spatiotemporal segments generated from the training data,we introduce a discriminative clustering al-gorithm that automatically discovers MAEs at multiple lev-els of granularity.We develop a structured model that cap-tures a rich set of spatial,temporal and hierarchical rela-tions among the segments,where the action label and multi-ple levels of MAE labels are jointly inferred.The proposed model achieves state-of-the-art performance in multiple ac-tion recognition benchmarks.Moreover,we demonstrate the effectiveness of our model in real-world applications such as action recognition in large-scale untrimmed videos and action parsing.

1.Introduction

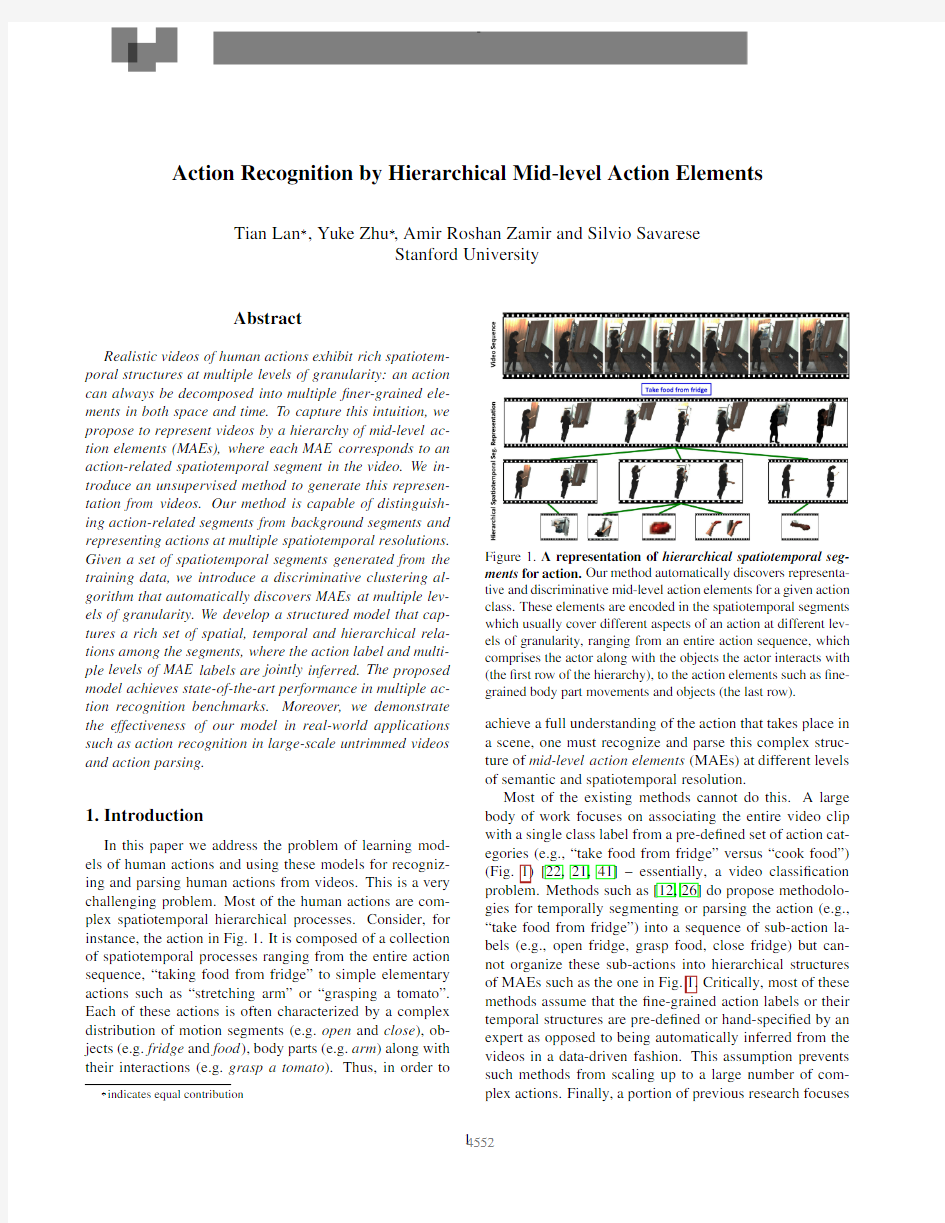

In this paper we address the problem of learning mod-els of human actions and using these models for recogniz-ing and parsing human actions from videos.This is a very challenging problem.Most of the human actions are com-plex spatiotemporal hierarchical processes.Consider,for instance,the action in Fig.1.It is composed of a collection of spatiotemporal processes ranging from the entire action sequence,“taking food from fridge”to simple elementary actions such as“stretching arm”or“grasping a tomato”. Each of these actions is often characterized by a complex distribution of motion segments(e.g.open and close),ob-jects(e.g.fridge and food),body parts(e.g.arm)along with their interactions(e.g.grasp a tomato).Thus,in order to ?indicates equal

contribution Figure1.A representation of hierarchical spatiotemporal seg-ments for action.Our method automatically discovers representa-tive and discriminative mid-level action elements for a given action class.These elements are encoded in the spatiotemporal segments which usually cover different aspects of an action at different lev-els of granularity,ranging from an entire action sequence,which comprises the actor along with the objects the actor interacts with (the?rst row of the hierarchy),to the action elements such as?ne-grained body part movements and objects(the last row). achieve a full understanding of the action that takes place in a scene,one must recognize and parse this complex struc-ture of mid-level action elements(MAEs)at different levels of semantic and spatiotemporal resolution.

Most of the existing methods cannot do this.A large body of work focuses on associating the entire video clip with a single class label from a pre-de?ned set of action cat-

egories(e.g.,“take food from fridge”versus“cook food”) (Fig.1)[22,21,41]–essentially,a video classi?cation problem.Methods such as[12,26]do propose methodolo-gies for temporally segmenting or parsing the action(e.g.,“take food from fridge”)into a sequence of sub-action la-bels(e.g.,open fridge,grasp food,close fridge)but can-not organize these sub-actions into hierarchical structures of MAEs such as the one in Fig.1.Critically,most of these methods assume that the?ne-grained action labels or their temporal structures are pre-de?ned or hand-speci?ed by an expert as opposed to being automatically inferred from the videos in a data-driven fashion.This assumption prevents such methods from scaling up to a large number of com-plex actions.Finally,a portion of previous research focuses

on modeling an action by just capturing the spatiotemporal characteristics of the actor[9,37,20,33]whereby neither the objects nor the background the actor interacts with are used to better contextualize the classi?cation process.Other methods[16,41,17,36]do propose a holistic representa-tion for activities which inherently captures some degree of background context in the video,but are unable to spatially localize or segment the actors or relevant objects.

In this work,we propose a model that is capable of modeling complex actions as a collection of mid-level ac-tion elements(MAEs)that are organized in a hierarchi-cal https://www.doczj.com/doc/eb8839708.html,pared to previous approaches,our frame-work enables:1)Multi-resolution reasoning–videos can be decomposed into a hierarchical structure of spatiotem-poral MAEs at multiple scales;2)Parsing capabilities–actions can be described(parsed)as a rich collection of spatiotemporal MAEs that capture different characteristics of the action ranging from small body motions,objects to large pieces of volumes containing person-object interac-tions.These MAEs can be spatially and temporally local-ized in the video sequence;3)Data-driven learning–the hierarchical structure of MAEs as well as the their labels do not have to be manually speci?ed,but are learnt and automatically discovered using a newly proposed weakly-supervised agglomerative clustering procedure.Note that some of the MAEs might have clear semantic meanings(see Fig.1),while others might correspond to arbitrary but dis-criminative spatiotemporal segments.In fact,these MAEs are learnt so as to establish correspondences between videos from the same action class while maximizing their discrim-inative power for different action classes.Our model has achieved state-of-the-art results on multiple action recogni-tion benchmarks and is capable of recognizing actions from large-scale untrimmed video sequences.

2.Related Work

The literature on human action recognition is immense. we refer the readers to a recent survey[2].In the following, we only review the closely related work to our work.

Space-time segment representation:Representing ac-tions as2D+t tubes is a common strategy for action recog-nition[3,5,24].Recently,there are works that use hier-archical spatiotemporal segments to capture the multi-scale characteristics of actions[5,24].Our representation differs in that we can discriminatively discover the mid-level action elements(MAEs)from a pool of region proposals.

Temporal action localization:While most action recognition approaches focus on classifying trimmed video clips[21,9,36],there are works that attempt to localize ac-tion instances from long video sequences[8,12,22,4,31]. In[31],a grammar model is developed for localizing action and(latent)sub-action instances in the video.Our work considers a more detailed parsing at both space and time,and at different semantic resolutions.

Hierarchical structure:Hierarchical structured mod-els are popular in action recognition due to its capabil-ity in capturing the multi-level granularity of human ac-tions[39,19,18,33].We follow a similar spirit by rep-resenting an action as a hierarchy of MAEs.However,most previous works focus on classifying single-action video clips where they treat these MAEs as latent variables.Our method localizes MAEs at both spatial and temporal extent.

Data-driven action primitives:Action primitives are discriminative parts that capture the appearance and motion variations of the action[26,42,13,18].Previous repre-sentations of action primitives such as interest points[42], spatiotemporal patches[13]and video snippets[26]typi-cally lack multiple levels of granularity and structures.In this work,we represent action primitives as MAEs,which are capable of capturing different aspects of actions rang-ing from the?ne-grained body part segments to the large chunks of human-object interactions.A rich set of spatial, temporal and hierarchical relations between the MAEs are also encoded.Both the MAE labels and the structures of MAEs are discovered in a data-driven manner.

Before diving into details,we?rst give an overview of our method.1)Hierarchical spatiotemporal segmentation. Given a video,we?rst develop an algorithm to automati-cally parse the video into a hierarchy of spatiotemporal seg-ments(see Fig.2).We run this algorithm for each video independently,and in this way,each video is represented as a spatiotemporal segmentation tree(Section3).2)Learn-ing.Given a set of spatiotemporal segmentation trees(one tree per video)in training,we propose a graphical model that captures the hierarchical dependencies of MAE labels at different levels of granularity.We consider a weakly su-pervised setting,where only the action label is provided for each training video,while the MAE labels are discrim-inatively discovered by clustering the spatiotemporal seg-ments.The structure of the model is de?ned by the spa-tiotemporal segmentation tree where inference can be car-ried out ef?ciently(Section4).3)Recognition and parsing.

A new video is represented by the spatiotemporal segmen-tation tree.We run our learned models on the tree for recog-nizing the actions and parsing the videos into MAE labels at different spatial,temporal and semantic resolutions. 3.Action Proposals:Hierarchical Spatiotem-

poral Segments

In this section,we describe our method for generating a hierarchy of action-related spatiotemporal segments from a video.Our method is unsupervised,i.e.during train-ing,the spatial locations of the persons and objects are not annotated.Thus,it is important that our method can automatically extract the action-related spatiotemporal seg-ments such as actors,body parts and objects from the video.

Figure2.Constructing the spatiotemporal segment hierarchy.

(a)Given a video,we?rst generate action-related region proposals for each frame.(b)Then,we cluster these proposals to produce a pool of spatiotemporal segments.(c)The last step is to agglomer-atively cluster the spatiotemporal segments into a hierarchy.

An overview of the method is shown in

Fig.2.

Our method for generating action proposals includes three major steps. A.Generating action-related spatial segments.We initially generate a diverse set of region pro-posals using the method of[10].This method works on a single frame of video,and returns a large number of seg-mentation masks that are likely to contain objects or ob-ject parts.We then score each region proposal using both appearance and motion cues,and we look for regions that have generic object-like appearance and distinct motion pat-terns relative to their surroundings.We further prune the background region proposals by training an SVM using the top scored region proposals as positive examples along with patches randomly sampled from the background as negative examples.The region proposals with scores above a thresh-old(?1)are considered as action-related spatial segments.

B.Obtaining the spatiotemporal segment pool.Given the action-related spatial segments for each frame,we seek to compute“tracklets”of these segments over time to con-struct the spatiotemporal segments.We perform spectral clustering based on the color,shape and space-time distance between pairs of spatial segments to produce a pool of spa-tiotemporal segments.In order to maintain the purity of each spatiotemporal segment,we set the number of clusters to a reasonably large number.The pool of spatiotemporal segments correspond to the action proposals at the?nest scale(bottom of Fig.1).

C.Constructing the hierarchy. Starting from the initial set of?ne-grained spatiotemporal segments,we agglomeratively cluster the most similar spa-tiotemporal segments into super-spatiotemporal segments until a single super-spatiotemporal segment is left.In this way,we produce a hierarchy of spatiotemporal segments for each video.The nodes in the hierarchy are action proposals at different levels of granularity.Due to space constraints, we refer the details of the method to Sec.S1in the supple-mentary material[1].Figure3.Graphical illustration of the model.In this example, we adopt the spatiotemporal hierarchy in Fig.2(c).The MAE labels are the red circles.The green circles are the features of each spatiotemporal segment,and the the blue circle is the action label.

4.Hierarchical Models for Action Recognition

and Parsing

So far we have explained how to parse a video into a tree of spatiotemporal segments.We run this algorithm for each video independently,and in this way,each video is repre-sented as a tree of spatiotemporal segments.Our goal is to assign each of these segments to a label so as to form a mid-level action element(MAE).We consider a weakly supervised setting.During training,only the action label is provided for each video.We discover the MAE labels in an unsupervised way by introducing a discriminative cluster-ing algorithm that assigns each spatiotemporal segment to an MAE label(Section4.1).In Section4.2,we introduce our models for action recognition and parsing,which are able to capture the hierarchical dependencies of the MAE labels at different levels of granularity.

We start by describing the notations.Given a video V n, we?rst parse it into a hierarchy of spatiotemporal seg-ments,denoted by V n={v i:i=1,...,M n}follow-ing the procedure introduced in Section3.We extract fea-tures X n from these spatiotemporal segments in the form of X n={x i:i=0,1,...,M n},where x0is the root feature vector,computed by aggregating the feature de-scriptors of all spatiotemporal segments in the video,and x i(i=1,...,M n)is the feature vector extracted from the spatiotemporal segment v i(see Fig.3).

During training,each video V n is annotated with an ac-tion label Y n∈Y and Y is the set of all possible ac-tion labels.We denote the MAE labels in the video as H n={h i:i=1,...,M n},where h i∈H is the MAE la-bel of the spatiotemporal segment v i and H is the set of all possible MAE labels(see Fig.3).For each training video,the MAE labels H n are automatically assigned to clusters of spatiotemporal segments by our discriminative clustering algorithm(Section4.1).The hierarchical struc-ture above can be compactly described using the notation

G n=(V n,E n),where a vertex v i∈V n denotes a spa-tiotemporal segment,and an edge(v i,v j)∈E n represents the interaction between a pair of spatiotemporal segments. In the next section,we describe how to automatically assign MAE labels to clusters of spatiotemporal segments.

4.1.Discovering Mid-level Action Elements(MAEs)

Given a set of training videos with action labels,our goal is to discover the MAE labels H by

assigning the clusters of spatiotemporal segments(Section3)to the correspond-ing cluster indices.Consider the example in Fig.1.The in-put video is annotated with an action label“take food from fridge”in training,and the MAEs should describe the ac-tion at different resolutions ranging from the?ne-grained action and object segments(e.g.fridge,tomato,grab)to the higher-level human-object interactions(e.g.open fridge, close fridge).These MAE labels are not provided in train-ing,but are automatically discovered by a discriminative clustering algorithm on a per-category basis.That means the MAEs are discovered by clustering the spatiotemporal segments from all the training videos within each action class.The MAEs should satisfy two key requirements:1) inclusivity-MAEs should cover all,or at least most,varia-tions in the appearance and motion of the spatiotemporal segments in an action class;2)discriminability-MAEs should be useful to distinguish an action class from others.

Inspired by the recent success of discriminative clus-tering in generating mid-level concepts[38],we develop a two-step discriminative clustering algorithm to discover the MAEs.1)Initialization:we perform an initial clus-tering to partition the spatiotemporal segments into a large number of homogeneous clusters,where each cluster con-tains segments that are highly similar in appearance and shape.2)Discriminative algorithm:a discriminative clas-si?er is trained for each cluster independently.Based on the discriminatively-learned similarity,the visually consistent clusters will then be merged into mid-level visual patterns (i.e.MAEs).The discriminative step will make sure that each MAE pattern is different enough from the rest.The two-step algorithm is explained in details below.

Initialization.We run standard spectral clustering on the feature space of the spatiotemporal segments to obtain the initial clusters.We de?ne a similarity between every pair of spatiotemporal segments v i and v j extracted from all of the training videos of the same class:K(v i,v j)= exp(?d bow(v i,v j)?d spatial(v i,v j)),where d bow is the his-togram intersection distance on the BoW representations of the dense trajectory features[41];and d spatial denotes the Euclidean distance between the averaged bounding boxes of spatiotemporal segments v i and v j in terms of four cues:x-y locations,height and width.In order to keep the purity of each cluster,we set the number of clusters quite high,pro-ducing around50clusters per action.We remove clusters M

A

E

1

M

A

E

2

Figure4.Visualization of Mid-level Action Elements(MAEs). The?gure shows two clusters(i.e.two MAEs)from the action cat-egory“take out from oven”.Each image shows the?rst frame of

a spatiotemporal segment and we only visualize?ve examples in each MAE.The two clusters capture two different temporal stages

of“take out from oven”.More visualizations are available in the supplementary video[1].

with less than5spatiotemporal segments.

Discriminative Algorithm.Given an initial set of clus-ters,we train a linear SVM classi?er for each cluster on the BoW feature space.We use all spatiotemporal segments in the cluster as positive examples.Negative examples are spa-tiotemporal segments from other action classes.For each cluster,we run the classi?er on all other clusters in the same action class.We consider the top K scoring detections of each classi?er.We de?ne the af?nity between the initial clusters c i and c j as the frequency that classi?er i and j?re on same cluster.

For each action class,we compute the pairwise af?ni-ties between all initial clusters,to obtain the af?nity matrix. Next we perform spectral clustering on the af?nity matrix

of each action independently to produce the MAE labels.In this way,the spatiotemporal segments in the training set are automatically grouped into clusters in a discriminative way, where the index of each cluster corresponds to an MAE la-bel h i∈H,where H denotes the set of all possible MAE labels.We visualize the example MAE clusters in Fig.4.

4.2.Model Formulation

For each video,we have a different tree structure

G n obtained from the spatiotemporal segmentation algo-rithm(Section3).Our goal is to jointly model the com-patibility between the input feature vectors X n,the action label Y n and MAE labels H n,as well as the dependencies between pairs of MAE labels.We achieve this by using the following potential function:

S V

n

(X n,Y n,H n)= i∈V nα?h i x i+ i∈V n b Y n,h i+ (i,j)∈E n b′h i,h j + (i,j)∈Eβ?h i,h j d ij+η?Y n x0(1)

MAE Modelα?

h i

x i:This potential captures the compat-ibility between the MAE h i and the feature vector x i of the

i-th spatiotemporal segment.In our implementation,rather

than using the raw feature[41],we use the output of the MAE classi?er on the feature vector of spatiotemporal seg-ment i.In order to learn biases between different MAEs, we append a constant1to make x i2-dimensional.

Co-occurrence Model b Y

n ,h i

,b′

h i,h j

:This potential

captures the co-occurrence constraints between pairs of MAE labels.Since the MAEs are discovered on a per-action basis,thus we restrict the co-occurrence model to allow for

only action-consistent types:b Y

n ,h i

=0if the MAE h j

is generated from the action class Y n,and?∞otherwise.

Similarly,b′

h i,h j =0if the pair of MAEs h i and h j are

generated from the same action class,and?∞otherwise.

Spatial-Temporal Modelβ?

h i,h j d ij:This potential cap-

tures the spatiotemporal relations between a pair of MAEs h i and h j.In our experiments,we explore a simpli?ed ver-sion of the spatiotemporal model with a reduced set of struc-

tures:β?

h i,h j d ij=β?h

i

bin s(i)+β?h

i

bin t(i,j).The simpli-

?cation states that the relative spatial and temporal relation of a spatiotemporal segment i with respect to its parent j is dependent on the segment type h i,but not its parent type h j. To compute the spatial feature bin s,we divide a video frame into5×5cells,and bin s(i)=1if the i-th spatiotemporal segment falls into the m-th cell,otherwise0.bin t(i,j)is a temporal feature that bins the relative temporal location of spatiotemporal segment i and j into one of three canon-ical relations including before,co-occur,and after.Hence bin t(i,j)is a sparse vector of all zeros with a single one for the bin occupied by the temporal relation between i and j.

Root Modelη?

Y n x0:This potential function captures the

compatibility between the global feature x0of the video V n and the action class Y n.In our experiment,the global fea-ture x0is computed as the aggregation of feature descriptors of all spatiotemporal segments in the video.

4.3.Inference

The goal of inference is to predict the hierarchical la-beling for a video,including the action label for the whole video as well as the MAE labels for spatiotemporal seg-ments at multiple scales.For a video V n,our inference corresponds to solving the following optimization prob-

lem:(Y?n,H?n)=arg max Y

n ,H n

S V

n

(X n,Y n,H n).For

the video V n,we jointly infer the action label Y n of the video and the MAE labels H n of the spatiotemporal seg-ments.The inference on the tree structure is exact;and we solve it using belief propagation.We emphasize that our inference returns a parsing of videos including the action label and the MAE labels at multiple levels of granularity.

4.4.Learning

Given a collection of training examples in the form of {X n,H n,Y n},we adopt a structured SVM formulation to learn the model parameters w.In the following,we develop two learning frameworks for action recognition and parsing respectively.

Action Recognition.We consider a weakly supervised setting.For a training video V n,only the action label Y n is provided.The MAE labels H n are automatically discovered using our discriminative clustering algorithm.We formulate it as follows:

min

w,ξ≥0

1

2

||w||2+C nξn

S V

n

(X n,H n,Y n)?S V

n

(X n,H?,Y?)

≥?0/1(Y n,Y?)?ξn,?n,(2) where the loss function?0/1(Y n,Y?)is a standard0-1 loss that measures the difference between the ground-truth action label Y n and the predicted action Y?for the n-th video.We use the bundle optimization solver in[7]to solve the learning problem.

Action Parsing.In the real world,a video sequence is usually not bounded for a single action,but may con-tain multiple actions at different levels of granularity:some actions occur in a sequential order;some actions could be composed of?ner-grained MAEs.See Fig.7for examples.

The proposed model can naturally be extended for ac-tion parsing.Similar to our action recognition framework, the?rst step of action parsing is to construct the spatiotem-poral segment hierarchy for an input video sequence V n, as shown in Fig.2.The only difference is that the input video is not a short video clip,but a long video sequence composed of multiple action and MAE instances.In train-ing,we?rst associate each automatically discovered spa-tiotemporal segment with a ground truth action(or MAE) label.If the spatiotemporal segment contains more than one ground truth label,we choose the label with the max-imum temporal overlap.We use Z n to denote the ground truth action and MAE labels associated with the video V n: Z n={z i:i=1,...,M n},where z i∈Z is the ground truth action(or MAE)label of the spatiotemporal segment v i,and M n is the total number of spatiotemporal segments discovered from the video.The goal of training is to learn a model that can parse the input video into a label hierarchy similar to the ground truth annotation Z n.We formulate it as follows:

min

w,ξ≥0

1

2

||w||2+C nξn

S V

n

(X n,Z n)?S V

n

(X n,Z?)≥?(Z n,Z?)?ξn,?n,

(3) where?(Z n,Z?)is a loss function for action parsing,which we de?ne as:?(Z n,Z?)= 1

M n i∈V n?0/1(z n i,z?i),where Z n is the ground truth label hierarchy,Z?is the predicted label hierarchy

and M n is the total number of spatiotemporal segments.

Note that the learning framework of action parsing is similar to Eq.(2),and the only difference lies in the loss function:we penalize incorrect predictions for every node of the spatiotemporal segments hierarchy.

5.Experiments

We conduct experiments on both action recognition and parsing.We?rst describe the datasets and experimental set-tings.We then present our results and compare with the state-of-the-art results on these datasets.

5.1.Experimental Settings and Baselines

We validate our methods on four challenging benchmark datasets,ranging from?ne-grained actions(MPI Cooking), realistic actions in sports(UCF Sports)and movies(Holly-wood2)to untrimmed action videos(THUMOS challenge). In the following,we brie?y describe the datasets,experi-mental settings and baselines.

MPI Cooking dataset[35]is a large-scale dataset of 65?ne-grained actions in cooking.It contains in total44 video sequences(or equally5609video clips,and881,755 frames),continuously recorded in kitchen.The dataset is very challenging in terms of distinguishing between actions of small inter-class variations,e.g.cut slices and cut dice. We split the dataset by taking one third of the videos to form the test set and the rest of the videos are used for training.

UCF-Sports dataset[34]consists of150video clips ex-tracted from sports https://www.doczj.com/doc/eb8839708.html,pared to MPI Cooking, the scale of UCF-Sports is small and the durations of the video clips it contains are short.However,the dataset poses many challenges due to large intra-class variations and cam-era motion.For evaluation,we apply the same train-test split as recommended by the authors of[20].

Hollywood2dataset[25]is composed of1,707video clips(823for training and884for testing)with12classes of human actions.These clips are collected from69Holly-wood movies,divided into33training movies and36test-ing movies.In these clips,actions are performed in realistic settings with camera motion and great variations.

THUMOS challenge2014[15]contains over254hours of temporally untrimmed videos and25million frames.We follow the settings of the action detection challenge.We use200untrimmed videos for training and211untrimmed videos for testing.These videos contain20action classes and are a subset of the entire THUMOS dataset.We consider a weakly supervised setting:in training,each untrimmed video is only labeled with the action class that the video contains,neither spatial nor temporal annotations are provided.Our goal is to evaluate the ability of our model in automatically extracting useful mid-level action elements (MAEs)and structures from large-scale untrimmed data.

Baselines.In order to comprehensively evaluate the per-formance of our method,we use the following baseline

MPI Cooking Per-Class

DTF[35,41]38.5

root model(ours)43.2

full model(ours)48.4

https://www.doczj.com/doc/eb8839708.html,parison of action recognition accuracies of different methods on the MPI Cooking dataset.

UCF-Sports Per-Class Hollywood2mAP

Lan et al.[20]73.1Gaidon et al.[11]54.4

Tian et al.[40]75.2Oneata et al.[28]62.4

Raptis et al.[32]79.4Jain et al.[13]62.5

Ma et al.[24]81.7Wang et al.[41]64.3

IDTF[41]79.2IDTF[41]63.0 root model(ours)80.8root model(ours)64.9

full model(ours)83.6full model(ours)66.3 https://www.doczj.com/doc/eb8839708.html,parison of our results to the state-of-the-art methods on UCF-Sports and Hollywood2datasets.Among all of the meth-ods,[32],[24],[11]and our full model use hierarchical structures.

THUMOS(untrimmed)mAP

IDTF[41]63.0

sliding window63.8

INRIA(temporally supervised)[29]66.3

full model(ours)65.4

https://www.doczj.com/doc/eb8839708.html,parison of action recognition accuracies of different methods on the THUMOS challenge(untrimmed videos). methods.1)DTF:the?rst baseline is the dense trajectory method[41],which has produced the state-of-the-art perfor-mance in multiple action recognition benchmarks.2)IDTF: the second baseline is the improved dense trajectory feature proposed in[41],which uses?sher vectors(FV)[30]to en-code the dense trajectory features.FV encoding[41,27] has been shown an improved performance over traditional Bag-of-Features encoding.3)root model:the third baseline is equivalent to our model without the hierarchical struc-ture,which only uses the IDTF features that fall into the spatiotemporal segments discovered by our method,while ignoring those in the background.4)sliding window:the fourth baseline runs sliding windows of different lengths and step sizes on an input video sequence,and performs non-maximum suppression to?nd the correct intervals of an action.This baseline is only applied to action recogni-tion of untrimmed videos and action parsing.

5.2.Experimental Results

We summarize the action recognition results on multiple benchmark datasets in Table1,2and3respectively.

Action recognition.Most existing action recognition benchmarks are composed of video clips that have been trimmed according to the action of interest.On all three benchmarks(i.e.UCF-Sports,Hollywood2and MPI),our full model with rich hierarchical structures signi?cantly

outperforms our own baseline root model (i.e.our model without hierarchical structures),which only considers the dense trajectories extracted from the spatiotemporal seg-ments discovered by our method.We can also observe

that the root model consistently improves dense trajectories [41]on all three datasets.This demonstrates that our automat-ically discovered MAEs ?re on the action-related regions and thus remove the irrelevant background trajectories.We also compare our method with the most recent results reported in the literature for UCF-Sports and Hollywood2.On UCF-Sports,all presented results follow the same train-test split [20].The baseline IDTF [24]is among the top performance.Ma et al.[24]reported 81.7%by using a bag of hierarchical space-time segments representation.We fur-ther improve their results by around 2%.On Hollywood2,our method also achieves state-of-the-art performance.The previous best result is from [41].We improve it further by 2%.Compared to the previous methods,our method is weakly supervised and does not require expensive bound-ing box annotations in training (e.g.[20,40,32])or human detection as input (e.g.[41]).

On THUMOS challenge that is composed of realistic untrimmed videos (Table 3),our method outperforms both IDTF and the sliding window baseline.Given the scale of the dataset,we skip the time-consuming spatial region pro-posals and represent action as a hierarchy of temporal seg-ments,i.e.each frame is regarded as a “spatial segment”.Our method automatically identi?es the temporal segments that are both representative and discriminative for each ac-tion class without any temporal annotation of actions in training.We also compare our methods with the best sub-mission (INRIA [29])of the temporal action localization challenge in THUMOS 2014.INRIA [29]uses a mixture of IDTF [41],SIFT [23],color features [6]and the CNN fea-tures [14].Also,their model [29]is temporally supervised ,which uses temporal annotations (the start and end frames of actions in untrimmed videos)and additional background videos in training.Our method achieves a competitive per-formance (within 1%)using only IDTF [41]and doesn’t require any temporal supervision.We provide the average precisions (AP)of all the 20action classes in Fig.5.Our method outperforms [29]in 10out of the 20classes,espe-cially Diving and CleanAndJerk ,which contain rich struc-tures and signi?cant intra-class variations.

Action parsing.Given a video sequence that contains multiple action and MAE instances,our goal is to localize each one of them.Thus during training,we assume that all of the action and MAE labels as well as their temporal ex-tent are provided.This is different from action recognition where all of the MAEs are unsupervised.We evaluate the ability of our method to perform action parsing by measur-ing the accuracy in temporally localizing all of the action and MAE instances.An action (or MAE)segment is con-

a v e r a g e p r e c i s i o n

B a

s e

b a l l P i t

c h B a s k e t b a l l D u n k B i l l i a r

d s C l

e a n A n d J e r k C l i

f f D i v i n

g C r i c k e t B o w l i n g C r i c k e t S

h o t D

i v i n g F r i s b e e C a t c h G o l f S w i n g H a m m e r T h r o w H i g h J u m p J a v e l i n T h r o w L o n g J u m p P o l e V a u l t S h o t p u t S o c c e r P e n a l t y T e n n i s S w i n g T h r o w D i s c u s V o l l e y b a l l S p i k i n g

Figure 5.Average precisions of the 20action classes of untrimmed videos from the temporal localization challenge in THUMOS.

Figure 6.Action parsing performance.We report mean Average precision (mAP)of our method and the sliding window baseline on MPI Cooking with respect to different overlapping thresholds that determine whether an action (or MAE)segment is correctly localized.

sidered as true positive if it overlaps with the ground truth segment beyond a pre-de?ned threshold.We evaluate the mean Average Precision (mAP)with the overlap threshold varying from 0.1to 0.5.

We use the original ?ne-grained action labels provided in the MPI Cooking dataset as the MAEs at the bottom level of the hierarchy,and automatically generate a set of higher-level labels by composing the ?ne-grained action la-bels.The detailed setups are explained in Sec.S2of the supplementary material [1].Examples of higher-level ac-tion labels are:“cut apart -put in bowl”,“screw open -spice -screw close”.We only consider labels with length ranging from 1to 4,and occur in the training set for more than 10times.In this way,we have in total 120action and MAE labels for parsing evaluation.

We compare our result with the sliding window baseline.The curves are shown in Fig.6.Our method shows consis-

(a)A test video containing “take out from fridge”and “take out from drawer”

actions.

(b)A test video containing “spice”,“screw close”and “put in spice holder”actions.

Figure 7.Action parsing.This ?gure shows the output of our action parser for two test videos.For each video,we visualize the inferred ?ne-grained action labels (shown on top of each image),the MAE segments (the red masks in each image)and the parent-child relations (the orange line).As we can see,our action parser is able to parse long video sequences into representative action patterns (i.e.MAEs)at multiple scales.Note that the ?gure only includes a few representative nodes of the entire tree obtained by our parser,we provide more visualizations in the supplementary video [1].

tent improvement over the baseline using different overlap threshold.If we consider an action segment is correctly lo-calized based on “intersection-over-union”score larger than 0.5(the PASCAL VOC criterion),our method outperforms the baseline by 8.5%.The mean performance gap (averaged over all different overlap threshold)between our method and the baseline is 8.6%.Some visualizations of action parsing results are shown in Fig.7.As we can see,the story of human actions is more than just the actor:as shown in the ?gure,the automatically discovered MAEs cover dif-ferent aspects of an action,ranging from human body and parts to spatiotemporal segments that are not directly re-lated to humans but carry signi?cant discriminative power (e.g.a piece of fridge segment for the action “take out from fridge”).This diverse set of mid-level visual patterns are then organized in a hierarchical way to explain the complex store of the video at different levels of granularity.

6.Conclusion

We have presented a hierarchical mid-level action ele-ment (MAE)representation for action recognition and pars-ing in videos.We consider a weakly supervised setting,where only the action labels are provided in training.Our method automatically parses an input video into a hierarchy of MAEs at multiple scales,where each MAE de?nes an action-related spatiotemporal segment in the video.We de-velop structured models to capture the rich semantic mean-ings carried by these MAEs,as well as the spatial,tem-poral and hierarchical relations among them.In this way,the action and MAE labels at different levels of granularity are jointly inferred.Our experimental results demonstrate encouraging performance over a number of standard base-line approaches as well as other reported results on several benchmark datasets.

Acknowledgments We acknowledge the support of ONR award N00014-13-1-0761.

References

[1]Supplementary material and visualization video.http://

https://www.doczj.com/doc/eb8839708.html,/?yukez/iccv2015.html. [2]J.K.Aggarwal and M.S.Ryoo.Human activity analysis:A

review.ACM Computing Survey,2011.

[3]M.Blank,L.Gorelick,E.Shechtman,M.Irani,and R.Basri.

Actions as space-time shapes.In ICCV,2005.

[4]P.Bojanowski,https://www.doczj.com/doc/eb8839708.html,jugie,F.Bach,https://www.doczj.com/doc/eb8839708.html,ptev,J.Ponce,

C.Schmid,and J.Sivic.Weakly supervised action labeling

in videos under ordering constraints.In ECCV,2014. [5]W.Brendel and S.Todorovic.Learning spatiotemporal

graphs of human activities.In ICCV,2011.

[6]S.Clinchant,J.-M.Renders,and G.Csurka.Trans-media

pseudo-relevance feedback methods in multimedia retrieval.

In Advances in Multilingual and Multimodal Information Re-trieval,pages569–576.Springer,2008.

[7]T.-M.-T.Do and https://www.doczj.com/doc/eb8839708.html,rge margin training for hid-

den markov models with partially observed states.In ICML, 2009.

[8]O.Duchenne,https://www.doczj.com/doc/eb8839708.html,ptev,J.Sivic,F.Bash,and J.Ponce.Auto-

matic annotation of human actions in video.In ICCV,2009.

[9] A.A.Efros,A.C.Berg,G.Mori,and J.Malik.Recognizing

action at a distance.In ICCV,pages726–733,2003. [10]I.Endres and D.Hoiem.Category independent object pro-

posals.In ECCV,2010.

[11] A.Gaidon,Z.Harchaoui,and C.Schmid.Activity represen-

tation with motion hierarchies.ICCV,2014.

[12]M.Hoai,https://www.doczj.com/doc/eb8839708.html,n,and F.De la Torre.Joint segmentation

and classi?cation of human actions in video.In CVPR,2011.

[13] A.Jain,A.Gupta,M.Rodriguez,and L.S.Davis.Represent-

ing videos using mid-level discriminative patches.In CVPR, 2013.

[14]Y.Jia,E.Shelhamer,J.Donahue,S.Karayev,J.Long,R.Gir-

shick,S.Guadarrama,and T.Darrell.Caffe:Convolu-tional architecture for fast feature embedding.arXiv preprint arXiv:1408.5093,2014.

[15]Y.-G.Jiang,J.Liu,A.Roshan Zamir,G.Toderici,https://www.doczj.com/doc/eb8839708.html,ptev,

M.Shah,and R.Sukthankar.THUMOS challenge:Ac-tion recognition with a large number of classes.http: //https://www.doczj.com/doc/eb8839708.html,/THUMOS14/,2014.

[16] A.Klaser,M.Marszalek,and C.Schmid.A spatio-temporal

descriptor based on3d-gradients.In British Machine Vision Conference,2008.

[17] A.Kovashka and K.Grauman.Learning a hierarchy of dis-

criminative space-time neighborhood features for human ac-tion recognition.In CVPR,2010.

[18]https://www.doczj.com/doc/eb8839708.html,n,L.Chen,Z.Deng,G.-T.Zhou,and G.Mori.Learning

action primitives for multi-level video event understanding.

In International Workshop on Visual Surveillance and Re-Identi?cation,2014.

[19]https://www.doczj.com/doc/eb8839708.html,n,T.-C.Chen,and S.Savarese.A hierarchical repre-

sentation for future action prediction.In ECCV,2014.[20]https://www.doczj.com/doc/eb8839708.html,n,Y.Wang,and G.Mori.Discriminative?gure-centric

models for joint action localization and recognition.In ICCV,2011.

[21]https://www.doczj.com/doc/eb8839708.html,ptev,M.Marszalek, C.Schmid,and B.Rozenfeld.

Learning realistic human actions from movies.In CVPR, 2008.

[22]https://www.doczj.com/doc/eb8839708.html,ptev and P.P′e rez.Retrieving actions in movies.In

ICCV,2007.

[23] D.G.Lowe.Object recognition from local scale-invariant

features.In ICCV,pages1150–1157,1999.

[24]S.Ma,J.Zhang,N.Ikizler-Cinbis,and S.Sclaroff.Action

recognition and localization by hierarchical space-time seg-ments.In ICCV,2013.

[25]M.Marszalek,https://www.doczj.com/doc/eb8839708.html,ptev,and C.Schmid.Actions in context.

In CVPR,2009.

[26]J.C.Niebles,C.-W.Chen,and L.Fei-Fei.Modeling tempo-

ral structure of decomposable motion segments for activity classi?cation.In ECCV,2010.

[27] D.Oneata,J.Verbeek,and C.Schmid.Action and event

recognition with?sher vectors on a compact feature set.In ICCV,2013.

[28] D.Oneata,J.Verbeek,and C.Schmid.Ef?cient Action Lo-

calization with Approximately Normalized Fisher Vectors.

In CVPR.IEEE,2014.

[29] D.Oneata,J.Verbeek,and C.Schmid.The lear submission

at thumos2014.In CVPR THUMOS challenge,2014. [30] F.Perronnin,J.Sanchez,and T.Mensink.Improving the

?sher kernel for large-scale image classi?cation.In ECCV, 2010.

[31]H.Pirsiavash and D.Ramanan.Parsing videos of actions

with segmental grammars.In CVPR,2014.

[32]M.Raptis,I.Kokkinos,and S.Soatto.Discovering discrim-

inative action parts from mid-level video representations.In CVPR,2012.

[33]M.Raptis and L.Sigal.Poselet key-framing:A model for

human activity recognition.In CVPR,2013.

[34]M.D.Rodriguez,J.Ahmed,and M.Shah.Action mach:A

spatial-temporal maximum average correlation height?lter for action recognition.In CVPR,2008.

[35]M.Rohrbach,S.Amin,M.Andriluka,and B.Schiele.A

database for?ne grained activity detection of cooking activ-ities.In CVPR,2012.

[36]M.Ryoo and J.Aggarwal.Spatio-temporal relationship

match:Video structure comparison for recognition of com-plex human activities.In ICCV,2009.

[37] E.Shechtman and M.Irani.Space-time behavior based cor-

relation.In CVPR,2005.

[38]S.Singh,A.Gupta,and A.A.Efros.Unsupervised discovery

of mid-level discriminative patches.In ECCV,2012. [39]K.Tang,L.Fei-Fei,and D.Koller.Learning latent temporal

structure for complex event detection.In CVPR,2012. [40]Y.Tian,R.Sukthankar,and M.Shah.Spatiotemporal de-

formable part models for action detection.In CVPR,2013.

[41]H.Wang and C.Schmid.Action recognition with improved

trajectories.In ICCV,2013.

[42] B.Yao and L.Fei-Fei.Modeling mutual context of object

and human pose in human-object interaction activities.In CVPR,2010.

《初中英语分层教学行动研究》课题研究方案 王宗平(执笔) 一、研究的背景和意义: 我校是一所城区中学,有一大部分品学兼优的学生,也有相当部分的中等生和后进生。而义务教育阶段的英语课程是为每一个学生设计的,学生两极分化现象给英语教学带来很大困难。英语分层教学是以我校教学现状为背景的教学探索,是对传统课堂教学模式的一种改革尝试,它的有效实施能为我校英语组教师在教学实践上形成更完整的理论体系。 我校实验班的老师爱岗敬业,教学基本功扎实,并深入学习新的教育教学理念,正在由“经验型”向“科研型”教师转变。 分层教学是把“因材施教”作为基本的教学原则,对传统教学的弊端进行改革,根据学生的不同学习水平和能力,依靠现有的条件和已有经验,制定不同的教学计划和教学目标,以便充分调动学生的学习积极性和主动性,从而使全体学生都能在原有基础上得到提高和发展,充分体现了素质教育的要求。 二、研究的理论依据: 分层教学的必要性。《新课程标准》指出,英语课程要面向全体学生,注重素质教育。课程特别强调要关注每个学生的情感,激发他们学习英语的兴趣,帮助他们建立学习的成就感和自信心,使他们在学习过程中发展综合语言运用能力,提高人文素养,增强实践能力,培养创新精神。它的核心思想是使每一个学生都得到不同程度的发展,强调的是“大众化”教育。 分层教学的有效性。教育心理学研究表明:人与人之间是有差异的,这种差异不仅表现在人的先天遗传因素不同,而且表现在人的后天发展的环境和条件也有区别,因而决定每一个人的发展方向、发展速度乃至最终能达到的发展水平都是不同的。因此学生学习的差异是客观存在的。美国心理学家布卢姆提出了“掌握学习的策略”的理论,他认为,“世上任何一个能够学会的东西,几乎所有的人也能学会。只要为他们提供了适当的前期和当时的学习条件。” 分层教学的人文性。“当教师把每一个学生都理解为他是一个具有个人特点

英语作业设计策略摘要:尽管现在英语作业的设计存在着严重问题,但只要我们能从学 生的实际出发进行有效的作业设计:立足兴趣,追求内容的生活化、形 式的多样化并能体现层次性,从教学的高效性出发,进行多渠道的作业 布置,就能很好地解决这些问题。 关健词:作业的设计兴趣生活化多样化层次性 虽然小学英语新课程改革已实施了好几年,但我们不少的英语教师设 计作业时仍存在着一些严重的问题,要么以读、背、抄、写等机械性题 型为主,形式单调枯燥,内容重复;要么缺乏目标意识,随意布置作业,量多质滥;或者作业形式单一,缺乏层次性,不利于学生的英语在听、说、读、写上的综合发展。要想很好地解决这些问题,我们英语老师一 定要在作业的设计上多下功夫,从学生的实际出发,进行有效的作业设计。 一、作业的设计应以“兴趣”为核心。 教师的作业设计要根据学生年龄特点,打破传统重复、枯燥的作业, 从兴趣入手,结合学生特点设计自主性、开放性、多元性作业。寓教于乐,形式新颖,玩练结合,形式多样化,让学生从游戏中找到作业的乐趣。 比如学完3a第一单元动物类单词,在户外找一面阳光能照射到的白墙,学生们可以自由发挥,用两只手做出不同的动物影子,要包括tiger, zebra, snake, 也可有学过的动物或其他东西的影子。做的好学生可以

得到一张贴纸。如果没条件去户外,在室内用投影照射在一面白墙上和学生一起活动学生可以用i like …的句子来表达,也可以用其他任何符合情景的句子表达自己的意思。这些寓教于乐的作业形式真正使作业不再成为学生的负担。另外,还可以多设计欣赏英语节目类作业。孩子都喜欢看电视,如今好多电视台都播放英语类节目,如李阳疯狂英语,中央台的洋话连篇,cctv—10套的希望英语,这些节目都配有纯正的英语,指导学生在收看的过程中,试着进行交流,让学生在欣赏生动的画面的同时,感受地道流利的英语,不知不觉中他们自己也就能够跟着说起来,学习英语的兴趣也会因此被大大地激发,这样学生自然会将学习英语当作乐趣和自我充实的手段。 二、作业设计的内容应力求生活化。 陶行知先生认为:生活即教育。的确,生活中处处有英语,生活中有取之不竭的英语教育资源。随着课堂教学的生活化,布置的作业形式也应逐步走向生活化。生活化的作业,不仅可以激发学生的好奇心,提高学生学习英语的积极性,而且还可以培养学生思维的灵活性和敏捷度。 学了水果等单词后,可布置学生回家到超市或水果店去认我们已经学过的水果类单词,把我们没学过的水果类单词纪录下来。通过英语字典或网络资源将这些单词列出来,大家一起交流学习,比一比看谁最棒。课堂上,学生都能积极踊跃展示自己的学习成果,既激发了他们学习英语的兴趣,又使得他们在动眼、动耳、动口、动手、动脑、动心、动情中主动地发展。这种走进学生生活的作业既有启发性,又有趣味性,不仅培养了学生归纳整理知识的能力,而且增强了他们学习英语、使用英

浅议分层法在QC小组活动现状调查阶段的应用 摘要:阐述了分层法在QC小组活动现状调查阶段的重要性,通过成果案例,剖析了分层法在应用过程中存在的主要问题,并对分层法在QC小组活动现状调查阶段的应用进行了深入探讨和完善提高,为QC小组活动提供了新的思路和方法。 关键词:分层法QC小组活动现状调查 QC小组活动作为质量改进和质量创新最有效的工具,已经开展了三十多年,截止到2014年,我公司已经成功举办了30届优秀QC小组成果发布会,通过不断地总结和提升,QC小组活动取得了长足进步和发展,立足解决现场实际问题,取得了显著的经济效益和社会效益。同时,通过活动的开展,也培养了一大批优秀的技术和管理人才。 一、分层法概述 所谓分层法,就是按照一定的标志,把搜集到的大量有关某一特定主题的统计数据按照不同的目的、特征加以归类、整理和汇总的一种方法。分层的目的在于把杂乱无章和错综复杂的数据加以归类汇总,使之能准确地反映客观事实。 二、分层法在现状调查阶段的重要性 《质量管理小组理论与方法》(中国质量协会编著,2013版)第四章成果评审技巧关于“现状调查”的基本任务描述为:“一是把握问题的现状,二是要找出问题的症结(关键点)所在,在确定

小组从何处改进及能够改进的程度,从而为目标值的设定提供依据”。表明了现状调查的目的就是要掌握问题的严重程度,找出关键影响因素,为目标值的设定提供依据,现状调查在整个QC小组活动中是很重要的一个环节,它起着承上启下的作用。 目前,QC小组活动现状调查常用的方法有调查表、分层法、简易图表、排列图、直方图、控制图、散布图等,而分层法是绘制调查表和排列图等其他工具的基础。从近几年的成果案例来看,分层法使用不当已成为了现状调查阶段最大的短板,直接影响到现状调查能否找准关键症结。鉴于目前小组对分层法没有引起足够的重视,在认识和使用上还存在不少误区,值得我们思考和探讨。 三、分层法在现状调查阶段应用存在的主要问题 从近几年的成果案例来看,分层法应用在现状调查阶段的主要问题表现在以下几个方面:一是,部分小组是在生产和管理的某一方面取得一定成效后,套用成果报告书程序编写成果,即所谓的倒装成果,缺乏对原始数据的收集;二是,在收集数据前没能充分考虑如何分层,导致数据收集的遗漏或缺失;三是,收集数据时进行了筛选,只收集对自己有利的数据,导致收集的数据不客观;四是,未能正确地分层或分层项目的概念混淆,导致分类数据混杂,从而无法进行归纳分析。五是,使用分层法只分析了一层,分层后发现均匀,却没有再进一步分层分析,人为地往一起合并数据。

八年级英语作业分层布置中案例分析八年级学生在英语学习中,容易产生厌学情绪。因此,分层次布置作业是非常必要的。 语法教学对我来说是个难点。一直以来都没有找到好的教学方法。这学期,我一直在研究英语分层作业的布置,因此我认为在语法教学上分层次的布置作业对语法的教学也是有好处的。我们英语教师在语法教学的过程中,充分利用现代教育资源,在语法设计上多下功夫,在设计活动时要面向全体学生,充分考虑学生的个体差异,充分体现以学生为主体的理念。作业的布置上注意激发学生的学习兴趣,帮助学生形成学习动机,使语法教学质量得到提高。 例如:教学现在完成时。教学目标中提出的几点不是所有学生都能掌握的,因此在学完语法课以后,作业的布置相对就比较重要了。 分层作业中的A类作业重点针对成绩优异的学生。 单项选择 1.He asked me _____ during the summer holidays. A. where I had been B. where I had gone C. where had I been D. where had I gone 2. What ____ Jane ____ by the time he was seven ? A. did do B. has done C did did D. had done 3. I ______ 900 English words by the time I was ten。 A. learned B. was learning C. had learned D. learnt 4. She ______lived here for ______ years. A. had a few B. has several C. had a lot of D. has a great deal of 5. By the time my parents reached home yesterday, I _____ the dinner already. A had cooked B. cooked C. have cooked D. was cooked 6. She said she __________ the principle already A .has seen B. saw C. will see D. had seen 7. She said her family _______ themselves ______ the army during the war. A. has hidden, from B. had hidden, from C. has hidden, with D. had hidden, with 8. By the time he was ten years old, he _________. A. has completed university B. has completed the university B. had completed an university D. had completed university 9. She had written a number of books ______ the end of last year. A. for B. in C. by D. at 10. He _____ to play ____ before he was 11 years old. A had learned, piano B. had learned, the piano C. has learned, the piano D. learns piano. 用动词的适当形式填空 1. We _____ (paint) the house before we _____ (move) in. 2. That rich old man ___ (make) a will before he ___ (die). 3. They ____ (study) the map of the country before they ________ (leave). 4. The robbers___(run away)before the policemen_____ (arrive).

分层教学是实施因材施教的有效方法在实际教学中,对每个学生因材施教是很难做到的,而分层教学则是解决学生之间的差距、因材施教的有效方法。教师根据学习目标,对不同层次的学生采取不同的指导方法,才能更好地满足各层次学生的需要,使他们都能得到发展。特别是对学困生,教师更要多方给他们提供学习成功的机会,使其有限的智能获得充分的发展。教师在课堂上要为学生创造良好的学习环境,要在教学活动中通过设计恰当的问题,创设问题的环境。给各层学生发言的权力,激发学生动脑思考问题,在学习实践中培养学习兴趣,激发学习动机,促进思维的发展。 我们的思考和策略: 1、个别辅导:一般学生的许多知识和技能不需要老师的特别辅导就能学会,学困生缺乏掌握抽象概念的能力,没有指导,他们往往不能自然地学会知识,需要提供具体的训练,并且要及时给予表扬和鼓励。教师在课上应该安排一定的时间让学生自己研究和探讨,让每一层的学生都能带着各自的目标有意识地进行学习,同时老师也要挤出时间给学困生以具体的辅导。 2、伙伴助学:为学困生搭配学习伙伴,既有助于学困生成绩的提高,又有利于优等生对知识的复习与巩固,使自己的知识得到升华。 3、小组讨论:小组讨论,让学生自行探讨和研究,有利于培养中等生和优等生的思维能力,同时给学困生提供了充分的学习时间。但是组内的人员来自不同的层次,因而处于不同的角色地位。一般的情况是高层次学生唱“主角”,中层次学生是“配角”,低层次学生是“观众”。长此以往,不利于中低层次学生积极的发挥,小组讨论也会变成一种纯粹的形式。鉴于这种情况,我采用“组长轮值制”来加强小组间的互动,即由高中低层次学生轮流担任组长。每个学生在小组内的

基于Matlab的层次分析法与运用 摘要:本文通过使用Matlab软件进行编程,在满足同一层次中各指标对所有的下级指标均产生影响的假定条件下,实现了层次分析法的分析运算。本程序允许用户自由设定指标层次结构内的层次数以及各层次内的指标数,通过程序的循环,用户只需输入判断矩阵的部分数据,程序可依据层次分析法的计算流程进行计算并作出判断。本程序可以方便地处理层次分析法下较大的运算量,解决层次分析法的效率问题,提高计算机辅助决策的时效性。 关键词:Matlab层次分析法判断矩阵决策 在当前信息化、全球化的大背景下,传统的手工计算已不能满足人们高效率、高准确度的决策需求。因此计算机辅助决策当仁不让地成为了管理决策的新工具、新方法。基于此,本文在充分发挥计算机强大运算功能的基础上,选用美国MathWorks公司的集成数学建模环境Matlab R2009a作为开发平台,使用M语言进行编程,对计算机辅助决策在层次分析法中的运用进行讨论。试图通过程序实现层次分析法在计算机系统上的运用,为管理决策探索出新的道路职称论文。 1 层次分析法的计算流程 根据层次分析法的相关理论,层次分析法的基本思想是将复杂的决策问题进行分解,得到若干个下层指标,再对下层指标进行分解,得到若干个再下层指标,如此建立层次结构模型,然后根据结构模型构造判断矩阵,进行单排序,最后,求出各指标对应的权重系数,进行层次总

排序。 1.1 构造层次结构模型在进行层次分析法的分析时,最主要的步骤是建立指标的层次结构模型,根据结构模型构造判断矩阵,只有判断矩阵通过了一致性检验后,方可进行分析和计算。其中,结构模型可以设计成三个层次,最高层为目标层,是决策的目的和要解决的问题,中间层为决策需考虑的因素,是决策的准则,最低层则是决策时的备选方案。一般来讲,准则层中各个指标的下级指标数没有限制,但在本文中设计的程序尚且只能在各指标具有相同数量的下级指标的假定下,完成层次分析法的分析,故本文后文选取的案例也满足这一假定。 1.2 建立判断矩阵判断矩阵是表示本层所有因素针对上一层某一个因素的相对重要性的比较给判断矩阵的要素赋值时,常采用九级标度法(即用数字1到9及其倒数表示指标间的相对重要程度),具体标度方法如表1所示。 1.3 检验判断矩阵的一致性由于多阶判断的复杂性,往往使得判断矩阵中某些数值具有前后矛盾的可能性,即各判断矩阵并不能保证完全协调一致。当判断矩阵不能保证具有完全一致性时,相应判断矩阵的特征根也将发生变化,于是就可以用判断矩阵特征根的变化来检验判断的一致性程度。在层次分析法中,令判断矩阵最大的特征值为λmax,阶数为n,则判断矩阵的一致性检验的指标记为:⑴ CI的值越大,判断矩阵的一致性越差。当阶数大于2时,判断矩阵的一致性指标CI与同阶平均随机一致性指标RI之比称为随机一致性

《在普通课堂教出尖子生的20个方法:分层教学》读后感 徐田华 我校正在进行“尊重学生差异,构建和谐课堂”的课题研究的背景下,读《在普通课堂教出尖子生的20个方法:分层教学》这本书对我而言既是一种教学理论、教学实践的学习,也的确有着比较现实指导意义。 早在两千多年前,我们的至圣先师孔子就提出了因材施教的教学原则,他承认学生在个性与才能上的差异,主张根据学生的个性与特长有针对性的进行教育,注重补偏救弊,促进学生正常发展,培养出了各具千秋的七十二贤人。而在此书中,作者琼苏穆特尼,范·弗拉门德在这本书中也提出了20个高效实用的分层教学策略,帮忙老师们因材施教充分发掘学生的天赋和才能,教出更优秀的学生。 分层教学,是一种分层的教学策略,也是一种互补合作的教学模式,更是一种先进的教学思想,强调学生个体差异是一份宝贵的可供开发的教育资源。本书主要讲在现有的课堂上,进行分层教学的20个方法。大量实用方法帮助老师给不同梯度的学生切实且急需的指导,让有天赋的孩子真正成为拔尖的学生。包括:识别课堂中尖子生的方法;对现有教学资源合理运用的方法;分层课堂由简到繁的调整方案;具体到每一个科目类别的课程分层策略。具体的分层方式有学生分层、目标分层、备课分层、评价分层。 当然了,分层的教学方式知识形式、是手段,而剥开分层教学形式的外衣,我看到的是它敢于承认学生思维方式上、发展过程中的差异,立足推动学生的发展,目的在于促进学生发展的多样性和多数学生发展的可能性。看到的是作者对学校教育中每个学生个体的尊重,是对每个学生在学校有所发展、有所提升的期望。在这个层面上,我想,这和我们学校正在进行的“尊重学生差异构建和谐

中考推断题专题复习学案 宋文 一、推断题的解题基本思路 解推断题就好比是公安人员侦破案情,要紧抓蛛丝马迹,并以此为突破口,顺藤摸瓜,最终推出答案。解题时往往需要从题目中挖出一些明显或隐含的条件,抓住突破口(突破口往往是现象特征、反应特征及结构特征),导出结论,最后别忘了把结论代入原题中验证,若“路”走得通则已经成功。 二、课前准备 1、物质的典型性质: ①最简单、相对分子质量最小的有机物是;相对分子质量最小的氧化物是。

②组成化合物种类最多的元素是元素; ③人体内含最多的金属元素是元素; ④在空气中能自燃的固体物质与最易着火的非金属单质均是指; ⑤溶于水呈碱性的气体是;反应的物质应该是铵盐与碱。 ⑥溶解时放出大量热的固体是、液体是、溶解时吸热的固体是。 ⑦一种调味品的主要成分,用该调味品可除去水壶中的水垢的是,通常用于发面食品中,使蒸出的馒头松软可口同时可除去发酵时产生的酸的是、; ⑧ CuSO4色; CuSO4溶液色;Fe2+ (亚铁离子)溶液色;Fe3+ (铁离子)溶液色; ⑨S在O2中燃烧色;S、H2在空气中燃烧色;CO、CH4在空气中燃烧色。 2、常见物质的相互转化关系 ①写出能实现下图三种物质之间转化的化学方程式: ②填下图空白并完成化学方程式: ③写出下列转换的方程式 ④箭头表示如图三种物质之间能实现的转化并写出方程式 三、知识储备【推断题常用的突破口】 1. 以特征颜色为突破口 C CO CO2 CaO CuO Cu CuSO4

(1)固体颜色 黑色Fe、C、CuO、MnO2、Fe3O4;红色Cu、Fe2O3、HgO;淡黄色S粉;绿色Cu2(OH)2CO3;蓝色CuSO4·5H2O;暗紫色KMnO4 (2)溶液颜色 蓝色溶液:含Cu2+的溶液;浅绿色溶液:含Fe2+的溶液;黄色溶液:含Fe3+的溶液 紫色溶液:含MnO4-;I2遇淀粉溶液(蓝色) (3)沉淀颜色 蓝色Cu(OH)2;红褐色Fe(OH)3;白色BaSO4、AgCl、CaCO3、Mg(OH)2、MgCO3、BaCO3 2. 以反应条件为突破口 点燃:有O2参加的反应 通电:电解H2O 催化剂:KClO3、H2O2分解制O2 高温: CaCO3分解;C还原CuO; C、 CO 还原Fe2O3 3. 以特征反应现象为突破口 (1)能使澄清石灰水变浑浊的无色无味气体是。 (2)能使黑色CuO变红(或红色Fe2O3变黑)的气体是________,固体是_______。 (3)能使燃烧着的木条正常燃烧的气体是____,燃烧得更旺的气体是____,使火焰熄灭的气体是__ _或___ _;能使带火星的木条复燃的气体是_ 。 (4)能使白色无水CuSO4粉末变蓝的气体是______。 (5)在O2中燃烧火星四射的物质是_ 。在O2中燃烧,产生大量白烟的是___________。在O2中燃烧,产生刺激性气味气体,且有蓝紫色火焰的是_______。 (6)常温下是液态的物质。 (7) 在空气中燃烧,产生刺激性气味气体,且有淡蓝色火焰的是 _______。在空气中燃烧,产生耀眼白

第二节 层次分析法的应用实例 层次分析法在解决定量与定性复杂问题时,由于方法的简单性、直观性,同时在解决各种领域的实际问题时又显示其有效性和可行性,因而深受广大工程技术人员和应用数学工作者的欢迎而被广泛采用。下面我们举例说明它的实用性。 设某港务局要改善一条河道的过河运输条件,要确定是否建立桥梁或隧道以代替现在的轮渡。 此问题可得到两个层次结构:过河效益层次结构和过河代价层次结构;由图5-3(a)和(b)分别表示。 例 过河的代价与效益分析。 (a) 过河效益层次结构 (b) 过河代价层次结构 图5-3 过河的效益与代价层次结构图 过河的效益 A 过河的效益 2B 经济效益 1B 过河的效益 3B 隧 道 2D 桥 梁 1D 渡 船 3D 美化 11 C 进出方便 10 C 舒适 9 C 自豪感 8 C 交往沟通 7C 安全可靠 6 C 建筑就业 5 C 当地商业4C 岸间商业3C 收入2C 节省时间1 C 过河的代价 A 社会代价 2B 经济代价 1B 环境代价 3B 隧 道 2D 桥 梁 1D 渡 船 3D 对生态的污染 9 C 对水的污染 8 C 汽车的排放物 7 C 居民搬迁 6 C 交往拥挤 5C 安全可靠 4 C 冲击渡船业 3 C 操作维护 2 C 投入资金 1 C

在过河效益层次结构中,对影响渡河的经济因素来说桥梁或隧道具有明显的优越性。一种是节省时间带来的效益,另一种是由于交通量的增加,可使运货增加,这就增加了地方政府的财政收入。交通的发达又将引起岸间商业的繁荣,从而有助于本地商业的发展;同时建筑施工任务又创造了大量的就业机会。以上这些效益一般都可以进行数量计算,其判断矩阵可以由货币效益直接比较而得。但社会效益和环境效益则难以用货币表示,此时就用两两比较的方法进行。从整体看,桥梁和隧道比轮渡更安全,更有助于旅行和交往,也可增加市民的自豪感。从环境效益看,桥梁和隧道可以给人们更大的舒适性、方便性,但渡船更具有美感。由此得到关于效益的各个判断矩阵如表5-9—表5-23所示。 表5-9 表5-10 表5-11 表5-12 表5-13 表5-14

分层教学如何才能做到真正的分层 ——对于我校中学分层教学实验的指导意见 中学部教学处熊伯夷 教育专家加德纳认为:“人的智能是多方面的,在一个人身上的表现有着不均衡的现象。”既然人的智能发展存在个性差异现象,而我们的教育教学又必须面向全体学生,那么,我们如何在追求共性的同时做到尊重个性?如何在减轻负担的同时又能提高质量? 再者,我国的教育从孔夫子时代起,就一直倡导“因材施教”。这就要求我们一定要从学生的实际情况出发,尊重学生的个体差异,有的放矢地进行有差别的教学,以便使每个学生都能扬长避短,学有所进。 为此,我校中学部自上一学年起,就开始在中学数学和英语两个学科对学生进行分层教学。从理论上看,分层教学无疑是使全体学生共同进步的一个有效措施,也是使因材施教落到实处的一种有效的方式。但在实际操作中,目前的分层教学实验也出现了一些困惑和瓶颈现象。最突出的问题是学生按学科成绩分层上课后,并没有做到真正意义上的“分层教学”。 如何使分层教学实施真正意义上的“分层”?笔者认为,应该从如下几个方面着手: 一、学生分层,重在进步 要对学生进行分层教学,首先必须确定对学生分层的标准。由于我们的教育对象是人,而不是像工厂中的产品制造一样千篇一律,因此,学生分层施教不能简单地依据班级学生的成绩,而要综合考量学生的自主学习能力、智力情况等因素,再对学生进行分层:A班(跃进层):基础扎实,接受能力强,学习方法正确,成绩优秀; B班(发展层):基础一般,接受能力尚可,学习比较自觉,有一定的上进心,成绩中等左右; C班(提高层):基础薄弱,接受能力不强,学习积极性不高,学习态度和学习习惯存在问题,在学习上有困难。

设计内容:语法复习之形容词 设计思路:通过不同题型的练习,对相关形容词考点的理解运用 操作模式:课堂练习 预期目的:使不同层次的学生达到相应的要求(基础达标理解运用拓展延伸) 基础达标 一、写出下列形容词的比较级和最高级 1、big 2、heavy 3、nice 4、excited 5、tired 6、difficult 7、little 8、bad 9、hot 10、short 二、单项选择 ( )1、—I always listen to the teacher in class. —It’s very clever of you to do that. A、free B、freely C、careful D、carefully ( ) 2、—How are you getting along with your classmates? Very well .They are all me. A、afraid of B、anghy with C、tired of D、friendly to ( )3、—Why are you so ? —Because our ping-pong player Wang Liqin has won the world championship. A、excited B、exciting C、bored D boring ( )4、The world is becomimg smaller and smaller because the Internet brings us . A、near B、farther C、closer D、the closest ( )5、—Do you like the song Backlight(逆光)sung by singaporean pop star stephanie Sun? —Yes ,it sounds really . A、delicious B、beautiful C、beautifully D 、terrible ( )6、—Would you like to go and see a film? —Sure,the TV programmes are too . A、surprising B、interesting C、exciting D、boring ( )7、I don’t like eating chocolate .The taste is too . A、hot B、delicious C、nice D、sweet ( )8、Mary is and she often makes her classmates lanugh. A、shy B、pretty C、busy D、funny ( )9、They all looked at the teacher when he told them the good news. A、sadly B、happily C、carefully D、angrily ( )10、—I’m leaving home this afternoon. —

中考推断题专题复习学案 一、推断题的解题基本思路 解推断题就好比是公安人员侦破案情,要紧抓蛛丝马迹,并以此为突破口,顺藤摸瓜,最终推出答案。解题时往往需要从题目中挖出一些明显或隐含的条件,抓住突破口(突破口往往是现象特征、反应特征及结构特征),导出结论,最后别忘了把结论代入原题中验证,若“路”走得通则已经成功。 二、课前准备 1、物质的典型性质: ①最简单、相对分子质量最小的有机物是;相对分子质量最小的氧化物是。 ②组成化合物种类最多的元素是元素; ③人体含最多的金属元素是元素; ④在空气中能自燃的固体物质与最易着火的非金属单质均是指; ⑤溶于水呈碱性的气体是;反应的物质应该是铵盐与碱。 ⑥溶解时放出大量热的固体是、液体是、溶解时吸热的固体是。 ⑦一种调味品的主要成分,用该调味品可除去水壶中的水垢的是,通常用于发面食品中,使蒸出的馒头松

软可口同时可除去发酵时产生的酸的是、; ⑧ CuSO4色; CuSO4溶液色;Fe2+ (亚铁离子)溶液色;Fe3+ (铁离子)溶液色; ⑨S在O2中燃烧色;S、H2在空气中燃烧色;CO、CH4在空气中燃烧色。 2、常见物质的相互转化关系 ①写出能实现下图三种物质之间转化的化学方程式: ②填下图空白并完成化学方程式: ③写出下列转换的方程式 ④箭头表示如图三种物质之间能实现的转化并写出方程式 三、知识储备【推断题常用的突破口】 1. 以特征颜色为突破口 (1)固体颜色 黑色Fe、C、CuO、MnO2、Fe3O4;红色Cu、Fe2O3、HgO;淡黄色S粉;绿色Cu2(OH)2CO3;蓝色CuSO4·5H2O;暗紫色KMnO4 (2)溶液颜色 蓝色溶液:含Cu2+的溶液;浅绿色溶液:含Fe2+的溶液;黄色溶液:含Fe3+的溶液 紫色溶液:含MnO4-;I2遇淀粉溶液(蓝色) (3)沉淀颜色 蓝色Cu(OH)2;红褐色Fe(OH)3;白色BaSO4、AgCl、CaCO3、Mg(OH)2、MgCO3、BaCO3 2. 以反应条件为突破口 点燃:有O2参加的反应 C CO CO2 CaO CuO Cu CuSO4

承诺书 我们仔细阅读了第八届苏北数学建模联赛的竞赛规则。 我们完全明白,在竞赛开始后参赛队员不能以任何方式(包括电话、电子邮件、网上咨询等)与本队以外的任何人(包括指导教师)研究、讨论与赛题有关的问题。 我们知道,抄袭别人的成果是违反竞赛规则的, 如果引用别人的成果或其他公开的资料(包括网上查到的资料),必须按照规定的参考文献的表述方式在正文引用处和参考文献中明确列出。 我们郑重承诺,严格遵守竞赛规则,以保证竞赛的公正、公平性。如有违反竞赛规则的行为,我们愿意承担由此引起的一切后果。 我们的参赛报名号为:3742 参赛组别(研究生或本科或专科):本科 参赛队员(签名) : 队员1:柯先庆 队员2:鲁松 队员3:李国强 获奖证书邮寄地址:安徽凤阳安徽科技学院数学系233100

编号专用页 参赛队伍的参赛号码:(请各个参赛队提前填写好): 3742 竞赛统一编号(由竞赛组委会送至评委团前编号):竞赛评阅编号(由竞赛评委团评阅前进行编号):

题目幸福感的评价与量化模型 摘要 本文针对身心健康、物质保障、社会关系、家庭生活以及自我价值实现等因素对人们幸福感的影响,分别运用三种不同的模型建立衡量人们幸福感的量化模型。 模型一采用灰色关联分析方法,主要根据序列曲线几何形状的相似程度来判断其联系是否紧密。经过分析求解得到五个隐变量影响程度由强至弱依次是物质保障(0.446)、身心健康(0.232)、社会幸福感(0.17)、自我价值的实现(0.093)、家庭生活(0.059)。 模型二先是用贴近度对数据进行处理,再运用层次分析法对幸福指数各因素进行权重分析,得自我价值体现对民众幸福感的影响最大,其次按影响系数从大到小依次为身心健康、物质保障、社会关系、家庭生活。 模型三运用指数拟合方法对同一地区的教师和学生的幸福指数进行分析。得到社会地位、工资与福利待遇、自我价值实现、与学生的关系、工作集体关系、业余活动是影响教师的幸福的主要因素。而健康满意度,生活满意度,学习环境满意度,自我满意度,教师满意度师是影响学生幸福的主要因素。 最后对各个模型的优缺点和推广进行了讨论分析。 关键字:幸福感灰色关联分析贴近度层次分析拟合

初中化学推断题解题技巧1、以物质的颜色为突破口 2、以物质的用途为突破口

3、以组成元素相同的物质为突破口 (1)气体氧化物:二氧化碳和一氧化碳 (2)液体氧化物:过氧化氢和氧化氢 (3)固体氧化物:氧化铁和四氧化三铁 (4)盐:氯化亚铁和氯化铁、硫酸亚铁和硫酸铁 (5)碱:氢氧化亚铁和氢氧化铁 4、以常见物质类别为突破口 (1)常见无色无味气体:氢气、氧气、一氧化碳、二氧化碳 (2)常见气体单质:氢气、氧气 (3)常见固态非金属单质:碳 (4)常见固态金属单质:铁、镁、铜、锌 (5)常见氧化物:过氧化氢、氧化氢、一氧化碳、二氧化碳、氧化钙、氧化铁、氧化铜

(6)常见的酸:稀盐酸和稀硫酸 (7)常见的碱:氢氧化钠和氢氧化钙 (8)常见的盐:氯化钠、硫酸钠、碳酸钠、硝酸银 5、以元素或物质之最为突破口 (1)地壳中的元素居前四位的是:O、Si、Al、Fe。地壳中含量最多的元素是O ;最多的非金属元素是O;最多的固态非金属元素Si;最多的金属元素是Al。 (2)空气中含量最多的是N 2 (3)相同条件下密度最小的气体是H 2 (4)相对分子质量最小的单质是H 2、氧化物是H 2 O (5)日常生活中应用最广泛的金属是Fe 6、以化学反应的特殊现象为突破口 7、以反应特点为突破口 8、以反应类型为突破口 a、化合反应 (1)燃烧红磷、铁、硫、碳(充分、不充分)、一氧化碳、氢气

木炭在氧气中充分燃烧、木炭在氧气中不充分燃烧、硫粉在氧气中燃烧、红磷在氧气中燃烧、氢气燃烧、铁丝在氧气中燃烧、镁条燃烧、铝在空气中形成保护膜、一氧化碳燃烧、二氧化碳通过炽热的碳层 (2)有水参加二氧化碳与水反应、生石灰与水反应 b、分解反应 (1)实验室制取氧气:过氧化氢和二氧化锰制氧气、高锰酸钾制取氧气、氯酸钾和二氧化锰制取氧气 (2)电解水 (3)高温煅烧石灰石、氧化汞加热分解、碳酸分解 c、置换反应 (1)氢气或碳还原金属氧化物:木炭还原氧化铜、木炭还原氧化铁、氢气还原氧化铜 (2)金属+酸(铁、镁、锌、铝):锌与稀硫酸反应、锌与稀盐酸反应、铁与稀硫酸反应、铁与稀盐酸反应、铝与稀硫酸反应、镁与稀硫酸反应 (3)金属+盐溶液(铁、铜、铝):铁与硫酸铜溶液反应、铁与硫酸铜溶液反应、铜与硝酸银反应 d、复分解反应 (1)金属氧化物+ 酸→盐+ 水 氧化铁与稀盐酸反应: 氧化铁与稀硫酸反应: 氧化铜与稀盐酸反应: 氧化铜与稀硫酸反应: 氧化锌与稀硝酸反应: (2)碱+ 酸→盐+ 水 氢氧化铜与稀盐酸反应: 氢氧化铜与稀硫酸反应:

层次分析法的一个应用 摘要 关键词: Abstract Keywords: 前言 1层次分析法理论概述 1.2层次分析法的概念 层次分析法是由美国运筹学家匹兹堡大学的 T.L.saaty教授于20世纪70年代提出的一种决策方法。它是将评价对象或问题视为一个系统,根据问题的性质和想要达到的总目标将问题分解成不同的组成要素,并按照要素间的相互关联度及隶属关系将要素按不同层次聚集组合,从而形成一个多层次的分析结构系统,把问题条理化、层次化。 层次分析法的结构符合人们思维的基本特征分解、判断、综合,把复杂的问题分解为各组成要素,再将这些要素按支配关系分组,从而形成有序的递阶层次结构,通过两两比较判断的方式确定每一层次中要素的相对重要性,然后在递阶层次结构内进行合成得到相对于目标的重要程度的总排序。因此,层次分析法从出现开始就受到了理论界广泛的支持和认可,并得到了不断的改进和完善。

1.3 AHP法下优点 (1)AHP对于解决多层次、多指标的递阶结构问题行之有效。保险公司绩效评价各指标之间相互作用,相互制约,且绩效受到多种因素的影响,可以分解成不同的子指标,例如我们从财务维度可将保险公司的绩效分解为增加盈利能力、偿付能力和发展能力三个层面,而各个层面又可以从多个角度来衡量,从而构成关联保险公司绩效评价指标体系的递阶结构体系。这样,我国上市保险公司绩效评价指标体系的递阶结构为层次分析法提供了“结构”基础。 (2)把定性分析和定量分析有机地结合起来,避免了单纯定性分析的主观臆断性和单纯利用定量分析时对数据资料的严格要求。 (3)层次分析法思路简单明了,将人们的思维数字化、系统化,便于接受并容易计算;同时,层次分析法是一种相对比较成熟的理论,有大量的是实践经验可以借鉴,这就避免了在保险公司绩效评价指标权重的确定过程中由于缺乏经验而产生的不足。 当然层次分析法也存在着缺陷:首先,其结论是建立在判断矩阵是一致性矩阵的基础上的,而在实际应用中所建立的判断矩阵,由于各方面的原因,往往不能一次性得到具有一致性的判断矩阵,而需要对其一致性进行检验,并进行多次的修改。因此,判断矩阵的建立过程比较复杂,且存在较大的主观性;其次是特征值的计算量较大;再次,许多专家认为层次分析法中采用的1-9标度法不能准确地反映专家和决策者的真实感觉和判断。采用层次分析法来确定两个指标的相对重要性时,当人们认为A1比A2重要(记为a),B1比B2明显重要(记为b),C1比C2强烈重要(记为c)时,则(c-b)比(b-a)要大得多,因而标度不应该的线性的,而是随着重要程度的增加差距越来越大。而1-9标度是等距的,所以Saaty 提出的线性评判标度与人们头脑中的实际标度并非一致。因此,这些问题都需要进行改进,但整体上不影响本文采用层次分析法确定评价指标权重。 1.4 AHP的基本步骤 用层次分析法作系统分析,首先需要把问题层次化,根据问题的性质和总目标把问题分解成为不同的因素,并且根据这些因素间的相互影响及隶属关系将因素按不同层次聚集组合,形成一个多层次的分析结构模型,并最终系统分析归结为最底层(供决策的方案、措施等)相对于最高层(总目标)的相对重要性权重的确

7A Unit 7 Integrated skills 分层作业设计 共21题(基础题用★表示,中档题用★★表示,提高题用★★★表示),学困生必做基础题、选做中档题,中等生必做中档题、选做基础题或提高题,尖子生必做提高题、选做基础题或中档题。 一、根据所给中英文提示或句意完成句子 1.★I like wearing ________________(短袖汗衫)in summer. 2.★We should do something to help the students in poor ______________(地区). 3.★★The watch is 9,000,000 yuan . I think it ’s too _______________ (not cheap) for me. 4.★★★---This wallet is too small. Can I see ________ (one more of the same kind) one? ---Sure. 5.★★★---Simon, how do you use your ______________ money? ---I use it to buy some books. 二、★★根据方框内所给词的适当形式完成句子 6. Day is on June 1st . 7. ---Who are those boys? ---They are my _______________. 8. ---Do you know the capitals of these ________________? ---Of course. 9. The students in my home town need good teachers ________________. 10. I want to buy _____________ some new clothes and shoes. 三、单项选择 11.★---What’s the ________ of the bike? ---850 yuan each. A. money B. spend C. price D. item 12.★★---__________ you _________ your help. ---That ’s all right. A. Thanks, for B. Thank, for C. Thanks, of D. Thank,

分层教学的策略与方法探讨 我觉分层教学这样的做法是可行的。比如说在我们学校就开设两个重点班和四个普通班,还有其他的班,这就使得有一部分学生产生了那种不甘人落后的心理。然后去努力的去学习,去追上他们,或者甚至超过他们。在我身边有很多这样的例子,我以前是普通班的,但后来被放到了重点班之后,我发现我们以前班的那些同学他们都开始都认真起来了,课外活动出去玩耍的也少了。另外在我们班上还是嗯老师会分层进行教学。老师让那些学在前面的学生就会自行复习,然后用剩下的学生就会跟着他讲题做一些最基本的训练。这样的方法是非常有效率的。既不耽误那些好学生,嗯,不必要在这些很基础的题上浪费时间,也能够使得这些基础差的学生,能够在短时间内提高自己的嗯基础。 班内分层目标教学模式(又称“分层教学、分类指导”教学模式) 它保留行政班,但在教学中,从好、中、差各类学生的实际出发,确定不同层次的目标,进行不同层次的教学和辅导,组织不同层次的检测,使各类学生得到充分的发展。具体做法:①了解差异,分类建组。②针对差异,分类目标。③面向全体,因材施教。④阶段考查,分类考核。⑤发展性评价,不断提高。 分层走班模式 根据学校进行的主要文化课摸底结果,按照学生知识和能力水平,分成三个或四个层次,组成新的教学集体(暂称之为A、B、C、D教学班)。“走班”并不打破原有的行政班,只是在学习这些文化课的时候,按各自的程度到不同的班去上课。“走班”实际上是一种运动式的、大范围的分层。它的特点是教师根据不同层次的学生重新组织教学内容,确定与其基础相适应又可以达到的教学目标,从而降低了“学困生”的学习难度,又满足了“学优生”扩大知识面的需求。 能力目标分层监测模式 知识与能力的分层教学由学生根据自身的条件,先选择相应的学习层次,然后根据努力的情况及后续学习的现状,再进行学期末的层次调整。这一形式参照了国外的“核心技能”原理,给学生以更多的自主选择权,学生在认识社会及认识自我的基础上,将自身的条件与阶段目标科学地联系在一起,更有利于学科知识和能力的“因材施教”。在教学上,此模式同时配合有“分层测试卡”(即分层目标练习册),由于“分层测试卡”是在承认人的发展有差异的前提下,对学